As applications and databases grow larger and more complex to meet expanding business needs, database performance often becomes a critical issue impacting production systems. If databases slow to a crawl, applications grind to a halt, users become frustrated, and revenue-impacting outages can occur. We database administrators (DBAs) often get pulled into urgent war rooms asking why the database is running slow. While some performance issues result from inadequate infrastructure in terms of CPU, memory, I/O, or network capacity, oftentimes databases just need some tuning adjustments. I outline the top 10 databases tuning best practices DBAs need to familiarize themselves with and diligently apply in both existing production databases and new development databases before they reach production. Applying these database tuning techniques can optimize SQL query performance, reduce expensive I/O operations, improve index usage, and avoid performance pitfalls—ultimately improving database speed and scalability.

1. Keep Statistics Up To Date

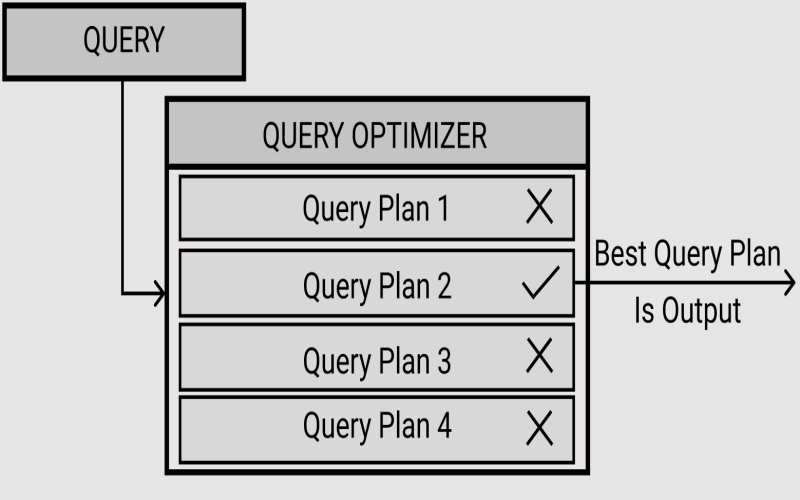

The query optimizer relies on table and index statistics to determine the optimal query plan to execute based on factors like the estimated number of rows, data distribution, and index selectivity. When these statistics become outdated, the optimizer can make poor choices leading to dangerously inefficient query plans. This warrants updating statistics regularly as data changes—at least weekly if not daily for busy applications. DBAs should also analyze statistics after major data changes like bulk loads or partition switching.

2. Avoid Using Leading Wildcards

Leading wildcards at the start of search conditions often severely impact SQL performance. For example, the following searches require full table scans because SQL cannot utilize indexes efficiently: LIKE ‘%test’ or LIKE ‘%12345’. Removing the leading wildcard can enable index seeks over scans. Dynamic full-text search functionality can also help accelerate relevant wildcard searches through dedicated text indexing. DBAs need to be on the lookout for leading wildcards in queries and review with developers whether syntax adjustments or other search approaches might achieve the desired functionality more efficiently.

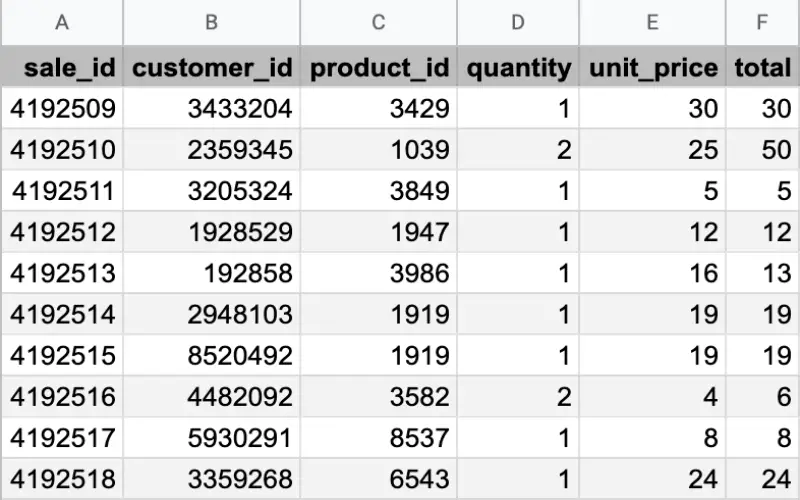

3. Avoid SELECT *

While convenient for ad-hoc querying, using SELECT * detrimentally affects performance in production queries by pulling unnecessary columns. This burdens IO, memory, and network resources. It also often prevents covering indexes where an index contains all the columns referenced in a query. Instead, queries should SELECT just the specific columns needed. If new columns get added to tables over time, SELECT * queries will unknowingly pull those too. SQL hints like SELECT * NOLOCK can also impact concurrency by preventing shared locks. DBAs should actively discourage SELECT * in favor of explicitly listing columns across development and production systems. Exceptions can made for generic reporting queries if testing proves SELECT * works reasonably.

4. Use Constraints

Constraints like NOT NULL, CHECK, UNIQUE, PRIMARY KEYS, and FOREIGN KEYS enforce data integrity which optimizes database performance over time. Constraints catch data issues within transactions before they commit rather than leaving data anomalies for SQL queries to deal with later. For example, a foreign key constraint guarantees referential integrity between parent-child tables which keeps queries joining these tables running cleanly. Constraints also enable the optimizer to leverage metadata for better execution plans and enable partial covering indexes.

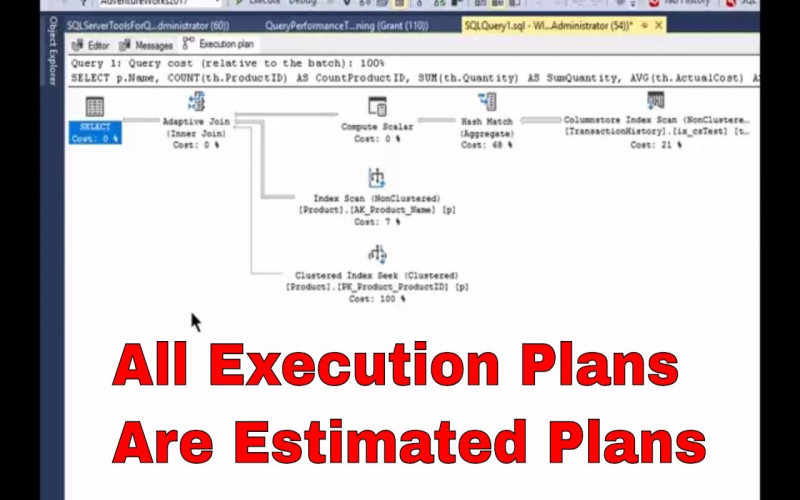

5. Look At The Actual Execution Plan, Not The Estimated Plan

By just looking at the estimated execution plan, DBAs risk missing sizable discrepancies between SQL’s expectations and actual runtime behavior after it compiles and executes a query. The estimated plan provides expectations around the number of rows, memory usage, and IO statistics which may deviate heavily from reality. Reviewing the actual execution plan instead reveals the full picture including actual row counts, index usage, swallowed sorts, and warranty sorts which indicate how far off the estimates proved. DBAs can compare the relative costs side by side to identify optimizing opportunities and refresh statistics accordingly.

6. Adjust Queries By Making One Small Change At A Time

It often takes multiple adjustments over multiple tests to properly optimize a slow or complex query. The key is to avoid changing too many variables at once. For example, if you add or alter an index, expand memory, and rewrite the query logic all within the same test, you lack precision on which change actually improved or possibly even hindered performance. It also becomes exponentially harder to roll back changes safely. Instead best practice warrants adjusting queries with careful precision by making distinct, small changes in each subsequent test.

7. Adjust Indexes To Reduce I/o

Index tuning warrants ongoing attention because suboptimal indexes slow down queries by inflating reads and writes. Seeking through indexes should outperform scanning entire tables, so indexes essential to common queries require regular analysis. Consider indexing by the query filter conditions, especially on foreign key columns frequently joined across tables. Analyze whether indexes aid your most frequent and expensive queries but avoid over-indexing which adds overhead. Where asymptotic analysis reveals support for prefixing, consider expanding indexes by INCLUDE columns, narrowing filtered columns with keys, or removing redundant indexes.

8. Analyze Query Plans

I reference analyzing plans throughout these tips because reviewing query plans ranks as one of the highest-value database tuning techniques. Plans diagram how SQL executes a query including iterator flows, joins, aggregates, sorts, spools, etc. DBAs need fluency in interpreting plan operations. Plans also reveal vital performance metrics like I/O statistics that quantify expensive scans and seeks. Compare the costs of different plans to see how adjustments impact efficiency. Analyze parallelism and bottlenecks like sorts spilling to tempdb.

9. Familiarize Yourself With Your Workload

Transactional databases running millions of real-time user queries, analytical databases processing daily batches of business intelligence reporting, and operational databases feeding key websites all present very different workloads. Workload analysis should factor heavily into database tuning by revealing usage trends, resource consumption patterns, and performance hotspots specific to your environment. Capture workloads using SQL Profiler traces or Query Store that collect running SQL over time. Import these into tools like SQL Sentry Plan Explorer for visualization.

10. Implement Software Tools

Database tuning manually reviewing plans query-by-query only scales so far. DBAs need software tools that automate analysis across environments. SQL Sentry Plan Explorer continually collects production workloads and then visually flags performance issues through wait stats, deadlocks, alerts, and Top SQL dashboards. Built-in Enlightenment recommendations analyze collected query plans and suggest indexing, code, and statistics improvements. DBAs can implement experiments safely through Plan Explorer and then measure production efficacy afterward with benchmark tests.