What Is Natural Language Processing?

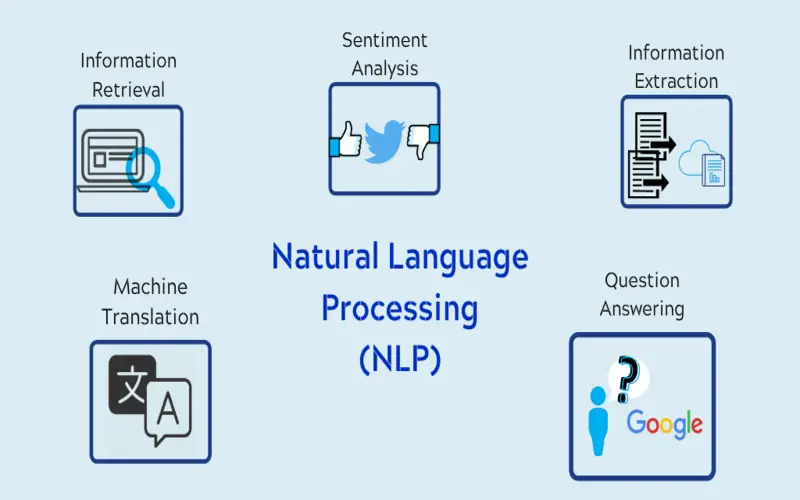

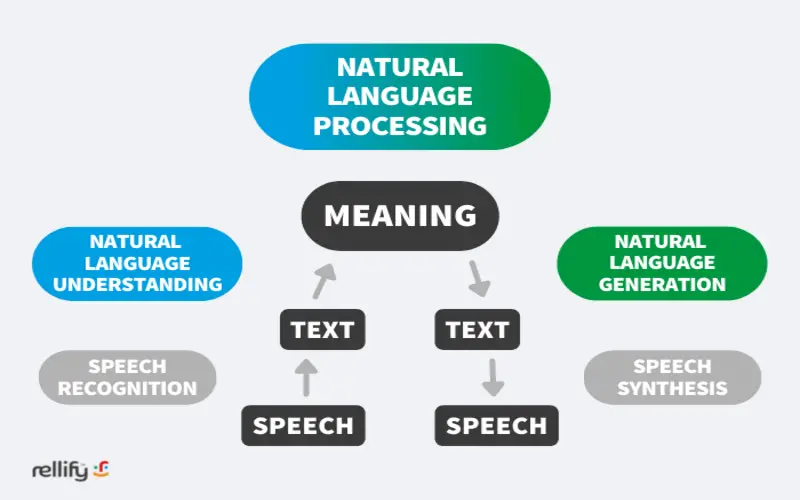

Natural language processing involves many distinct techniques for interpreting human language, ranging from statistical and machine-learning methods to rules-based and algorithmic approaches. We need a wide array of approaches because text- and voice-based data varies widely, as do the practical applications. In general terms, NLP tasks break language down into smaller, elemental pieces, try to understand the relationships between those pieces, and explore how the pieces work together to create meaning.

How Does Natural Language Processing Work?

NLP combines several techniques to turn human language into something a machine can act on. It works by applying methods designed to analyze human language efficiently: an algorithmic approach converts the input into numerical features, and NLP then identifies the relevant variables in the text or voice input. A smart home offers a practical example. If you have smart devices, you can operate them through a voice assistant on your smartphone. If you say, “Hey Google, turn on the lights,” the assistant recognizes the request and performs the action.
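The request-handling step in the smart-home example can be sketched as a tiny rule-based parser that maps an utterance to a structured command. This is a minimal illustration under stated assumptions: `parse_command`, the `ACTIONS` phrase table, and the `DEVICES` list are hypothetical, and commercial assistants use trained speech and language models rather than hand-written keyword tables.

```python
import re
from typing import Optional

# Hypothetical vocabularies for illustration only; real assistants learn these.
ACTIONS = {"turn on": "ON", "switch on": "ON", "turn off": "OFF", "switch off": "OFF"}
DEVICES = ("lights", "light", "fan", "tv")


def parse_command(utterance: str) -> Optional[dict]:
    """Map a spoken command to a structured (action, device) request."""
    # Normalize: lowercase, replace punctuation with spaces, collapse whitespace.
    text = re.sub(r"[^\w\s]", " ", utterance.lower())
    text = " ".join(text.split())

    # Find the first known action phrase and the first known device word.
    action = next((value for phrase, value in ACTIONS.items() if phrase in text), None)
    words = text.split()
    device = next((d for d in DEVICES if d in words), None)

    if action is None or device is None:
        return None  # request not understood
    return {"action": action, "device": device}


print(parse_command("Hey Google, turn on the lights."))
# → {'action': 'ON', 'device': 'lights'}
```

Returning `None` gives the caller a clear signal that the request was not understood, so it can ask the user to rephrase.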

Some of the top benefits of NLP include:

- The ability to perform large-scale data analysis.

- Reduced costs and streamlined processes.

- Increased user satisfaction.

- Deeper marketing insights.

Improved communication: NLP enables more natural communication with search apps. NLP can adapt to different styles and sentiments, creating more convenient user experiences.

Efficiency: NLP can automate many tasks that usually require people to complete. Examples include text summarization, social media and email analysis, spam detection, and language translation.

Content curation: NLP can identify the most relevant information for individual users based on their preferences. Understanding context and keywords leads to higher user satisfaction, and making data more searchable improves the effectiveness of search tools.

What Is The Future Of Natural Language Processing?

The future of NLP looks promising, with ongoing research in areas such as linguistic processing, explainability, and integration with other AI technologies. Here are some potential future developments in NLP.

- Enhanced language understanding: NLP is expected to keep improving at grasping the subtleties of human language, including sarcasm, irony, and context.

- Greater personalization: NLP can help deliver more personalized recommendations, search results, and user experiences by understanding individual preferences and behaviors.

- Integration with emerging technologies: NLP can be combined with technologies such as augmented and virtual reality to create even more engaging experiences.

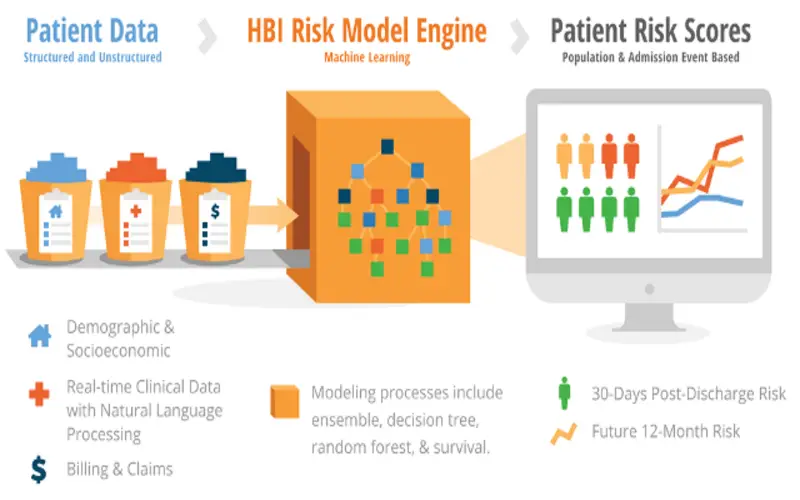

- Expanding use cases: As NLP technology becomes more advanced and accessible, it will be used in new and innovative ways in fields such as healthcare, education, and entertainment.

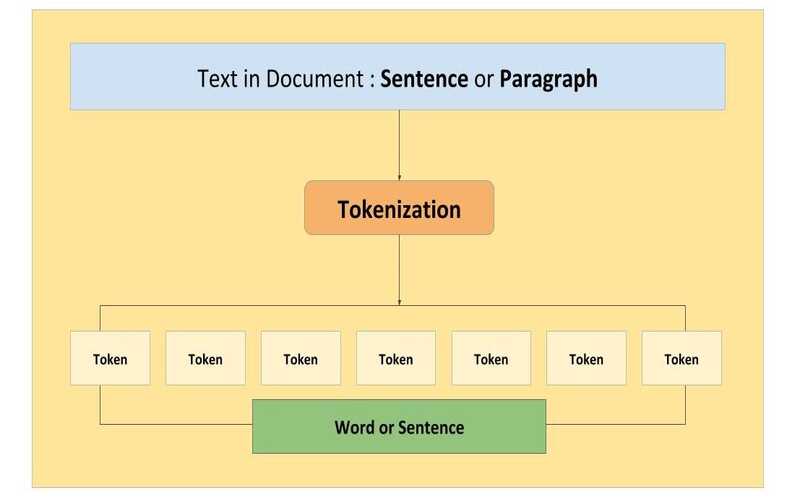

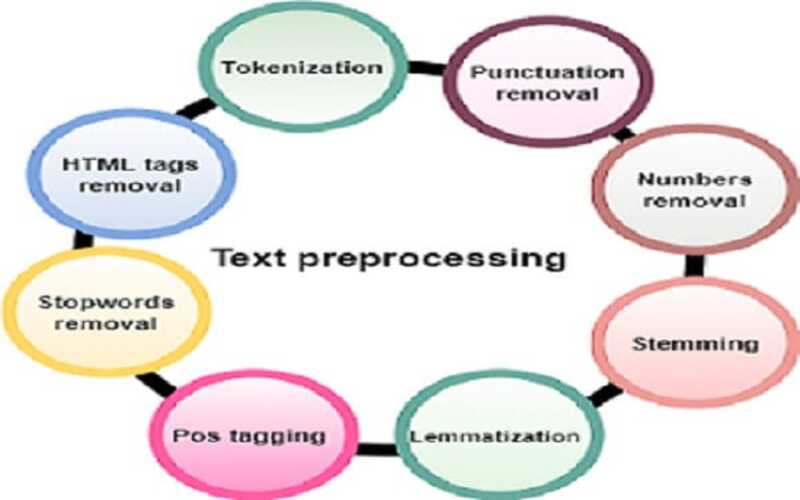

1. Word Tokenization

Word tokenization is the problem of dividing a string of written language into its component words. In English and many other languages that use some form of the Latin alphabet, a space is a reasonable approximation of a word divider. Still, splitting on spaces alone does not always give the desired results: some English compound nouns are written inconsistently and sometimes contain a space. In practice we use a library to get the desired results, so don’t get too bogged down in the intricacies.
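To see why spaces are only an approximation, the sketch below compares a plain whitespace split with a slightly smarter regular-expression tokenizer. The example sentence and the regex pattern are illustrative choices, not a standard; library tokenizers (for example, those in NLTK or spaCy) handle many more edge cases.

```python
import re

sentence = "Don't split the ice cream, it's a compound noun."

# Naive approach: treat every space as a word boundary.
# Punctuation stays glued to words, e.g. "cream," and "noun.".
whitespace_tokens = sentence.split()

# Slightly better: \w+(?:'\w+)? keeps contractions such as "Don't" together,
# and [^\w\s] emits each punctuation mark as its own token.
regex_tokens = re.findall(r"\w+(?:'\w+)?|[^\w\s]", sentence)

print(whitespace_tokens)
print(regex_tokens)
```

The whitespace split leaves punctuation attached to words, and neither approach knows that "ice cream" is a single compound noun, which is the kind of detail that motivates using a dedicated library or a later processing step.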

2. Semantic Analysis

Semantic analysis focuses on understanding the meaning of language. However, because language is ambiguous and context-dependent, semantics is considered one of the most challenging areas in NLP. Semantic tasks analyze the structure of sentences, the interactions between words, and related concepts in an attempt to discover the meaning of words and identify the topic of a text.

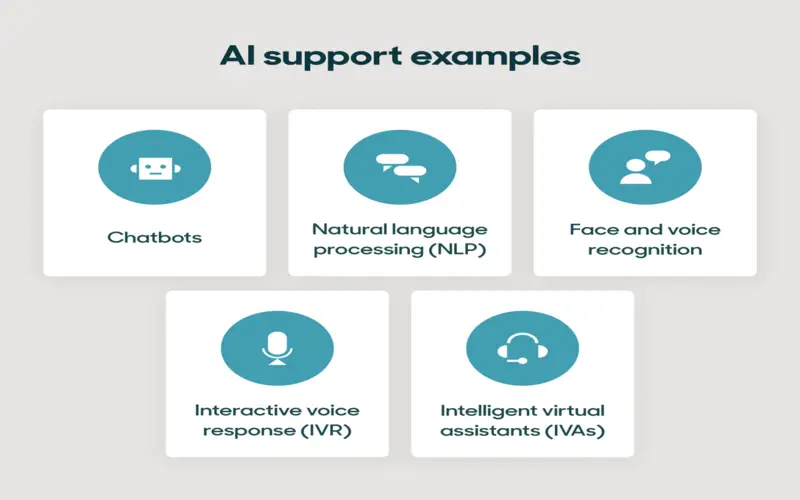

3. Smart Assistants

Think of Siri and Alexa: these intelligent virtual assistants rely on natural language processing to recognize inflection and tone and complete their tasks.

4. Predictive Text

Predictive text is one of the most familiar examples of natural language processing in action. Features such as autocorrect and autocomplete are made possible by NLP, which can even learn an individual’s language habits and make suggestions based on those patterns.
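One simple statistical way to produce next-word suggestions is a bigram model: count which words follow which in a user’s past text and suggest the most frequent followers. The sketch below assumes a made-up typing history and hypothetical `train_bigrams`/`suggest` helpers; real keyboard apps use far larger and more sophisticated language models.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        following[prev][nxt] += 1
    return following


def suggest(model, word, k=3):
    """Return up to k of the most frequent words seen right after `word`."""
    counts = model.get(word.lower(), Counter())  # unknown word -> no suggestions
    return [w for w, _ in counts.most_common(k)]


# Hypothetical typing history; "." is treated as an ordinary token here.
history = "see you soon . see you tomorrow . see you soon . thank you"
model = train_bigrams(history)
print(suggest(model, "you"))  # → ['soon', 'tomorrow']
```

Because the counts come from the user’s own history, the suggestions adapt to that person’s habits, which is the behavior the paragraph describes.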

5. Text Analytics

Natural language processing can analyze text sources ranging from email to social media posts and beyond, giving companies insights that go beyond numbers and patterns. NLP text analytics converts unstructured text into actionable, structured data for analysis using a range of semantic, statistical, and machine-learning techniques.
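As a minimal sketch of turning unstructured text into structured data, the code below tags customer messages with topics using keyword lists and returns a count per topic. The `TOPICS` keyword map and the sample messages are hypothetical; production text analytics would typically use trained classifiers rather than fixed keyword sets.

```python
import re
from collections import Counter

# Hypothetical topic keywords; a production system would learn these.
TOPICS = {
    "billing": {"invoice", "charge", "refund", "price"},
    "delivery": {"shipping", "late", "delivery", "package"},
    "product": {"broken", "quality", "size", "color"},
}


def tag_message(message):
    """Return the set of topics whose keywords appear in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    return {topic for topic, keywords in TOPICS.items() if words & keywords}


def summarize(messages):
    """Turn free-text messages into a structured count of messages per topic."""
    counts = Counter()
    for message in messages:
        counts.update(tag_message(message))  # each topic counted once per message
    return dict(counts)


messages = [
    "My package arrived late and the box was broken.",
    "Why is there an extra charge on my invoice?",
    "Still waiting for a refund.",
]
print(summarize(messages))
```

The result is a plain dictionary of counts, which can be charted or stored like any other numeric data.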

6. Natural Language Generation

Natural language generation is the process of automatically producing text from structured data in a readable format, with meaningful phrases and sentences. It is a subfield of NLP, and generating text that reads naturally is a hard problem.
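The simplest form of natural language generation is template-based: structured fields are slotted into sentence patterns, with small rules deciding what to mention. The sketch below assumes a hypothetical weather record with `city`, `high_c`, `low_c`, and `rain_chance` fields; modern systems often use neural language models instead of fixed templates.

```python
def describe_weather(record):
    """Render one structured weather record as an English sentence."""
    city = record["city"]
    high, low = record["high_c"], record["low_c"]
    rain = record["rain_chance"]

    # Content selection: only mention rain when it is likely (>= 50%).
    rain_clause = f" with a {rain}% chance of rain" if rain >= 50 else ""
    return f"{city} will see a high of {high}°C and a low of {low}°C{rain_clause}."


print(describe_weather({"city": "Oslo", "high_c": 12, "low_c": 4, "rain_chance": 70}))
# → Oslo will see a high of 12°C and a low of 4°C with a 70% chance of rain.
```

Templates are predictable and easy to audit, but every new kind of sentence needs a new rule, which is one reason the problem gets hard at scale.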

7. Online Smart Chatbots

Online chatbots are computer programs that provide ‘smart’ automated answers to common user queries. They combine automated pattern recognition with a rule-based response mechanism and are used to hold useful, meaningful conversations on a website. Initially, chatbots were used only to answer basic questions, reducing call center volume and providing fast customer support.
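A rule-based response mechanism can be approximated by matching a question’s words against keyword sets for each canned answer and handing off to a person when nothing matches. The FAQ entries, the answers, and the `reply` function are hypothetical examples, not a specific chatbot product.

```python
import re

# Hypothetical FAQ entries: keywords that trigger each canned answer.
FAQ = [
    ({"hours", "open", "close"}, "We are open 9am to 5pm, Monday to Friday."),
    ({"password", "reset", "login"}, "You can reset your password from the login page."),
    ({"refund", "return"}, "Refunds are processed within 5 business days."),
]
FALLBACK = "Let me connect you with a human agent."


def reply(question):
    """Pick the canned answer whose keywords overlap most with the question."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    best_answer, best_score = FALLBACK, 0
    for keywords, answer in FAQ:
        score = len(words & keywords)  # number of shared keywords
        if score > best_score:
            best_answer, best_score = answer, score
    return best_answer


print(reply("How do I reset my password?"))
# → You can reset your password from the login page.
```

The fallback is what keeps a simple bot useful: it answers the routine questions and routes everything else to a person.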

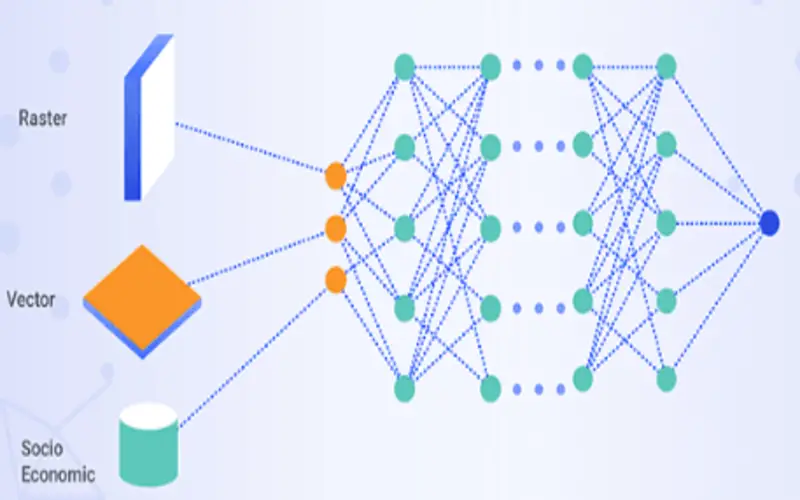

8. Neural Networks And Deep Learning

Recurrent neural networks (RNNs) and long short-term memory (LSTM) networks are used for tasks that require understanding the order of the data, such as machine translation. The transformer architecture and its derivatives, such as BERT and GPT, have set new standards for NLP performance on tasks ranging from text summarization to question answering.
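The contrast between recurrent networks and transformers shows up in their core equations, given here in their standard textbook form rather than taken from this article. A simple recurrent network updates a hidden state h_t one token at a time from the input x_t and the previous state h_{t-1} (LSTMs add gates around this update), while a transformer relates all positions at once with scaled dot-product attention over query, key, and value matrices Q, K, V, where d_k is the key dimension.

```latex
h_t = \tanh\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right)

\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

Because attention does not depend on the previous step’s output, it can be computed for all positions in parallel, whereas the recurrent update must run step by step.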

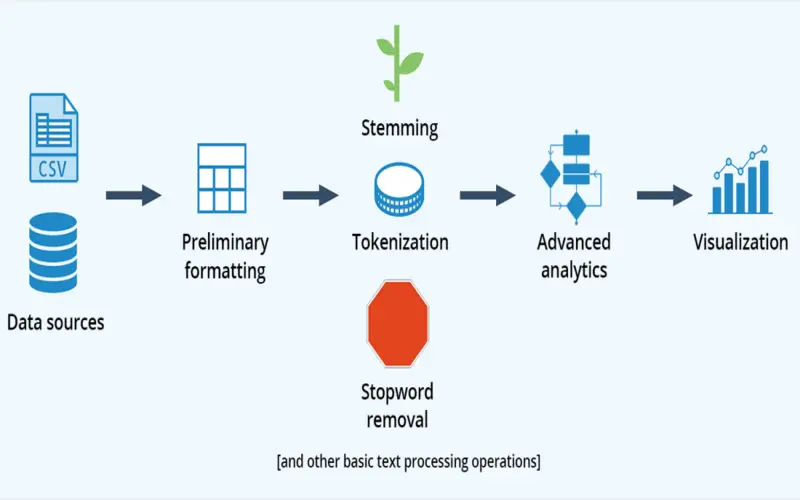

9. Tokenization And Text Preprocessing

Tokenization is a fundamental NLP technique that breaks text down into smaller units, such as words or phrases. These units help in understanding the structure of the text and extracting meaningful information. Text preprocessing includes tasks such as stemming, lemmatization, and removing stop words to improve the quality of the data for analysis.
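The preprocessing steps can be chained into a small pipeline: lowercase and tokenize, drop stop words, then stem. This sketch uses a deliberately tiny stop-word list and a crude suffix-stripping rule (`crude_stem`, a hypothetical stand-in, not the Porter stemmer), so treat it as an illustration of the flow rather than a usable preprocessor.

```python
import re

# A tiny illustrative stop-word list; real stop-word lists are much longer.
STOP_WORDS = {"the", "a", "an", "is", "are", "and", "of", "to", "in", "were"}
SUFFIXES = ("ing", "ed", "es", "s")  # crude rules, longer suffixes checked first


def crude_stem(word):
    """Strip one common suffix, keeping at least three characters of the word."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text):
    tokens = re.findall(r"[a-z]+", text.lower())            # tokenize
    content = [t for t in tokens if t not in STOP_WORDS]     # drop stop words
    return [crude_stem(t) for t in content]                  # stem


print(preprocess("The cats were chasing the mice in the garden."))
# → ['cat', 'chas', 'mice', 'garden']
```

The output also shows why lemmatization exists: a stemmer turns "chasing" into the non-word "chas" and leaves "mice" alone, whereas a dictionary-based lemmatizer would return "chase" and "mouse".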

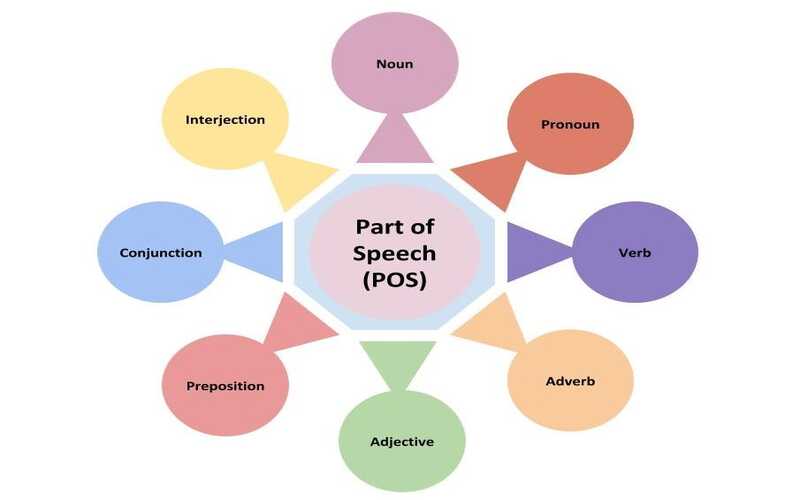

10. Part-of-Speech Tagging

NLP systems use part-of-speech tagging to assign a grammatical category to each word in a sentence. This information is critical for understanding the grammatical structure of sentences and extracting relevant information.
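A baseline part-of-speech tagger can be built from a word-to-tag lookup plus a few suffix heuristics for unknown words. The `LEXICON`, the heuristics, and the `pos_tag` function below are hypothetical; the tag names follow the Universal Dependencies convention (`DET`, `NOUN`, `VERB`, and so on), and practical taggers learn their decisions from annotated corpora and use the surrounding words as context.

```python
# Hypothetical mini-lexicon; real taggers learn tags from annotated corpora.
LEXICON = {
    "the": "DET", "a": "DET", "dog": "NOUN", "park": "NOUN",
    "in": "ADP", "runs": "VERB", "quickly": "ADV", "big": "ADJ",
}


def guess_tag(word):
    """Fallback suffix heuristics for words missing from the lexicon."""
    if word.endswith("ly"):
        return "ADV"
    if word.endswith("ing") or word.endswith("ed"):
        return "VERB"
    return "NOUN"  # default to the most common open word class


def pos_tag(sentence):
    """Tag each word, preferring the lexicon and falling back to heuristics."""
    words = sentence.lower().rstrip(".").split()
    return [(w, LEXICON[w] if w in LEXICON else guess_tag(w)) for w in words]


print(pos_tag("The big dog runs quickly in the park."))
```

Because this tagger looks at one word at a time, it cannot tell that "runs" is a noun in "three runs", which is exactly the ambiguity that context-aware taggers resolve.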

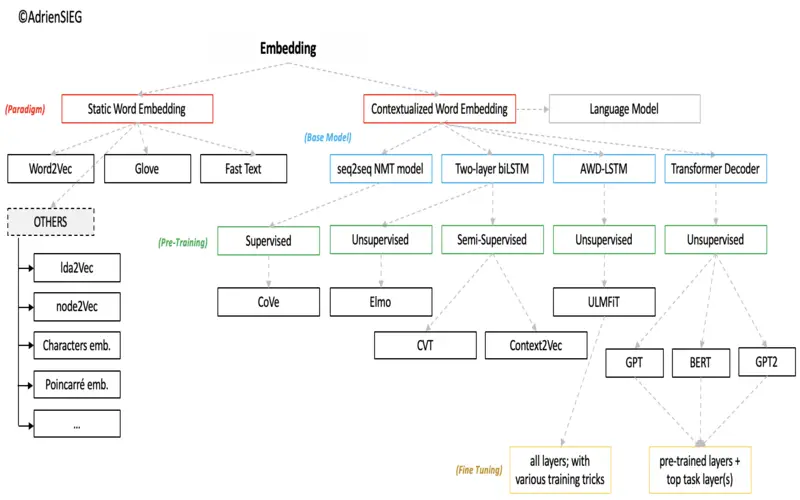

11. Contextual Word Embeddings

Recent advances in NLP include the use of contextual word embeddings, such as those produced by BERT. These embeddings capture the context in which a word appears in a sentence, leading to more accurate language understanding and improved performance on many NLP tasks.