What Is TensorFlow?

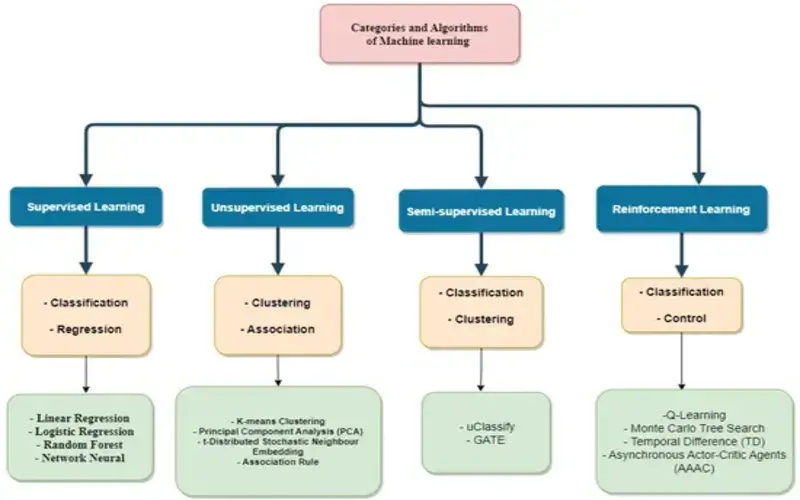

Tensors are the standard data type used in all major artificial intelligence (AI) frameworks. A tensor is a container shaped to fit our data, and its shape defines how many values it holds along each dimension. For instance, a single reading, such as a processor temperature of 45 degrees, is a scalar, the simplest kind of tensor.

At its core, a tensor is the fundamental object that extends the idea of scalars, vectors, and matrices to higher dimensions. In data science, tensors are multi-dimensional arrays of numbers that represent complex data. They are the core data structures of machine learning and deep learning frameworks such as TensorFlow and PyTorch.
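The progression from scalar to vector to matrix to higher dimensions can be sketched with nested Python lists. This is only an illustration of the idea of rank (number of dimensions), not TensorFlow's API; the helper name `rank` is hypothetical, and the sketch assumes non-empty, regularly shaped lists.

```python
def rank(value):
    """Return the number of dimensions of a nested-list tensor.

    Assumes a non-empty, regularly shaped nested list (or a plain number).
    """
    depth = 0
    # Descend into the first element until we reach a plain number.
    while isinstance(value, list):
        depth += 1
        value = value[0]
    return depth


scalar = 45                                   # rank 0: a single number
vector = [1.0, 2.0, 3.0]                      # rank 1: a list of numbers
matrix = [[1, 2], [3, 4]]                     # rank 2: rows and columns
cube = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]   # rank 3: a stack of matrices

for name, tensor in [("scalar", scalar), ("vector", vector),
                     ("matrix", matrix), ("cube", cube)]:
    print(name, "has rank", rank(tensor))
```

Each extra level of nesting adds one dimension, which is how the same container type can hold a single value, a row of values, a grid, or anything larger.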

Why Are Tensors Used?

Tensors are well suited to handling complex data such as images, audio, and text. For instance, a colour image can be represented as a 3D tensor whose dimensions correspond to height, width, and colour channels (red, green, blue). A video can be represented as a 4D tensor, with time as the extra dimension.
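As a rough illustration of these shapes, the sketch below builds an image and a video out of nested Python lists. The helpers `zeros` and `shape_of` and the tiny 4 x 6 image size are assumptions made for the example; a real framework would store the same data in its own tensor type.

```python
def zeros(shape):
    """Build a nested list filled with zeros for the given shape tuple."""
    if not shape:
        return 0
    return [zeros(shape[1:]) for _ in range(shape[0])]


def shape_of(tensor):
    """Return the size of each dimension of a regular nested list."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return tuple(dims)


height, width, channels = 4, 6, 3

# A colour image: height x width x (red, green, blue).
image = zeros((height, width, channels))
image[0][0] = [255, 0, 0]   # make the top-left pixel pure red

# A video: a sequence of frames, so time becomes the first dimension.
frames = 5
video = [zeros((height, width, channels)) for _ in range(frames)]

print(shape_of(image))   # (4, 6, 3)
print(shape_of(video))   # (5, 4, 6, 3)
```

Placing time first is just one convention; the point is only that a video needs one more dimension than a single image.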

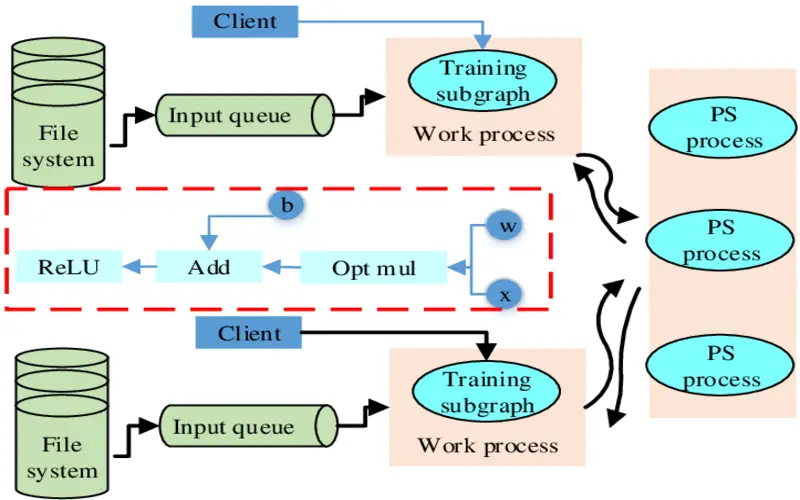

How Does TensorFlow Work?

TensorFlow lets you build dataflow graphs that describe how data moves through a computation. Each node in the graph represents a mathematical operation, and each connection, or edge, between nodes is a multidimensional data array (a tensor). TensorFlow takes its inputs as multidimensional arrays, and you build a flowchart of the operations to be performed on those inputs.

Some Key Features Of TensorFlow

- Works efficiently with mathematical expressions involving multi-dimensional arrays.

- Good support for deep neural networks and machine learning concepts.

- High scalability of computation across machines and large data sets.

- GPU/CPU computing, where the same code can run on both architectures.

Together, these features make TensorFlow well suited to machine intelligence at production scale.

1. Data Flow Graphs

In TensorFlow, computation is described using data flow graphs. Each node in the graph represents an instance of a mathematical operation (such as addition, division, or multiplication), and each edge is a multidimensional data set (a tensor) on which the operations are performed.

Because TensorFlow works with computational graphs, each node represents the instantiation of an operation, and each operation has zero or more inputs and zero or more outputs.
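The idea of nodes as operations and edges as the values flowing between them can be sketched in a few lines of plain Python. This is a conceptual toy, not how TensorFlow is implemented; the `Node` class and `constant` helper are hypothetical names, and plain floats stand in for tensors.

```python
class Node:
    """One operation in a data flow graph."""

    def __init__(self, name, op, inputs=()):
        self.name = name
        self.op = op                  # function that computes this node's output
        self.inputs = list(inputs)    # incoming edges: nodes whose outputs we consume

    def evaluate(self):
        # Evaluate every input node first, then apply this node's operation.
        args = [node.evaluate() for node in self.inputs]
        return self.op(*args)


def constant(name, value):
    """A node with zero inputs that simply produces a fixed value."""
    return Node(name, lambda: value)


# Build the graph for (a * b) + c. Nothing is computed yet.
a = constant("a", 2.0)
b = constant("b", 3.0)
c = constant("c", 4.0)
product = Node("mul", lambda x, y: x * y, [a, b])
total = Node("add", lambda x, y: x + y, [product, c])

# Running the final node pulls values through the edges.
result = total.evaluate()
print(result)   # 10.0
```

The constants illustrate operations with zero inputs, while `mul` and `add` each take two inputs and produce one output.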

Edges in TensorFlow fall into two categories. Normal edges carry data structures (tensors), so the output of one operation can become the input of another. Special edges carry no data; they are used to control the dependency between two nodes, setting the order of execution so that one node waits for another to complete.
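The effect of the two kinds of edges on execution order can be sketched with a topological sort from Python's standard library. The operation names and the `execution_order` helper are hypothetical; the sketch only shows that a special (control) edge constrains ordering in the same way a data edge does, even though no value travels along it.

```python
from graphlib import TopologicalSorter

# Normal edges: the output of the first operation feeds the second.
data_edges = [("load", "scale"), ("scale", "report")]

# Special (control) edges: the second operation must wait for the first,
# but no data is passed between them.
control_edges = [("update_counter", "report")]


def execution_order(data_edges, control_edges):
    """Return one valid order that respects both kinds of edges."""
    predecessors = {}
    for src, dst in data_edges + control_edges:
        predecessors.setdefault(dst, set()).add(src)
        predecessors.setdefault(src, set())
    return list(TopologicalSorter(predecessors).static_order())


order = execution_order(data_edges, control_edges)
print(order)
```

Several orders can be valid here. What is guaranteed is that `report` runs after both `scale` (a data dependency) and `update_counter` (a control dependency).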

2. Prepare And Load Data For Successful ML Results

Data can be the most important factor in the success of your ML efforts. TensorFlow provides various tools to help you consolidate, clean, and preprocess data at scale:

- Standard datasets for initial training and validation.

- Highly scalable data pipelines for loading data.

- Preprocessing layers for common input transformations.

- Tools to validate and transform large datasets.

Additionally, responsible AI tools can help you detect and remove bias in your data, so that your models produce fair, ethical outcomes.
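The loading, cleaning, and preprocessing steps listed above can be pictured as a chain of lazy stages. The sketch below uses plain Python generators rather than TensorFlow's own data tools, and the stage names (`read_records`, `clean`, `scale`, `batch`) and the sample records are all made up for illustration.

```python
from itertools import islice


def read_records(rows):
    """Yield (features, label) records one at a time instead of all at once."""
    for row in rows:
        yield row


def clean(records):
    """Drop records that contain a missing feature value."""
    for features, label in records:
        if None not in features:
            yield features, label


def scale(records, factor):
    """Preprocess features by dividing each value by a constant."""
    for features, label in records:
        yield [x / factor for x in features], label


def batch(records, size):
    """Group records into lists of at most `size` items."""
    iterator = iter(records)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


raw = [([255, 0], 1), ([None, 10], 0), ([51, 102], 0),
       ([0, 255], 1), ([204, 153], 1)]

# Stages are chained lazily; nothing runs until the batches are consumed.
pipeline = batch(scale(clean(read_records(raw)), 255), size=2)
batches = list(pipeline)

for i, items in enumerate(batches):
    print("batch", i, items)
```

Because each stage pulls records on demand, the same structure works whether the source is a short list, as here, or a file too large to fit in memory.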

3. Build And Fine-tune Models With The TensorFlow Ecosystem

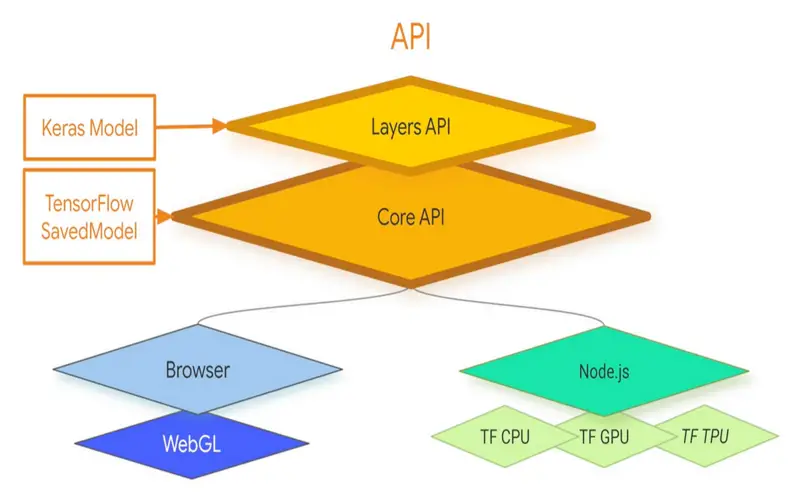

Explore an entire ecosystem built on the Core framework, which simplifies model building, training, and export. TensorFlow supports distributed training, immediate model iteration, easy debugging with Keras, and much more. Tools like Model Analysis and TensorBoard help you track development and improvement throughout your model's lifecycle. To help you get started, browse collections of pre-trained models from Google and the community at TensorFlow Hub, or find implementations of state-of-the-art research models in the Model Garden.

4. Deploy Models On-device, In The Browser, On-Prem, Or In The Cloud

TensorFlow offers robust capabilities for deploying your models in any environment: servers, edge devices, browsers, mobile, microcontrollers, CPUs, GPUs, and FPGAs. TensorFlow Serving can run ML models at production scale on the most advanced processors in the world, including Google's custom Tensor Processing Units (TPUs). If you need to analyse data close to its source to reduce latency and improve data privacy, the TensorFlow Lite framework lets you run models on mobile devices, edge computing devices, and even microcontrollers, while the TensorFlow.js framework lets you run machine learning with only a web browser.

5. Open-source Platform

TensorFlow is an open-source platform, which makes it available to all users and ready for building any kind of system on top of it.

6. Data Visualization

TensorFlow offers a graphical approach to visualizing data. With TensorBoard, it also makes debugging individual nodes simple, which reduces the effort of inspecting the entire code and helps resolve problems in the neural network efficiently.

7. Keras Friendly

TensorFlow is compatible with Keras, allowing users to write some high-level functionality in it. Keras provides system-specific functionality to TensorFlow, such as pipelining, estimators, and eager execution. The Keras functional API supports a variety of topologies with different combinations of inputs, outputs, and layers.

8. Easy Model Building

TensorFlow provides multiple levels of abstraction, so you can choose the right one for your needs. Build and train models using the high-level Keras API, which makes getting started with TensorFlow and machine learning straightforward. If you need more flexibility, eager execution allows for immediate iteration and intuitive debugging. For larger jobs, TensorFlow also supports distributed training on different hardware configurations without changing the model definition.

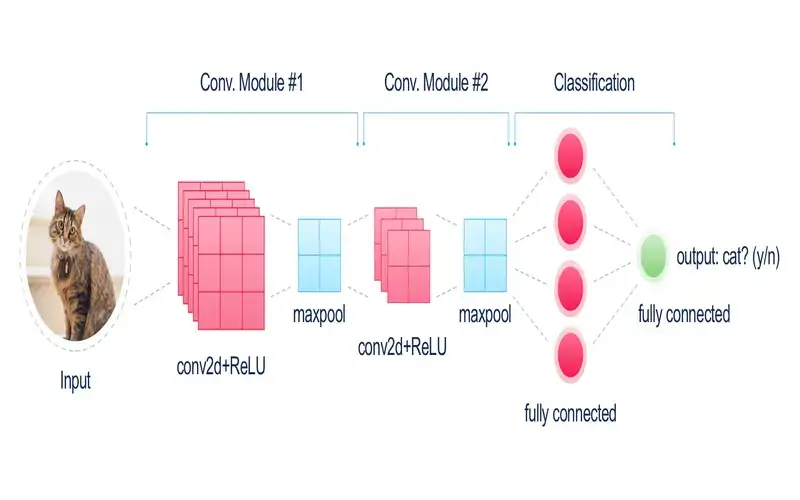

9. Image Recognition

TensorFlow can analyse the content of an image, and image recognition is a technology with great potential. For instance, Google has developed a function that enables a machine to recognize a picture of an animal and describe it in a sentence. Image recognition is also used in automatic brake systems, which apply the brakes when a collision appears likely after recognizing a sign or a similar object in the image, and for speed control and steering wheel operation. In online stores, learning from counterfeit label images has enabled the development of systems that detect similar images and raise an alert.

10. Parallelism To Reduce Memory Use

Thanks to the parallelism of its work models, TensorFlow is used as a hardware acceleration library. It uses different distribution strategies for GPU and CPU systems. Depending on their needs, users may choose to run their code on either of the two architectures. The system chooses a GPU if none is specified. This approach reduces memory allocation to some degree.
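The placement rule described above (use the requested device, otherwise prefer a GPU) can be written as a small function. This is a plain-Python sketch of the rule only, not TensorFlow's placement logic; the function name `choose_device` and the device strings such as `"GPU:0"` are assumptions made for the example.

```python
def choose_device(requested, available):
    """Pick a device name following a 'GPU unless told otherwise' rule.

    requested: a device name such as "CPU:0", or None if the user did not choose.
    available: list of device names present on the machine.
    """
    if requested is not None:
        if requested not in available:
            raise ValueError(f"device {requested!r} is not available")
        return requested
    # No explicit choice: prefer the first GPU if there is one.
    for device in available:
        if device.startswith("GPU"):
            return device
    # Otherwise fall back to the first available device.
    return available[0]


devices = ["CPU:0", "GPU:0"]
print(choose_device(None, devices))       # GPU:0
print(choose_device("CPU:0", devices))    # CPU:0
print(choose_device(None, ["CPU:0"]))     # CPU:0
```

Keeping the rule in one place is what lets the same model code run unchanged on either architecture.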