What Is Responsible AI?

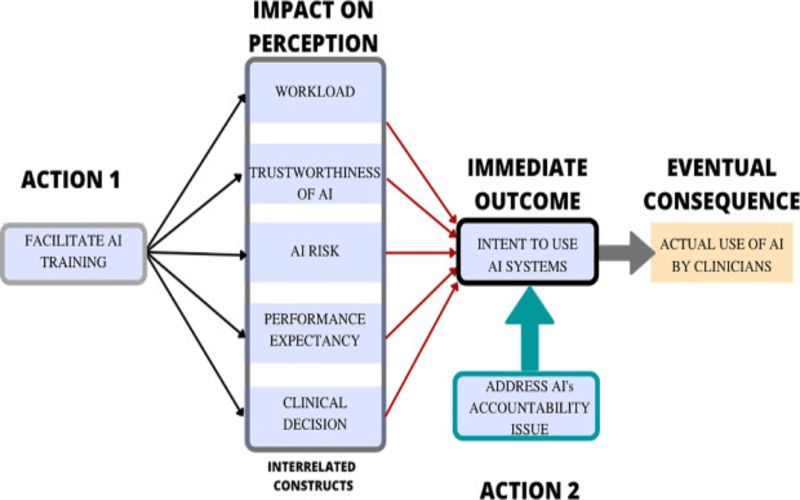

Responsible AI is the practice of designing, developing, and deploying AI with good intentions so that employees and organizations can affect customers and society fairly, allowing businesses to build trust and scale AI with confidence. It refers to AI systems that are transparent, unbiased, accountable, and compliant with ethical guidelines. As AI systems become more capable and more pervasive, it is critical to ensure that they are developed responsibly while adhering to security and ethical requirements. Health, transportation, network management, and security are safety-critical AI applications where system failure can have a severe impact. Large companies are aware that responsible AI (RAI) is vital for mitigating technology risks. Yet according to an MIT Sloan/BCG survey of 1,093 respondents, 54% of businesses lacked responsible AI expertise and talent.

Why Is Responsible AI Important?

Responsible AI is a still-emerging area of AI governance. The word "responsible" is an umbrella term that covers both ethics and democratization. Frequently, the data sets used to train machine learning (ML) models introduce bias into AI. This bias is caused either by incomplete or faulty data or by the biases of those training the ML model. When an AI system is biased, it can end up harming people, for example by unfairly rejecting loan applications or, in healthcare, by incorrectly diagnosing a patient.

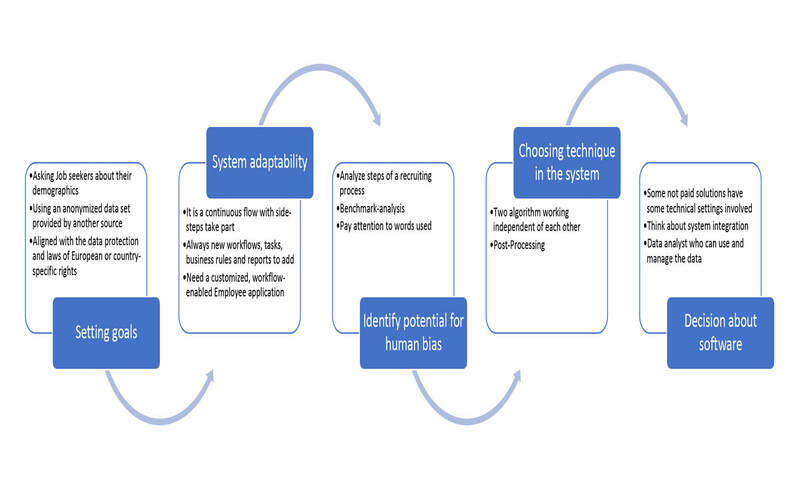

How Does Responsible AI Work?

Responsible AI combines technical methods, ethical principles, and governance structures to guide the creation and deployment of AI systems that demonstrate accountability, fairness, and alignment with human values. Putting it into practice involves several essential components. Building responsible AI depends heavily on careful data collection and processing. Large, representative datasets that are free of bias and unfair patterns are especially important. Data scientists and AI experts carefully curate and validate data to uphold equity and to reduce the risk that AI algorithms amplify unfairness.
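One way to make the idea of a "representative" dataset concrete is to compare each group's share of the data against a reference share. The sketch below is only an illustration under stated assumptions: the function name `representation_gaps`, the `region` attribute, the reference shares, and the 5% tolerance are all hypothetical and not taken from any particular framework.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.05):
    """Return groups whose share of `records` falls short of the
    reference share by more than `tolerance` (hypothetical helper)."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        # Only under-representation is flagged in this sketch.
        if expected - observed > tolerance:
            gaps[group] = {"observed": round(observed, 3), "expected": expected}
    return gaps


# Illustrative data: 100 records with a made-up "region" attribute.
sample = (
    [{"region": "north"}] * 70
    + [{"region": "south"}] * 28
    + [{"region": "east"}] * 2
)
# Assumed population shares, chosen only for the example.
reference = {"north": 0.5, "south": 0.3, "east": 0.2}

print(representation_gaps(sample, "region", reference))
```

A check like this only shows where a dataset is thin. Deciding which attributes and reference shares matter remains a judgment call for the people curating the data.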

1. Reduced Unintended Bias

Build responsibility into your AI to ensure that the algorithms and underlying data are as unbiased and representative as possible. Responsible AI minimizes bias in decision-making processes and establishes trust in AI systems. Minimizing bias in AI systems can, for example, support a fair and impartial healthcare system and reduce bias in AI-based business services.
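Bias in decisions can be measured in several ways. One simple and commonly used metric is the demographic parity difference: the gap between the highest and lowest rate of positive decisions across groups. The following is a minimal sketch, assuming binary decisions and a single group label per decision; the function names and the sample data are illustrative only.

```python
def selection_rates(decisions, groups):
    """Share of positive decisions (1) per group."""
    totals, positives = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if decision else 0)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest selection rate.
    0.0 means every group receives positive decisions at the same rate."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up example: 1 = approved, 0 = rejected, for two groups "a" and "b".
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(selection_rates(decisions, groups))
print(demographic_parity_difference(decisions, groups))
```

A large gap does not prove that a system is unfair, and a small gap does not prove that it is fair. The metric is a starting point for investigation rather than a verdict.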

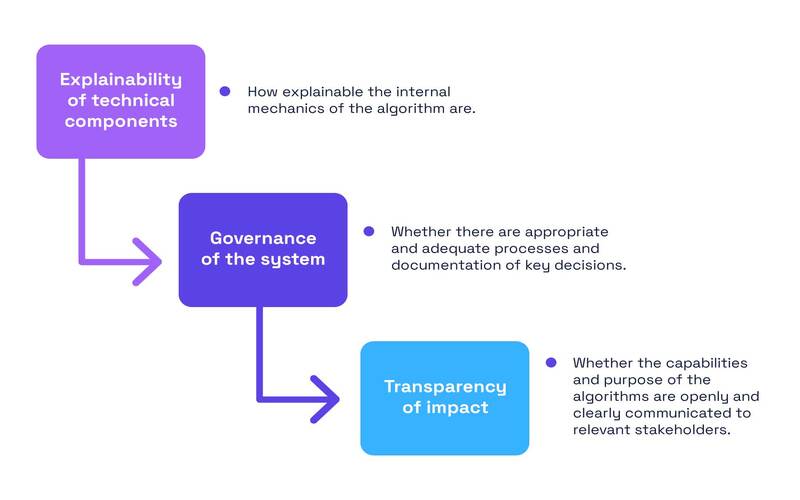

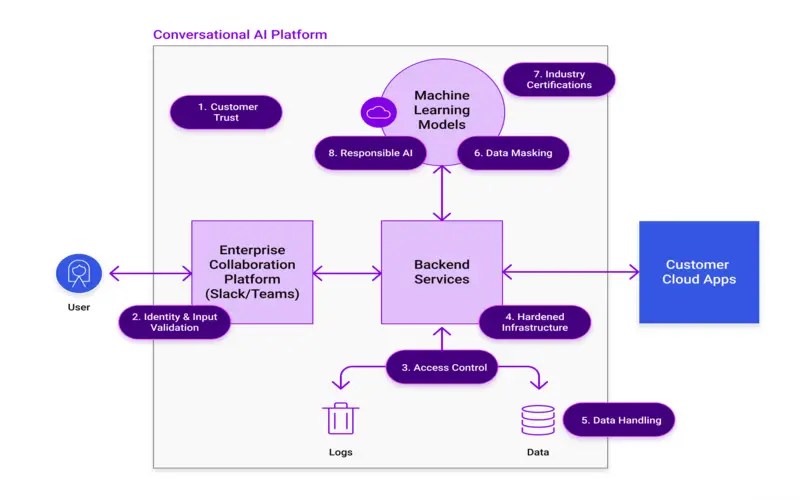

2. Ensure AI Transparency

To establish trust among employees and customers, develop explainable AI that is transparent across processes and functions. Responsible AI produces transparent AI applications that build trust in the AI system. Transparent AI systems reduce the risk of errors and misuse. Improved transparency makes AI systems easier to examine, wins stakeholders' trust, and leads to accountable AI systems.

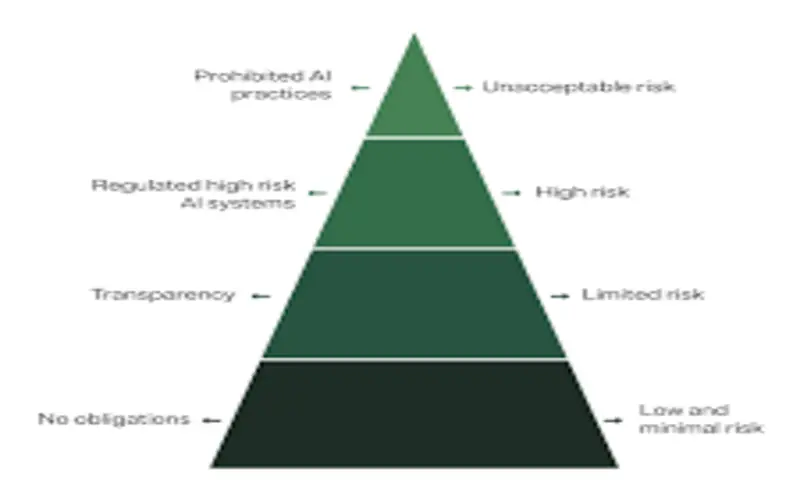

3. Ensures Compliance

Responsible AI promotes privacy and security, which can help organizations stay within the boundaries of the law when it comes to data collection, storage, and use. With politicians, human rights organizations, and tech leaders calling for more specific AI regulations, more rules are likely to come. In 2022, the EU put forward a bill that would give private citizens and businesses the right to sue for financial damages if they were harmed by an AI system, holding developers legally accountable for their AI models.

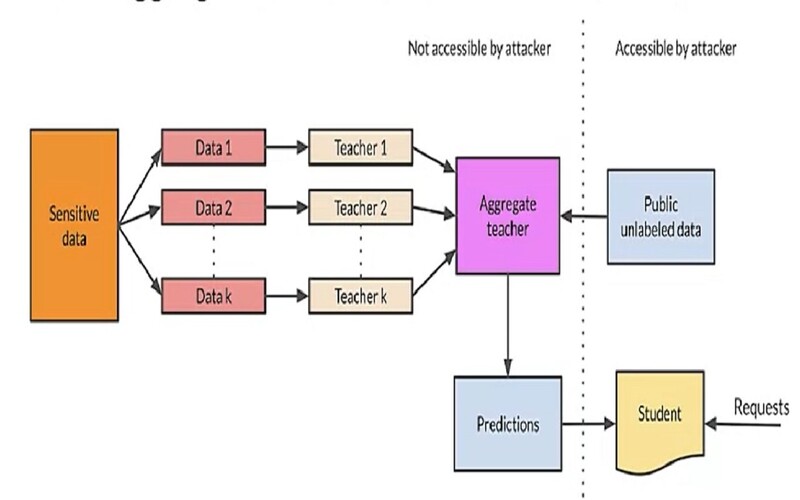

4. Protect The Privacy And Security Of Data

Take a privacy- and security-first approach to ensure that personal or sensitive data is never used unethically. Secure AI applications protect data privacy, produce reliable and harmless output, and are protected against cyber-attacks. Responsible AI principles have been developed by tech giants such as Microsoft and Google, which are at the forefront of building AI systems. Responsible AI ensures that AI technologies are not damaging to individuals or society. To protect the security of AI technologies, leaders, researchers, organizations, and legal authorities should continuously revise responsible AI guidelines.

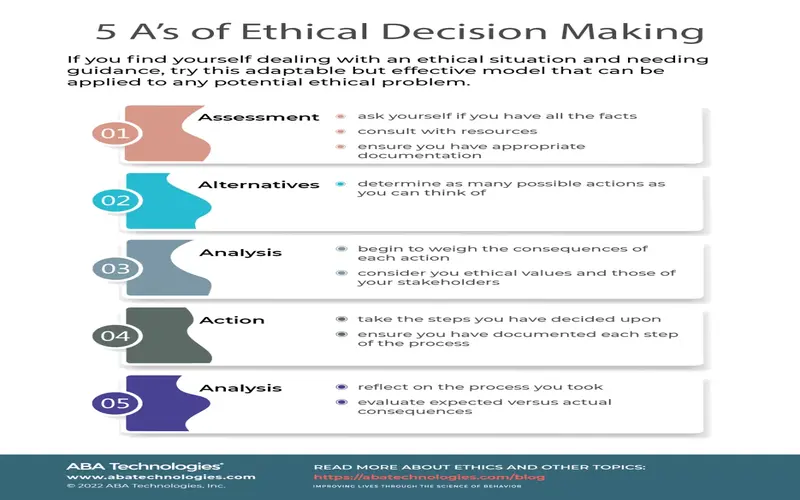

5. Ethical Decision-making

Decision-making systems are deliberately designed and programmed to make decisions that consistently conform to ethical principles. AI systems can make decisions that are consistent with community values by incorporating principles such as fairness, transparency, privacy, and accountability.

6. Adoption And Trust

Adopting responsible AI practices allows organizations to cultivate trust among users, customers, and the general public. Higher levels of trust lead to greater acceptance and use of AI technologies, which helps realize their full potential and increases their benefits.

7. Better Security And Data Protection

Responsible AI prioritizes security and data protection by combining several safeguards to prevent unauthorized access to, or misuse of, personal data. The fundamental objective is to ensure that AI systems are designed with a strong emphasis on privacy, restricting the collection and storage of data to what is essential for the intended purpose while safeguarding the privacy of individuals.
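Restricting data to what is essential is often called data minimization. The sketch below shows one possible way to apply it before a record enters an AI pipeline: keep only an allowlist of fields and replace the direct identifier with a keyed hash. The field names, the `ALLOWED_FIELDS` allowlist, and the helper functions are assumptions made for illustration; real systems would also need key management and a legal review of what counts as essential.

```python
import hashlib
import hmac

# Hypothetical allowlist: only the fields the model is assumed to need.
ALLOWED_FIELDS = {"age_band", "region", "account_type"}


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes, id_field: str = "email") -> dict:
    """Keep only allowlisted fields and swap the identifier for a pseudonym."""
    minimized = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    if id_field in record:
        minimized["subject_id"] = pseudonymize(record[id_field], secret_key)
    return minimized


# Made-up record and key, for demonstration only.
raw = {
    "email": "user@example.com",
    "full_name": "Example User",
    "age_band": "30-39",
    "region": "north",
    "account_type": "basic",
}
key = b"demo-key-do-not-use-in-production"

print(minimize_record(raw, key))
```

The keyed hash lets records belonging to the same person be linked without storing the email address itself, provided the key is kept separate from the data.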

8. Environmental And Social Impact

Responsible AI includes the conscientious evaluation of social and environmental consequences throughout the whole life cycle of AI systems, including their development and deployment phases. The fundamental objective is to ensure that AI technologies have a positive impact on the community, effectively tackle societal challenges, and reduce adverse environmental effects.

9. Mitigation Of Risks

Reducing risks is a critical aspect of responsible AI, as it involves actively identifying and managing the potential risks that arise from the use of AI technologies. The evaluation considers possible unintended effects and negative impacts, such as job displacement, economic inequality, and security vulnerabilities. Responsible AI aims to ensure the long-term welfare of the community by recognizing and reducing these risks.

10. Eliminating Bias

Developing ethical AI can mitigate organizational bias among employees and minimize the issues associated with it by ensuring that a sense of equality and awareness is built into AI technologies.