Wearable devices have taken on a widening range of uses in the past few years. From tracking bodily parameters to predicting illness before symptoms surface, they have expanded their role in helping people with day-to-day life.

A team of researchers from the Computer Science and Artificial Intelligence Laboratory (CSAIL) at the Massachusetts Institute of Technology (MIT), with the help of MIT’s Institute for Medical Engineering and Sciences, has developed a new Artificial Intelligence-enabled wearable device that can predict whether an ongoing conversation is happy, sad or neutral. The device is intended to help people who find it difficult to navigate conversations.

The research team said that using Artificial Intelligence to analyze a person's tone and detect the mood of a conversation could be a boon for people who have anxiety disorders, autism spectrum disorders or Asperger’s.

The algorithm that the researchers developed identifies human emotions from cues such as the tone of speech to determine whether an ongoing conversation is sad, happy or neutral.

In a news release published on 1 February 2017, the researchers explained in detail how the system they are working on functions. The algorithm can be loaded onto any smartwatch or wearable device. The technology was tested on volunteers wearing a Samsung Simband, a device that accurately captures bodily parameters such as body temperature, heart rate, blood pressure and even limb movement. The device also captured audio data and text transcriptions, which were used to analyze the speaker’s timbre, pitch, vocabulary and energy.

As part of the research, 31 conversations, each a few minutes long, were recorded, and two algorithms were tested on them. The first algorithm was designed to determine the overall nature of a conversation, for example, whether it was happy or sad. The system analyzed audio signals, biological data and text transcriptions to classify a conversation with up to around 83 percent accuracy.

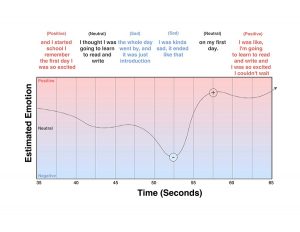

The second algorithm was aimed at analyzing each five-second clip of a conversation and categorizing it as positive, negative or neutral. The algorithm linked long pauses and monotonous tones with sadness, whereas varied and energetic tones were associated with happier conversations. The accuracy of the second algorithm was about 18 percent above chance.
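To make the idea of scoring five-second clips concrete, here is a minimal sketch in Python. It is not the researchers' model: it is a toy rule that only mirrors the cues mentioned above, treating a clip with many pauses and little energy as negative and an energetic clip with few pauses as positive. The function names, the thresholds and the synthetic signal are all assumptions made for illustration, and pitch (the "monotonous tone" cue) is not modeled at all.

```python
import math
from typing import List

def split_into_windows(samples: List[float], sample_rate: int,
                       window_seconds: float = 5.0) -> List[List[float]]:
    """Cut a signal into consecutive, non-overlapping windows."""
    size = int(sample_rate * window_seconds)
    return [samples[i:i + size] for i in range(0, len(samples), size)]

def rms_energy(window: List[float]) -> float:
    """Root-mean-square amplitude, a rough proxy for vocal energy."""
    if not window:
        return 0.0
    return math.sqrt(sum(x * x for x in window) / len(window))

def pause_fraction(window: List[float], silence_threshold: float = 0.01) -> float:
    """Share of samples that are quiet enough to count as a pause."""
    if not window:
        return 0.0
    quiet = sum(1 for x in window if abs(x) < silence_threshold)
    return quiet / len(window)

def label_window(window: List[float], energy_cutoff: float = 0.1,
                 pause_cutoff: float = 0.5) -> str:
    """Toy rule with hypothetical cutoffs, not a trained classifier."""
    energy = rms_energy(window)
    pauses = pause_fraction(window)
    if pauses > pause_cutoff and energy < energy_cutoff:
        return "negative"   # mostly silent, low energy
    if pauses <= pause_cutoff and energy >= energy_cutoff:
        return "positive"   # energetic, few pauses
    return "neutral"        # mixed evidence

# Synthetic 15-second signal at a made-up 100 Hz sample rate.
SAMPLE_RATE = 100
loud = [0.5, -0.5] * 250                    # 5 s of energetic signal
silent = [0.0] * 500                        # 5 s of silence
mixed = [0.0] * 300 + [0.5, -0.5] * 100     # 3 s pause, then 2 s of signal

windows = split_into_windows(loud + silent + mixed, SAMPLE_RATE)
labels = [label_window(w) for w in windows]
print(labels)  # ['positive', 'negative', 'neutral']
```

A real system would learn such boundaries from labeled data and would combine audio features with the physiological and text signals described earlier, rather than rely on hand-picked cutoffs.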

The researchers believe that the technology will perform better when tested in more complex situations where several people wearing smartwatches take part in a conversation, creating more data for the Artificial Intelligence system to analyze. Keeping the user’s personal information confidential has been a serious concern for the developers, which is why they chose to run the algorithm locally on the user’s wearable device.

In most earlier studies, participants were shown happy or sad videos or were asked to act out different emotional states. In this study, the scientists instead tried to bring out the speakers' genuine feelings by asking them to tell a happy or sad story from their own lives.

The algorithm is still at an early stage of development, and the end product is not yet reliable enough for general use. Alhanai, one of the co-authors of the research, believes that in the near future the team will be able to collect data on a larger scale by using commercial devices such as the Apple Watch, which would make the technology available to the rest of the world.

The study was accepted for presentation at the Association for the Advancement of Artificial Intelligence (AAAI) conference in San Francisco.