What is Cloud Computing?

A Detailed Description of Cloud Computing

1: Cloud Computing – what lies beneath?

The Oracle says that it is as inexorable as the day after tomorrow. But clear away the jargon, and you will find a business model that can’t seem to tear itself away from the blueprint. What is stopping it?

1.1.: Introduction

Suppose you’re a tech-savvy business owner. You’ve got dozens of employees milling about in your office and it’s time to bring them up to the Internet age. Shouldn’t be too difficult, right? Everybody is doing it and you’ve got a thriving cash-flow to boot.

But buying computers is the least of your problems. Having wired them all up, you now have to purchase software suites and license them accordingly. If your IT needs are resource-intensive (say, bandwidth- or data storage-wise), or if you are planning to expand your business, a costly upgrade is in order. Where are you going to put the next server rack?

Meanwhile, when you’re off work, you pull the latest gadget out of your pocket and surf the web. While you may have paid a small fortune for this technological marvel, it’s a bit of a shirker. With little fanfare, it delegates most of its computational needs to a “cloud”, whatever and wherever it is. The grunt work of crunching numbers, storing photos and interacting with the wider web is all done elsewhere. Your phone simply displays the results and takes the credit.

These two seemingly unrelated developments are really two sides of the same coin and nobody has figured out just how big it is. With the advent of near-global internet coverage, companies are now scrambling to provide cloud services. It might be done through a standard web browser or similar straightforward local client software. Every command is run remotely by large server farms which handle a huge number of customers simultaneously. The provider doesn’t care whether yours is a one-person firm or an enormously popular website.

Chances are you’ve already encountered cloud computing, albeit in a personal setting. As far as businesses are concerned, it remains an ongoing development. Despite its huge potential (and admittedly widespread application), cloud computing has yet to precipitate a stampede from data centers. Most enterprises restrict it to non-critical or low-security applications due to data protection concerns. Advocates of open software standards dismiss the hype as a re-branding campaign for existing proprietary systems. Take-up rates are growing steadily, but so are the controversies surrounding it.

Underneath all the hoopla is the latest step in a millennia-old tradition of technological outsourcing. Like indoor heating and electricity in the last century, IT resources are shifting slowly but steadily away from on-premises production. Users can now access anything from remote storage space to developers’ application platforms on demand through the Internet. Physical infrastructure has been spirited away.

For the majority of end users, this isn’t really news. Web applications have been around for years, as have utility computing (metered billing for computing resources) and grid/distributed computing. It is developers who stand to gain the most: cloud architecture is innately scalable, reliable and built around multi-tenancy.

1.2.: Overview

Where do we begin amidst all these clouded definitions? The one characteristic we can all agree on is its rootedness in the Internet. Resources, software and information are shared amongst terminal devices on demand.

While this sounds less than exciting (what else is the Internet good for?), we might want to compare this shift with the early-80s emphasis on mainframes. The client-server model has taken over both operation and control of technological infrastructure from the user. Broadly put, cloud computing (CC) is a new delivery model for IT services, and a new consumption paradigm for its users.

The average business end user will most likely first encounter CC via “online applications/web services”, such as those from Salesforce, Amazon and Google. Both software and data are stored on servers and are therefore equally accessible from any web browser. Providers now generally commit to quality-of-service standards in service level agreements. But if you are an IT professional, say a system admin or a developer, the possibilities are virtually endless: read on.

1.2.1.: Characteristics

Let’s clear a few things up first. For example, there is no such thing as a single “Cloud”. As with most other things on the Internet, monopolies are hard to come by. Various operators are scrambling to offer their services, and in the absence of open standards, interoperability remains a distant dream. There are many different forms of CC, including infrastructure-as-a-service (IaaS), platform-as-a-service (PaaS) and software-as-a-service (SaaS), which cater to different aspects of computing and come with different benefits and risks. We’ll take a look at each in turn in the next section.

For the moment it is helpful to divide a CC system into two parts: the front end and the back end, wired together through the Internet. Users access the former, and the latter sits in the cloud. A crude analogy might be a minuscule shop front and an impossibly large warehouse, joined by a freight line running at lightning speed.

Shops like convenience stores are quite standardized, and you don’t need forklifts or pallets. Similarly, some services like web-based email can be comfortably run on standard web browsers like Firefox. But if you’re selling specialized or bulky goods, you might want to build a loading bay at the back of the shop: that is the role of specialized client software.

Inventory is a tricky business: it’s never the right size. Expansion is costly, but so is wasted space, and if you over-stack things it might all come crashing down. The same happens to servers (remote computers that cater to your needs): they are hugely expensive and seldom run at full capacity, yet when they are running peak loads, they tend to crash.

So why don’t we pool some money together and rent a really big warehouse? The logistics involved might be too cumbersome in the physical world, but not in the virtual one. A server can be engineered into a kind of split personality, thinking it’s two or three or thousands of servers at once. Virtualization, as the technique is called, can obliterate the whole inventory issue if it is implemented on a big scale. If you’re sharing space with thousands of users at the same time, it doesn’t matter if you’ve doubled your needs in the span of a day: overall server load has only increased by a negligible amount.

(This illustrates the beauty of the law of large numbers: even if we deal with random events, such as your demand for IT resources, as long as we’ve got enough of them pooled together, they will average out to a predictable trend. The possibility of (virtually) unlimited computational expansion is more monumental than it seems, because scalability, as we will see later, can lead to completely new ways of running IT operations.)
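The pooling argument can be checked with a short simulation. This is a minimal sketch under an assumed, hypothetical demand model (each tenant needs between 0 and 2 units of capacity per period, uniformly at random and independently of the others); it measures how large the swings in total demand are relative to the average as more tenants share the same servers.

```python
import random
import statistics


def pooled_variability(num_tenants: int, trials: int = 500, seed: int = 0) -> float:
    """Coefficient of variation (std dev / mean) of total demand for pooled tenants.

    Hypothetical model: each tenant's demand per period is uniform on [0, 2],
    so every tenant needs 1 unit on average.
    """
    rng = random.Random(seed)
    totals = [
        sum(rng.uniform(0.0, 2.0) for _ in range(num_tenants))
        for _ in range(trials)
    ]
    return statistics.pstdev(totals) / statistics.mean(totals)


for n in (1, 10, 100, 1000):
    print(f"{n:>5} tenants: relative swing in total demand = {pooled_variability(n):.3f}")
```

For independent tenants the relative swing should shrink roughly in proportion to 1/√n, which is why a large provider barely notices a single customer doubling its needs.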

With our new arrangement, we have the two ends and perhaps an extra coordinating server located off-premises. You, the customer, now own nothing but your computer and an internet connection. All you’re shelling out for IT resources is a pay-as-you-go bill, rather like those for utilities. Unburdened by all the engineering challenges, and awash with unlimited capacity, you are going to have a blast finding new ways to use them up.

All this, of course, rests on the assumption that you are an SME owner saddled with little bureaucracy and few legacy servers. If you aren’t one, this transition might prove a bit more difficult.

1.2.2.: Key features

- Flexibility: CC lets users re-provision resources speedily. Software upgrades are now carried out centrally and remotely, to the extent that even feature roll-outs might go unnoticed.

- Customizability: everything from a standard word processor to a tailor-made sales management suite is available.

- Metered cost: Capital expenditure, the biggest barrier to IT expansion, is now converted into an operational one. Centralization lowers real estate, software licensing and maintenance costs alike. As for the computers you actually own, they no longer require the speediest processors, an extra hard drive and the IT personnel to go with them; you just need what it takes to browse the web.

- Independence: With web-based access, it doesn’t matter which computer you are using, local or abroad (even mobile devices might do the job): both the software and the data will be there. With the server racks gone, relocation also becomes a lot cheaper and more flexible.

- Multi-tenancy: With pooled users come higher utilization rates and peak-load capacity.

- Distributed/parallel computing with dynamic scalability: CC is often run on an enormous group of interconnected computers called a grid. Additionally, programs specifically written for this infrastructure can handle some computationally intensive demands in parallel (many parts at once) instead of serially (one at a time), alleviating a common bottleneck. Furthermore, the cloud can monitor and provision resources in near real time.

- Reliability and redundancy: Like RAID (Redundant Array of Independent Disks) technology, clouds are designed to survive individual server crashes. However, this doesn’t mean that CC is adamantine – far from it.

- Security: Where customers cannot afford or be bothered to implement high-level security measures, CC providers can and will. Note, though, that the complexity of the problem increases as well, so customers used to sorting out their secrets in-house might think twice before handing them over to a black-box operation.

1.2.3.: What CC is (and isn’t)

1.2.3.: 1) Software-as-a-Service

As the name suggests, SaaS is a targeted form of CC where a single application is run entirely in the cloud (i.e. the service provider’s servers) and delivered through simple clients like a browser. Salesforce is by far the best-known of them all, but human resources applications and office suites are also cropping up by the dozen (Workday, Zoho Office). The customer doesn’t need to invest in anything and the provider maintains just one application for its many tenants. SaaS is primarily targeted at the end user.

1.2.3.: 2) Attached web services

Attached web services come in two forms. The first one, closely related to SaaS, offers additional functionality rather than full-blown applications. Application programming interfaces (APIs) for services such as geo-information, payroll processing and transaction processing are made available to the developer, who can then attach them to a particular on-premises application. Microsoft’s Exchange Hosted Services, for instance, provides spam filtering and archiving to an Exchange server owned by the customer. Alternatively, providers like Xignite can offer discrete business services. Managed security services, such as those offered by IBM, Verizon and SecureWorks, have been around for decades.

A good analogy is Apple’s iTunes. While it functions perfectly well as a stand-alone application, playing and organizing local music files, the attached iTunes Store makes online content purchases possible as well.

1.2.3.: 3) Platform-as-a-service

PaaS could be seen as the logical conclusion of CC development. A dedicated developer, working for either a CC provider or a customer, can build applications directly on cloud platforms, dispensing with building infrastructure and a custom foundation altogether. While there are still design constraints compared with a start-from-scratch approach, the final tailor-made solution can still enjoy the pre-integration, reliability and scalability of CC.

1.2.3.: 4) What CC isn’t

While the delineation above might serve as a rough guide, the intricacy of CC defies strict categorizations.

Utility computing – As previously mentioned, utility computing is a feature (or rather, a subset) of the CC model, and it’s been around for decades. IBM, Sun and Amazon all offer resources on demand. They have grown from a handy supplement to a replacement for data centers. LiquidQ, for instance, tried to go beyond storage offers, letting customers pool memory, I/O and processing capacity over the network as well. Unfortunately, since it couldn’t identify its core market, funds dried up after a seven-year run.

Virtualization – a prerequisite of CC. The creation of “logical/virtual” servers within physical ones (essentially the computational equivalent of multiple personality) is now commonplace. But in order to make full use of virtualization, these servers must be able to expand or shrink capacity as the user sees fit. To do this, they must have enough capacity in the first place, as well as the infrastructure to allocate it automatically. This brings us to:

Autonomic computing – essentially a self-managing system. A properly configured CC fulfills some aspects of autonomy, such as dynamic scalability (near real-time capacity adjustment without needing peak load engineering). However, this set-up is also possible in self-contained systems.
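Dynamic scalability boils down to a monitor-and-adjust loop. The sketch below is a minimal illustration under assumed, hypothetical rules (a 70% target utilization, 100 requests per second per instance, between 1 and 100 instances); real providers apply their own, more elaborate policies.

```python
import math


def desired_instances(current_load: float, capacity_per_instance: float,
                      target_utilization: float = 0.7,
                      min_instances: int = 1, max_instances: int = 100) -> int:
    """Return how many instances keep average utilization near the target.

    Hypothetical policy: provision enough instances that each one runs at
    roughly `target_utilization` of its capacity, within fixed bounds.
    """
    if capacity_per_instance <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("capacity must be positive and target in (0, 1]")
    needed = math.ceil(current_load / (capacity_per_instance * target_utilization))
    return max(min_instances, min(max_instances, needed))


def autoscale(load_samples, capacity_per_instance):
    """One monitor-and-adjust decision per observed load sample."""
    return [desired_instances(load, capacity_per_instance) for load in load_samples]


# Hypothetical load (requests per second) over six monitoring intervals,
# with each instance assumed to handle 100 requests per second.
print(autoscale([50, 120, 900, 2000, 400, 60], capacity_per_instance=100))
# [1, 2, 13, 29, 6, 1]
```

Nothing is engineered for the 2,000-request peak in advance: capacity follows the load up and back down, which is what distinguishes dynamic scalability from peak-load engineering.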

Grid/parallel/distributed computing – Parallel computing is an attempt to tackle computation by splitting the problem into smaller tasks which can be handled separately and simultaneously. This contrasts with traditional serial computing, where you “begin at the beginning” and work through the steps sequentially. Different processors share a pool of memory in the process. In distributed computing, a similar phenomenon occurs, but now each processor gets its own memory and becomes a standalone computer. Only the problems and the results pass between the loosely coupled computers. If this scenario is extended on a vast scale, we have a grid: a virtual supercomputer made of a cluster of networked computers.

(While all CCs make use of multiple networked computers, they are not necessarily designed for parallel computing. Only those designed with scientific research or gargantuan number-crunching tasks in mind are likely to implement this infrastructure.)
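The split, compute and combine pattern described above can be sketched in Python. The task (a sum of squares) and the function names are illustrative assumptions. The sketch uses `ThreadPoolExecutor` so that it runs anywhere; for CPU-bound Python code one would typically swap in `ProcessPoolExecutor` from the same module, which offers the same `map` interface, to get true parallelism across processors.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """One smaller task: each worker handles its own slice independently."""
    return sum(x * x for x in chunk)


def split(data, parts):
    """Divide the problem into at most `parts` roughly equal chunks."""
    if not data:
        return []
    size = -(-len(data) // parts)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def serial(data):
    """Begin at the beginning and work through every element in turn."""
    return sum_of_squares(data)


def parallel(data, workers=4):
    """Hand one chunk to each worker at the same time, then combine the results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, split(data, workers)))


numbers = list(range(1_000_000))
assert serial(numbers) == parallel(numbers)  # same answer, different route
print(parallel(numbers))
```

Only the chunks and the partial sums move between the workers, which mirrors how loosely coupled computers in a grid exchange problems and results rather than shared memory.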

Peer to peer (P2P) – While the mental image strikes a chord, P2P is a rather different matter. It is a distributed and autonomic network architecture where every user is in a position to supply as well as consume resources such as data and bandwidth. P2P differs from the established client-server model, which is the norm in CC.

1.2.4.: Example: Cloud Storage

Before delving into the technicalities of CC, let us take a look at an example. The simplest and most pervasive form of CC in the last few years has been cloud storage.

To a computerized firm, storage is as essential as electricity. A small shop might have a database for customers that could use frequent off-premises backup; a digital printing shop could easily fill its system with proofs.

In cloud storage, data is siphoned off by the user into multiple servers. Uploading may be done through a simple web client (SaaS) or an optional subscription service that comes with security software suites (attached services). If the firm is large enough, IT might even opt to create a backup server from scratch on the cloud platform.

The operator hosts a large number of interconnected servers, which in turn run many virtual servers for its many tenants. There are two things to co-ordinate: redundancy and scalability.

- Most cloud storage firms make at least two copies of every document they receive, so that a crash in part of their infrastructure does not cause downtime (a period when users are denied access to their resources) or, worse, catastrophic data loss. This process is called redundancy.

- Demand for storage can fluctuate wildly: the aforementioned printer might have hundreds of gigabytes of graphical files one week and a few megabytes of text the next. The cloud allocates (and bills for) space accordingly, distributing the load across different servers.
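Redundancy can be illustrated with a toy cluster. This is a minimal sketch with a hypothetical `ReplicatedStore` class that keeps everything in memory; it writes each object to two randomly chosen servers and shows that a read still succeeds after one of them crashes. Real providers also re-replicate lost copies and spread them across racks or sites, which the sketch leaves out.

```python
import random


class ReplicatedStore:
    """Toy storage cluster that writes every object to several distinct servers."""

    def __init__(self, num_servers: int, replicas: int = 2, seed: int = 0):
        if not 1 <= replicas <= num_servers:
            raise ValueError("need 1 <= replicas <= num_servers")
        self.servers = [{} for _ in range(num_servers)]   # one dict per server
        self.alive = [True] * num_servers
        self.replicas = replicas
        self.rng = random.Random(seed)

    def put(self, key: str, data: bytes) -> None:
        """Redundancy: copy the object onto `replicas` different live servers."""
        live = [i for i, ok in enumerate(self.alive) if ok]
        for i in self.rng.sample(live, self.replicas):
            self.servers[i][key] = data

    def crash(self, server: int) -> None:
        self.alive[server] = False

    def get(self, key: str) -> bytes:
        """Serve the object from any surviving replica."""
        for ok, server in zip(self.alive, self.servers):
            if ok and key in server:
                return server[key]
        raise KeyError(f"{key!r} lost: every replica was on a crashed server")


store = ReplicatedStore(num_servers=5, replicas=2)
store.put("proof-001.pdf", b"%PDF-1.4")
holders = [i for i, s in enumerate(store.servers) if "proof-001.pdf" in s]
store.crash(holders[0])               # one copy goes down...
print(store.get("proof-001.pdf"))     # ...but the other still answers
```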

Amazon has implemented the above in its S3 (Simple Storage Service) offering since 2006. It charges users USD 0.15/GB/month, plus bandwidth and per-request fees. Wholly scalable and with 99.9% monthly uptime guaranteed (which translates to roughly 43 minutes of downtime in a 30-day month), it was handling more than 100 billion objects as of March 2010.
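The uptime and pricing figures can be turned into quick arithmetic. This sketch assumes a 30-day month and uses the USD 0.15/GB/month storage price quoted for S3; the 500 GB volume is a hypothetical example, and bandwidth and per-request fees are deliberately left out.

```python
def allowed_downtime_minutes(uptime_pct: float, days_in_month: int = 30) -> float:
    """Minutes of downtime per month still consistent with an uptime guarantee."""
    return (1 - uptime_pct / 100) * days_in_month * 24 * 60


def monthly_storage_charge(gigabytes: float, usd_per_gb_month: float = 0.15) -> float:
    """Storage component of the bill only; bandwidth and request fees are extra."""
    return gigabytes * usd_per_gb_month


print(f"{allowed_downtime_minutes(99.9):.1f} minutes")   # 43.2 minutes
print(f"USD {monthly_storage_charge(500):.2f}")           # USD 75.00
```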

1.3.: Economics

As has been repeated over and over again, CC users avoid hefty capital expenditure. Consumption is instead billed either by utility (the amount of resources consumed) or as a subscription (in effect, by the length of time). Also widely advertised is the assumption that shared infrastructure and standardized applications lead to lower operating expenses.

1.3.1.: Costs

That last point does not necessarily translate into savings, however. Depending on the circumstances, the cloud model might not make fiscal sense. For instance, an organization with limited IT demands piggy-backing on existing infrastructure is fine on its own. Other firms with flexible capital budgets and stringent operating ones may decide to go retro after all. And since a data center that has already been endlessly fine-tuned has to brace itself for a shock when migrating, the risks complicate any potential user’s cost-benefit analysis.

Beware of hidden costs: some cloud hosts are known to charge extra for, or auction off the following:

- High memory and/or processor usage;

- Bi-directional data transfer (the amount of data moved in and out);

- Bandwidth (the speed at which the data is transferred);

- Data storage (the size of data stored remotely);

- PUT and GET requests (by count);

- Input/output (I/O) requests;

- Static IP addresses;

- Load balancing.

When all costs are factored in, some in-house data centers may well cost less than their cloud equivalents, especially if they are running proprietary platforms to serve fixed demand with no need for scalability. Commercial software vendors may also charge significantly more for cloud deployment than for on-premises installation.
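Why fixed demand favours owning while spiky demand favours renting can be shown with a back-of-the-envelope comparison. All figures here are hypothetical: a unit of capacity is assumed to cost 1.0 per month in-house and 1.5 per month in the cloud once the extra fees are included. In-house capacity must be sized for the peak and paid for every month; the cloud bills only what is used.

```python
def in_house_cost(monthly_demand, unit_cost_owned):
    """Owned capacity is sized for the peak month and paid for every month."""
    return max(monthly_demand) * unit_cost_owned * len(monthly_demand)


def cloud_cost(monthly_demand, unit_cost_cloud):
    """Pay-as-you-go: each month is billed only for the capacity actually used."""
    return sum(monthly_demand) * unit_cost_cloud


flat = [100] * 12             # steady demand all year
spiky = [20] * 11 + [200]     # one big campaign month

for name, demand in (("flat", flat), ("spiky", spiky)):
    print(name, in_house_cost(demand, 1.0), cloud_cost(demand, 1.5))
# flat 1200.0 1800.0
# spiky 2400.0 630.0
```

With steady demand the cheaper owned unit wins; with one large spike, paying a premium only when needed beats buying peak capacity for the whole year. The model ignores migration risk and hidden fees, so it only frames the question rather than answering it.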

If your firm (or, more likely, your government) has an incentive to go green, CC might become an even bigger headache. Depending on the location of the physical server farms, you might save on cooling and carbon footprint but fork out for renewable energy. (This, incidentally, is why Nordic countries are desperately trying to lure CC providers with their Arctic climate and geothermal vents.)

There is always a nagging concern that cloud providers could simply fold. In a number of cases they have, due to financial or legal reasons. The conscientious ones might grant a buffer period for customers to salvage their data, but if they don’t, you might want to back up your backup.

1.3.2.: Business compatibility

Now suppose for a moment that money is no object. Your budget might be quite large relative to your needs or, more likely, you have a pressing priority to expand before the whole house of cards collapses: say, a short-term web-based marketing campaign that would surely paralyze your on-premises web server. Is CC the answer then?

Yes, if you aren’t trying to run a business. Though many aspects are pre-configured and pre-integrated, the complexities of enterprise software still preclude an IKEA-style, assemble-it-yourself approach. There is no relief from monitoring tasks: redundancy, reliability, performance and latency are all factors that are precariously balanced against one another.

The campaign server, for example, has to comply with security standards and be compatible with your pre-existing database software. Even if it resides in the cloud, it is still very much your server, and is thus subject to privacy laws with regard to customer information. Any data collected then needs to go through your tracking system.

Even if you are going with IaaS, you might be hard-pressed to find a cloud that suits your basic needs. A moderately sized firm with a global presence often requires load balancing across data centers, so that requests don’t stack up in one of them while others are underutilized. Many CC providers can do this, but only with a small delay. If you need an instantaneous (low-latency) response, you have to wire it up yourself. A similar issue crops up with clustered servers: their appetite for high-bandwidth communication amongst themselves is not always satisfied in CC environments.
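Load balancing across data centers can take many forms; one simple policy is to send each request to the least-utilized site. The sketch below assumes that policy, uses hypothetical data center names and capacities, and ignores network latency and the reporting delay mentioned above, which is exactly what makes the real problem harder.

```python
from dataclasses import dataclass


@dataclass
class DataCenter:
    name: str
    capacity: int          # requests it can serve concurrently
    active: int = 0        # requests currently in flight

    @property
    def utilization(self) -> float:
        return self.active / self.capacity


def route(request_id: str, datacenters: list) -> DataCenter:
    """Send the request to the data center with the lowest current utilization."""
    target = min(datacenters, key=lambda dc: dc.utilization)
    if target.active >= target.capacity:
        raise RuntimeError(f"all data centers saturated; cannot place {request_id}")
    target.active += 1
    return target


dcs = [DataCenter("eu-west", capacity=4), DataCenter("us-east", capacity=8)]
for n in range(6):
    route(f"req-{n}", dcs)
print([(dc.name, dc.active) for dc in dcs])  # [('eu-west', 2), ('us-east', 4)]
```

Requests end up split in proportion to capacity, so neither site stacks up while the other sits idle.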

Providers are now racing to offer these features as industry standards rise. The hottest tickets are currently load balancing, as described above, and auto-scaling. However, other necessities such as configuration and life-cycle management are often still missing.

In the long run, CC might indeed lighten the workload and go easy on your budget. But it will only come about after a comprehensive review of your needs (application, security, and uptime requirements), an examination of the CC models that suit you, and prolonged monitoring. There are many more barriers to migration, to which we’ll return later.

1.3.3.: A marketing hype?

We seem to have established CC as a whole that is more than the sum of its parts, and rather generic parts at that. Both the technology itself and its emergent phenomena are widely studied and applied. But this gradual, far-sighted shift in thinking has been heralded as the next big thing, a transformational inevitability as urgently ground-breaking as transistors and sliced bread.

There are legitimate grounds for skepticism. Y2K (and, more importantly, the hardware upgrade it demanded) was similarly “inevitable”, but many of us muddled through. There is no doubt that an orchestrated marketing campaign for CC is under way, but what the product actually entails is a subject of some contention. In particular, the market is dominated by two kinds of providers: makers of locked, proprietary systems (IBM, Oracle), and the internet feudal lords (Google, Amazon). The former provide what they have always provided (attached services, software and remote storage) in new bundles. The latter lease out the spare tools they happen to have lying around: server space, platforms and their global networks.

Of course, there are firms pumping real resources into CC and coming up with real innovations. But we have to bear in mind how easy it is to “change the wordings on some of our ads” for “everything we already do”, as Larry Ellison, Oracle’s CEO, so eloquently put it.

1.4.: History

As with any broad terminology, it helps to put CC in perspective by going through its history.

The organization of computation as a public utility goes back to the sixties, when the comparison with the electricity market was first made by Douglas Parkhill. Even though networked computing was still in its nascent form, his 1966 book, The Challenge of the Computer Utility, painted an accurate picture of CC economics. Elastic and seemingly infinite supply, on-the-spot consumption, and the differentiation of public and private usage were all envisioned before Page and Brin were even born.

As the deployment of mainframe computers gathered pace, telecommunications companies began offering dedicated point-to-point connections. This kind of network served well for the next thirty years, since mainframes were the device of choice for business computation and, besides, there was no such thing as a personal computer that weighed under a ton.

The explosion in networked computing that came in the nineties changed it all. Point-to-point circuits became infeasible because they were both costly to install and underutilized, just as a telephone network that required individual wiring to each number would be. Virtual Private Networks (VPNs), which combine a new client-side protocol with a clearing-house on the telecoms side, became an economical option. The cloud demarcates responsibility: each customer tends to his or her terminal at the spoke, while the cloud in the middle, wires, servers and all, is taken care of by the provider.

It was Amazon, the online retailing giant, that first nudged CC towards realization. After the dot-com bubble, it was left with a voluminous network in which most capacity was reserved for the occasional spike in demand. Cloud architecture made more rational use of these resources and, having implemented it for in-house use, the firm proceeded to sell it to customers through Amazon Web Services (AWS) in 2006. A spate of similar attempts by academic, commercial and open source rivals followed.

1.5.: Architecture

In this section, we will take a closer look at the cogs and wheels that run a CC system.

First of all, we take a look at architecture. By this term we mean the structure of the software systems that deliver CC. In it, many different components, each excelling at one specific task, talk with one another through application programming interfaces (APIs). This approach breaks complex jobs into components that are more manageable and extensible than one big monolithic piece.
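The idea of components that only know each other through an interface can be sketched with a Python `Protocol`. Every name here (`Storage`, `InMemoryStorage`, `InvoiceService`) is hypothetical; the point is that the invoice component depends only on the storage interface, so an in-memory store could be swapped for a cloud-backed one without touching it.

```python
from typing import Protocol


class Storage(Protocol):
    """The interface (API) other components rely on; nothing else is visible."""
    def save(self, key: str, data: bytes) -> None: ...
    def load(self, key: str) -> bytes: ...


class InMemoryStorage:
    """One interchangeable component that excels at a single task: keeping bytes."""

    def __init__(self) -> None:
        self._items: dict = {}

    def save(self, key: str, data: bytes) -> None:
        self._items[key] = data

    def load(self, key: str) -> bytes:
        return self._items[key]


class InvoiceService:
    """A higher-level component that talks to storage only through the interface."""

    def __init__(self, storage: Storage) -> None:
        self.storage = storage

    def record(self, invoice_id: str, amount_cents: int) -> None:
        self.storage.save(invoice_id, str(amount_cents).encode())

    def amount(self, invoice_id: str) -> int:
        return int(self.storage.load(invoice_id).decode())


service = InvoiceService(InMemoryStorage())
service.record("inv-1", 1999)
print(service.amount("inv-1"))  # 1999
```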

The architecture is divided into two big categories: the front end, which is exposed to the end user, and the back end, which is in the cloud. The front end includes the user’s computer and network as well as the applications needed to access the cloud (say, a browser), and the back end is a network of servers and storage space.

Let us look at CC from the developer’s point of view. We will begin with platforms, the barest and most flexible of arenas in which to build our solutions.

1.5.1.: What is a Platform?

What, then, is cloud computing to a developer: writing applications that run in a cloud, or using services provided from it? To properly understand the advent of cloud platforms, we need to see them in light of existing ones.

Usually, when a team writes an on-premises application, it is hardly built from scratch, despite what they like to say. There will be an operating system underlying the application’s execution and I/O interaction, and other computers might handle jobs such as storage. Without these basics, it is unlikely that anything would be worth building at all. A cloud platform is simply an extension of this logic.

But back to platforms as a whole: briefly, a platform is made up of the following components in a hierarchy:

- A foundation: this may be the base operating system, perhaps with standard libraries and local storage.

- Infrastructure services: a modern system delegates different basic tasks to different computers (this is called a distributed environment). Remote storage, integration and identity services are usually provided.

- Application services: applications are written for the end user, but their functions are sometimes accessible to new applications as well. Where available, they are part of the platform as a whole.

In CC, a similar assignment is made amongst platform components. But while the objectives are the same, they are often met by totally different means.

1.5.1.1.: Foundation

An operating system is an interface for applications: an application, armed with instructions from the user as interpreted by its programming, has to go through the operating system to get the hardware to pull its weight. Amazon’s Elastic Compute Cloud (EC2) is one service that provides an OS in the cloud. (Strictly speaking, it runs a virtual machine, but as far as the developer is concerned, it provides a Linux instance.) Because the service is so basic, there are very few limitations. The user can use whichever local support works best, mixing different architectures, or even create multiple Linux instances so that computationally intensive tasks can be distributed in parallel.

But EC2 is the exception that proves the rule. Among the majority of leading CC foundation providers, local support is confined to specific options. This is a trade-off designed to optimize the environment for the most popular kinds of applications. Because every user is using a standardized set of local support, such as the runtime, data storage and perhaps a customized programming language, the cloud can manage scalability better in the face of huge loads. Google, for instance, specializes in web applications written in Python, while Force.com, Salesforce’s platform, targets business-data applications written in its proprietary language, Apex. Microsoft is developing its own CC platform that will support its .NET Framework, so that both applications and IT expertise will find it easier to cross the line between on-premises and CC deployment.

1.5.1.2.: Infrastructure

Some limited applications can thrive on nothing more than the foundation described above. But upon reaching a certain size, they might benefit from a division of labour for the more elementary operations: storage, identity and integration. These infrastructure services are frequently provided in-house, but CC foundations are beginning to offer them as the number of cloud applications grows.

- Storage

Most applications need some local storage, which is an essential component of both on-premises and CC foundations. But as we have seen, remote storage is rapidly gaining ground as a supplementary resource for reasons of speed, redundancy and cost. There are many different kinds of remote storage available, of which Amazon’s Simple Storage Service (S3) is the most bare-bones and straightforward. It is an unstructured model, much like an unpartitioned warehouse full of “buckets”. A user’s data is simply balled up into an “object” and chucked into a bucket, from which it can be retrieved at will.

There is one caveat, however: these objects cannot be edited in place, so if you happen to have a big file which requires frequent appending, the whole object must be replaced every time. Similarly, if you want to query a single entry in a large database stored as one object, you generally have to retrieve the whole object. While this method is easily scalable, developers have to tweak their applications to keep time and costs down.
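The cost of "appending" to an immutable object can be made concrete with an in-memory model of buckets and objects. This is a hypothetical sketch, not the S3 API: the real service is reached through an SDK (for example, boto3’s `put_object` and `get_object` calls), which this sketch does not use. It counts uploaded bytes as a rough proxy for transfer cost.

```python
class ObjectStore:
    """Toy bucket/object model: objects are written and read whole, never edited."""

    def __init__(self) -> None:
        self.buckets: dict = {}
        self.bytes_uploaded = 0   # rough proxy for transfer cost

    def put(self, bucket: str, key: str, data: bytes) -> None:
        self.buckets.setdefault(bucket, {})[key] = bytes(data)
        self.bytes_uploaded += len(data)

    def get(self, bucket: str, key: str) -> bytes:
        return self.buckets[bucket][key]


def append_line(store: ObjectStore, bucket: str, key: str, line: bytes) -> None:
    """'Appending' means downloading the whole object and uploading a new one."""
    try:
        current = store.get(bucket, key)
    except KeyError:
        current = b""
    store.put(bucket, key, current + line)


store = ObjectStore()
for _ in range(3):
    append_line(store, "logs", "app.log", b"0123456789")
print(len(store.get("logs", "app.log")), store.bytes_uploaded)  # 30 60
```

Thirty bytes of log data cost sixty bytes of uploads after only three appends, and the overhead grows quadratically with the number of appends; this is the kind of behaviour developers have to design around.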

SQL Server Data Services (SSDS), the forerunner of SQL Azure, provides a more structured solution at the expense of simplicity and cost. Its equivalent of the bucket (a container) is more selective: it only holds objects (entities) with a fixed set of components (properties). An application can ask SSDS to look into a container for objects with certain components. For example, suppose we have a container called “sales invoices”, in which each object is a separate invoice comprising a date, a sum and the item sold. The application could then ask SSDS to look for invoices with a certain date.

The caveat here is that SSDS doesn’t actually use the SQL query language, nor is it a relational database like its namesake. The reason for this simplified approach is, once again, scalability. Amazon’s competing “shelved warehouse”, SimpleDB, uses a very similar method as well.
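The container-of-entities model, and the kind of query it supports, can be sketched in plain Python. The invoices and the `query` helper are hypothetical; the sketch only shows equality filters on properties, with no joins and no SQL, which is the simplification the text describes. Sums are kept in cents to avoid floating-point money.

```python
from datetime import date

# Hypothetical container "sales invoices": every entity has the same properties.
sales_invoices = [
    {"id": 1, "date": date(2010, 3, 1), "sum_cents": 12000, "item": "printer toner"},
    {"id": 2, "date": date(2010, 3, 2), "sum_cents": 4550, "item": "paper"},
    {"id": 3, "date": date(2010, 3, 1), "sum_cents": 999, "item": "stapler"},
]


def query(container, **conditions):
    """Return entities whose properties match every given value.

    Only equality filters on properties are supported: no joins, no SQL.
    """
    return [entity for entity in container
            if all(entity.get(prop) == value for prop, value in conditions.items())]


for invoice in query(sales_invoices, date=date(2010, 3, 1)):
    print(invoice["id"], invoice["item"])
# 1 printer toner
# 3 stapler
```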

- Integration

The UNIX programming philosophy is a sign of the times – “do one thing and do it well”. So it follows that applications have to communicate with one another. Depending on the amount of information exchanged, on-premises infrastructure services might range from a simple message queue to dedicated integration servers. It is the difference between a pin-up board and a telephone exchange.

But when these services migrate to the cloud, subtle changes are afoot. For instance, the message queue provided by Amazon’s Simple Queue Service (SQS) does what it says on the tin: it exchanges messages in the cloud. But whereas a traditional queue delivers each message in the order it arrives and exactly once, SQS works more like SMS: the service will do its best to deliver, but messages might arrive in the wrong order or in duplicate. One can imagine the havoc if a set of instructions were written like a food recipe, relying on strict order, as was the norm with on-premises queues.
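A common way to cope with best-effort delivery is to make the consumer tolerate it: drop duplicates and hold back messages until their predecessors arrive. This sketch assumes the sender numbers each message from 0; that sequence number is our own hypothetical convention carried in the message, not something the queue service provides.

```python
def process_in_order(messages):
    """Consume (sequence_number, instruction) pairs that may be duplicated or reordered.

    Returns the instructions executed, strictly in sequence order.
    """
    seen = set()        # sequence numbers already received
    pending = {}        # received but waiting for an earlier step
    next_seq = 0        # the step we are allowed to execute next
    executed = []
    for seq, instruction in messages:
        if seq in seen:              # duplicate delivery: ignore it
            continue
        seen.add(seq)
        pending[seq] = instruction
        # Release every step whose predecessors have all arrived.
        while next_seq in pending:
            executed.append(pending.pop(next_seq))
            next_seq += 1
    return executed


recipe = [(1, "add eggs"), (0, "whisk flour"), (1, "add eggs"), (2, "bake")]
print(process_in_order(recipe))  # ['whisk flour', 'add eggs', 'bake']
```

The `seen` set grows without bound here; a long-running consumer would need to prune it, for example by discarding numbers below `next_seq`.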

Another area where CC and traditional infrastructure differ is firewalls. Formerly, a business could get along with a simple barrier between the internal network and the Internet. But now, since applications might run in different organizations, firewalls must be furnished with suitable gates. A relay service such as those provided by BizTalk might take the place of message queues.

- Identity

Any corporate animal would be familiar with the login screen. It is just one of many implementations of digital identity, which is as widespread (think of email addresses) as it is deep (reaching from users down to applications and services). Depending on the key held, a system grants permission to do this thing and read that file. The login screen, for example, grants access through Windows Active Directory on-premises. But what about the cloud?
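At its simplest, the permission check behind a login can be pictured as a lookup from an identity to the actions it may perform on each resource. The following Python sketch illustrates only that idea. The users, file names and `*` wildcard are hypothetical choices of our own, and real directory services such as Active Directory add groups, inheritance and much more.

```python
# Hypothetical identities and the (action, resource) pairs each may perform.
PERMISSIONS = {
    "alice": {("read", "payroll.xlsx"), ("write", "payroll.xlsx")},
    "bob": {("read", "payroll.xlsx")},
    "backup-service": {("read", "*")},  # an application identity, not a person
}


def is_allowed(identity: str, action: str, resource: str) -> bool:
    """True if `identity` holds the permission directly or via the '*' wildcard."""
    granted = PERMISSIONS.get(identity, set())
    return (action, resource) in granted or (action, "*") in granted


print(is_allowed("bob", "write", "payroll.xlsx"))      # False
print(is_allowed("backup-service", "read", "crm.db"))  # True
```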

At the moment, identity services are wholly tied to the CC provider. If you are accessing resources from Microsoft, you have to use its Windows Live ID; if you are using Google App Engine, a Google account. There is as yet no implementation of digital identity that is independent of the provider, but that could change when open source implementations such as Eucalyptus gain a foothold. The current situation raises privacy and security concerns, as we will see later on.

1.5.1.3.: Application

Finally, application services: we are closing in on the holy grail of human interaction. While infrastructure services are designed to serve applications with their low-level, all-purpose operations, application services are designed with people in mind. Human beings have a habit of requesting specific, high-level tasks. So the former have to cater to the needs of every sort of application, whereas the latter are designed to tackle just a few, but in minute detail.

- SaaS

Nowadays most firms run on a mixture of off-the-rack software suites and tailored solutions. Users can access the system remotely, perhaps by a Virtual Private Network tunneled through a Secure Shell. From this perspective, the two kinds of software blend into one on-premises platform.

Substitute a cloud for your premises, and we have SaaS in a nutshell. Initially, as enterprises migrate to a CC application such as Salesforce’s CRM suite, they will also come across a number of services that can be used by their own on-premises applications. After a period of mingling, users might decide to deploy their own home-grown applications in the cloud as well. These in turn would provide their own services which can be integrated with other applications, by virtue of interoperability requirements built into the cloud infrastructure. Eventually they all mix into a big melting pot of functionality.

- Search

Apart from specialized business software, other cloud application services might also come in handy, but in unexpected ways. Search engines, for instance, cater to individual human queries magnificently. They can easily extend their service to cloud applications as well.

Often you will see corporate websites offering a search function that covers both their internal database and the wider web. Searching within a company is incomparably easier than searching the whole world: the web crawling and indexing that a global search requires cannot possibly be justified by such a minor convenience. So the application does the next best thing: it submits a duplicate query to an established engine, such as Google or Bing. The two sets of results are then mashed up into a single output for the user, who most likely doesn’t notice anything.
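The mash-up step might look something like the following minimal sketch. The calls to the internal search and to the external engine are left out (the two result lists are hard-coded, hypothetical URLs). Interleaving the lists while dropping duplicates is just one simple merging policy, not how any particular product does it.

```python
def mash_up(internal, external, limit=5):
    """Merge two ranked result lists, alternating between them and skipping
    any URL that has already appeared; return at most `limit` results."""
    merged, seen = [], set()
    for i in range(max(len(internal), len(external))):
        for results in (internal, external):
            if i < len(results) and results[i] not in seen:
                seen.add(results[i])
                merged.append(results[i])
    return merged[:limit]


# Hypothetical results for the same query from the intranet and a web engine.
intranet = ["intranet/pricing", "intranet/returns", "example.com/faq"]
web = ["example.com/faq", "news.example.org/review", "forum.example.net/thread"]
print(mash_up(intranet, web))
```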

- Geographical Information System (GIS)

This mode of operation extends to maps as well. Many firms could use one, from retailers showing the location of your nearest outlet to logistics firms plotting the shortest routes and keeping track of their vehicles. Before CC, there were only two options available: either resort to a low-level graphical interface, or build a mapping database from scratch or purchase one.

Hence Google Maps: a web developer simply instructs the application to retrieve information from the Google cloud, customizes it as he or she sees fit, and publishes it directly to users. It is a symbiotic relationship: the firm naturally dispenses with the cost and hassle of delivering a whole GIS. But it is Google that benefits most from the transaction.

Having deployed such an immensely scalable platform, the search engine can take in as many users as it sees fit. And every user contributes to the system: the petrol station store locator application, for example, has now entered the location of all its outlets in Google’s cloud. While the data cannot be resold per se, the wealth of information makes Maps an alluring prospect for advertising. Now that drivers are checking this information regularly, car mechanics might want to make use of Maps as well. The cycle continues.
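The nearest-outlet lookup is a good example of the computation a hosted map service takes off a retailer's hands. The sketch below finds the closest outlet by great-circle (haversine) distance. The outlet names and coordinates are illustrative, and a real service would also account for roads and travel time rather than straight-line distance.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (latitude, longitude) points."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def nearest_outlet(customer, outlets):
    """Return the name of the outlet closest to the customer's (lat, lon)."""
    return min(outlets, key=lambda name: haversine_km(*customer, *outlets[name]))


# Hypothetical outlets: name -> (latitude, longitude)
outlets = {
    "Westminster": (51.4975, -0.1357),
    "Camden": (51.5390, -0.1426),
    "Greenwich": (51.4826, 0.0077),
}
print(nearest_outlet((51.5033, -0.1196), outlets))  # Westminster
```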

- Other

If a person can access a web service, an application almost certainly can as well. (Of course, some websites might not like it. Hence the proliferation of CAPTCHA – fuzzy numbers and letters that a website asks you to type in, so that they know you’re a human being and not a piece of code.)

Websites are embracing this change. Web photo galleries, online contact databases and office document systems are all rushing to expose their APIs, so that their functionality can be accessed by other applications. This generosity is prompted by the possibility of synergy, where two web applications, each using the other’s specialty, hit the right spot for users. A phone book is a dime a dozen, as is VoIP (internet telephony) software. But what if the two could talk to each other in the background? If properly implemented on a mobile phone platform, they could demolish the international telecoms market in tandem.

The big players are trying very hard to make this a reality. Every web service under Google is accessible through its data API, as is everything under Microsoft, under its Application-Based Storage.

If it seems a bit confusing as to where infrastructure services end and application services begin, it is just as well. A bucket of data is an infrastructure service (every application can use it), whereas a photo gallery isn’t (only applications that display photos in web pages need it). But what about cross-application alerts or identity services? There is no clear-cut delineation or rigidly defined boundary to be sought here, because the developments are still quite nascent.

In the next section, let us take a look at some specific examples of the different layers that make up CC. We will go all the way to the front end, where human users reside.

1.5.2.: Examples

1.5.2.1.: Infrastructure

Infrastructure-as-a-service, as the general public understands it, is really more about the CC foundations we described previously. As the lowest common denominator in the hierarchy, it denotes such resources as the physical hardware, networking, and a basic operating system. This business model stems from the virtual private server offerings of the late nineties. These servers are usually furnished with CC in mind, so expect parallel computing, scalable systems, and possibly a degree of infrastructure service integration.

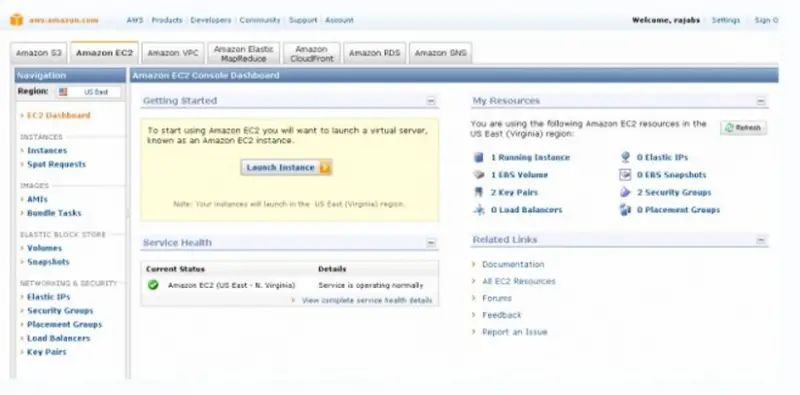

As described in the previous section, Amazon is probably the leading provider of such services through its Elastic Compute Cloud (EC2). The word elastic describes the way a user can create, run and shut down virtual machines as he or she sees fit, so as to get the most out of pay-by-the-hour pricing. There is also a degree of geographical flexibility involved, which helps with latency and redundancy issues.
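The economics of elasticity can be shown with a little arithmetic. The sketch assumes a hypothetical price of $0.10 per instance-hour, and it assumes that any partial hour is billed as a full one. It compares keeping ten machines running all month for a four-hour daily peak with scaling up only for the peak.

```python
import math

HOURLY_RATE = 0.10  # hypothetical price per instance-hour, in dollars


def bill(runs, rate=HOURLY_RATE):
    """runs: list of (instance_count, hours_running) pairs.
    Assumes every partial hour is charged as a full hour."""
    return sum(count * math.ceil(hours) for count, hours in runs) * rate


DAYS = 30
# A workload needing 10 machines for a 4-hour daily peak and 2 machines otherwise.
always_on = [(10, 24 * DAYS)]        # provision for the peak around the clock
elastic = [(10, 4), (2, 20)] * DAYS  # scale up for the peak, down afterwards

print(f"always on: ${bill(always_on):.2f}")  # always on: $720.00
print(f"elastic:   ${bill(elastic):.2f}")    # elastic:   $240.00
```

Under these assumptions the elastic approach costs a third as much, because the eight extra machines exist only while they are needed.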

We have discussed the need for load balancing across multiple servers in the event of high demand, or where more responsiveness is desired. In EC2, users can specify that their virtual machines (instances, as they are called) run in certain geographical locations (subject, naturally, to Amazon’s availability).

First, consider the gain to speed. If your instance caters mostly to Europe and America, but needs to be very responsive to requests from either side of the Atlantic, why not split it up into two mirror images and place them accordingly? They can now serve the majority of requests locally while maintaining the ability to pool their capacity at peak hours.

Or think about the possibilities of force majeure. A server in California is exposed to a risk of rolling brownouts: even if the farm itself is insulated by an Uninterruptible Power Supply, its internet service provider could still face bottlenecks. Meanwhile, earthquakes have, on occasion, severed transoceanic fiber optics: the last time this happened, much of East Asia was left with severely degraded connectivity to the rest of the online community for weeks. A suitably located set of instances, configured for redundancy (mutually backing each other up), might spell the difference between hassle and doom.
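Both ideas, serving requests locally and falling back on a mirror when one site is full or fails, can be combined in a simple routing rule. This Python sketch is hypothetical throughout. The region names, capacities and the `online` flag stand in for whatever health checks and load figures a real deployment would use, and the sketch is not how EC2 itself assigns traffic.

```python
def route(origin, regions):
    """Pick a region for one request coming from `origin`.

    Prefers the origin's own region (lowest latency); if that region is full or
    offline, overflows to the online region with the most spare capacity.
    regions: dict of name -> {"capacity": int, "load": int, "online": bool}
    Returns the chosen region name, or None if nothing can take the request."""
    def available(name):
        r = regions[name]
        return r["online"] and r["load"] < r["capacity"]

    if origin in regions and available(origin):
        chosen = origin
    else:
        candidates = [name for name in regions if available(name)]
        if not candidates:
            return None
        chosen = max(candidates, key=lambda n: regions[n]["capacity"] - regions[n]["load"])
    regions[chosen]["load"] += 1
    return chosen


# Hypothetical mirror images on either side of the Atlantic, plus a spare in Asia.
regions = {
    "eu-west": {"capacity": 3, "load": 0, "online": True},
    "us-east": {"capacity": 3, "load": 0, "online": True},
    "ap-east": {"capacity": 2, "load": 0, "online": True},
}
# A European peak: the fourth request overflows once Europe is full.
print([route("eu-west", regions) for _ in range(4)])
# ['eu-west', 'eu-west', 'eu-west', 'us-east']

# A brownout takes the American region offline; its users fail over.
regions["us-east"]["online"] = False
print(route("us-east", regions))  # ap-east
```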

1.5.2.2.: Application

Now that we have drawn a muddled distinction between application services and applications, a few illustrations might help. Essentially, we’re looking for something that fits the following criteria:

- Hassle-free installation or on-the-fly application (through browsers and embedded plug-ins in a web page)

- Little or no maintenance (upgrades, software patches and even new features are rolled out behind the scenes)

- All the hard work done elsewhere (actual computation, storage, and operations with the wider web)

- One provider serving many clients simultaneously (even if the client is unaware of it)

One popular and free web application which ticks all these boxes is Google Docs. It was originally developed by a small firm, whose entire team and product Google bought outright. Equipped with an extensive server farm which spans the entire globe and is arguably the most powerful supercomputer in existence, Google then implemented the office suite on a larger scale. Word processing, spreadsheet, database and data storage services, all previously part of Microsoft Office’s unassailable turf, are made available to anyone with a Google account. Nothing needs to be downloaded: a document can be created and edited directly in the browser, through an interface which resembles a souped-up, rich-text version of Google’s email client.

This, in fact, is a natural development for the firm, which surprised the world earlier when it first announced a free gigabyte-sized email account. While webmail is not normally considered a part of CC, the two nevertheless share many characteristics. The only real difference is that webmail doesn’t translate into much server workload: it is essentially the computer equivalent of a courier, only with speedier legs and a really big mailbag. So from a search engine’s point of view, it is not a huge sacrifice to give out spare storage space, since it usually has quite a bit of it, reserved for caching (a temporary record of every page on the internet for reference) and indexing.

But search engines do require processing power: all the crawling (reading everything on the web and making notes of it) is a never-ending, ever-growing task. Google didn’t mind, having taken to the task as an investment, since it figured that CC is the future and it doesn’t hurt to let everyone have a taste of what is to come.

The personal edition took off. For the majority of computer users who deal with office documents day-in, day-out, office work became a lot more mobile. There was no need to keep files here and there, perhaps on a thumb-drive to take home or somewhere in the abyss of the company Intranet, and upgrade the corresponding software for good measure. Your data and your tools are now all accessible from anywhere.

But compatibility remains a thorny issue. While everything works together within the realm of Google Docs, the rest of the world is still struggling with Microsoft Office’s legacy. With the exception of Adobe PDF and possibly XML, all attempts to define open standards for office documents have gone nowhere. “Supporting .doc” is one thing, but ensuring WYSIWYG (What You See Is What You Get) is quite another, especially when we are working across platforms.

This is where we see the challenges and potential of SaaS in their full glory. Students and individuals are clamouring for Google Apps, but its subscription-based business edition still has the feel of an experimental knick-knack next to other established proprietary systems. It remains to be seen whether these strangleholds will be released by CC or open source alternatives.

1.5.2.3.: Clients

Throughout this analysis, we have neglected one vital component of CC: the front end, where the human user comes into contact with the vast and hidden operation. Most of the time, we have a cloud client comprising simple hardware loaded with basic software. The first example that comes to mind is your personal computer – a rather standard piece of equipment with an Internet connection. It handles documents and web pages with reasonable speed. And it comes with an operating system and a browser. You might have paid for them when you bought the computer (if it’s running Windows or Mac), or they might be free, open source ones (usually Linux).

That’s your cloud client there. Of course, other options are being rolled out as we speak. A mobile phone running a scaled-down OS, say Android, can also access the Internet through a browser over a 3G or WiFi connection. Depending on your application, it might run CC directly, or through an additional interface in the form of a small application which sits on your device. This application doesn’t actually do the job or store the data; it simply takes your order like a waiter and brings you the food, so you don’t have to shout to the chef through the dumbwaiter that is the standard mobile browser.

In this respect, Google again takes the lead with two products – Android and Chrome. The former is a mobile operating system available on many mobile phones, while the latter is a browser metamorphosing into an OS. The two projects target very different devices at the moment, which results in incompatible implementations: Android uses the Java, C and C++ programming languages, while Chrome uses HTML, CSS, and JavaScript. But the two might be bridged in the future, and in any case they will serve parallel functions over the next few years.

Android is a foundation, which means it doesn’t do anything for users per se. But a developer can build an application according to Google’s specifications, which would then run smoothly on any Android phone. An application’s internal demands (“Where did the user save the data? Which wire goes to the buzzer?”) are handled by the OS in a uniform fashion, which means developers can focus on making one good piece of code, rather than multiple versions for every new phone model.

iPhones may be all the rage at the moment, but applications have to be written specifically for them, and, most importantly, their sales are controlled by Apple. With Google, however, an application works on dozens of models from different manufacturers in the Open Handset Alliance, and it can be sold without such limitations. With the advent of amateur programming, made possible by the new App Inventor software built in collaboration with the Open Blocks Java library, an explosion of Android applications is imminent, in the same way GeoCities and Dreamweaver paved the way for a rush of personal web pages in the nineties.

But Chrome holds bigger promise still. When it was first released two years ago, the market was already saturated with Internet Explorer, Mozilla Firefox, and Opera users. Chrome managed to scavenge a respectable 10% by virtue of speed and accessibility. But on the surface, it’s just another browser.

The key is that it is built on a different philosophy, one with CC at the core rather than as an adjunct. When browsers were first invented, web pages were little more than text plus hyperlinks. Now, we have scripts (little snippets of programs), perhaps a plug-in to render videos, or even a full application written in Java. Instead of having an all-purpose toolbox on an all-purpose workbench, Chrome (which renders pages with the WebKit engine) runs individual pages and plug-ins in separate processes, increasing stability and security as a result. Independent auditors assert that it is the fastest browser in existence.
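The benefit of isolation is easy to demonstrate. In this rough analogy, each “tab” is a tiny hypothetical script run in its own operating-system process via Python's `subprocess` module, and one of them is made to crash on purpose. Chrome's real architecture is far more elaborate; the sketch only shows why one failing page need not take the others down with it.

```python
import subprocess
import sys

# Each "tab" is a tiny hypothetical script; the second one crashes hard.
TABS = {
    "news": "print('rendered news page')",
    "buggy-plugin": "import os; os._exit(3)",  # simulate an abrupt crash
    "mail": "print('rendered inbox')",
}


def render_all(tabs):
    """Run every tab in its own OS process and report how each one ended."""
    results = {}
    for name, script in tabs.items():
        proc = subprocess.run([sys.executable, "-c", script],
                              capture_output=True, text=True)
        results[name] = "crashed" if proc.returncode != 0 else proc.stdout.strip()
    return results


print(render_all(TABS))
# {'news': 'rendered news page', 'buggy-plugin': 'crashed', 'mail': 'rendered inbox'}
```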

The reason Google invested in this project is hardly philanthropic: it expects such huge growth in the use of CC applications, whether its own or other suppliers’, that the front end is going to become a bottleneck. Its interface is also designed with applications in mind: you click on an icon that loads up, say, Google Maps, instead of typing in a URL. The interface now has both the speed and feel of desktop software.

As a matter of fact, since the browser is by far the most used piece of software, we might do away with the rest altogether. Those who are satisfied with the web applications presently available, and have no need for any locally installed programs or storage space, might be able to switch to a Chrome OS in the near future.

While it seems rather drastic to purge your computer of everything but the browser, there are solid advantages. Since the browser is all there is to the OS, and no other services need to be set on standby, booting up takes much less time. Without local data storage, both power consumption and the risk of data loss are minimized. Of course, it can’t be helped if you lose your login details – indeed it might be worse than losing your old-fashioned laptop, since a login means both data and identity: we will come to that later.

1.6.: Public and Private Clouds

Now that we have established the technology itself and its business applications, it is time to look at CC in the wider context. Until now, our focus has been on services provided by individual firms to public subscribers. Each provider runs its own farm.

This model, which we might call a public or external cloud, works most of the time for casual applications. Uptime is usually acceptable (and who can complain about monthly interruptions when you paid so little for it?), and standard security measures are implemented across all users. The average subscriber, prone to using pet names for passwords, isn’t going to care about brute-force attacks.

Enterprises and governments cannot afford such a slipshod approach, since lapses in privacy, security or policy compliance could mean enormous financial loss. Some data centres specify “five-nines” uptime (99.999%, or about five minutes of downtime a year). Others demand a very high level of data redundancy, so that even if the majority of their data banks are irrevocably destroyed, the entire set of data is still intact.
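The arithmetic behind “five-nines” is simple: the allowed downtime is the unavailable fraction of time multiplied by the length of a year. The snippet below assumes a 365.25-day year and prints the yearly downtime budget for two to five nines.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # 525,960 minutes in an average year


def downtime_minutes_per_year(nines: int) -> float:
    """Yearly downtime allowed by an availability of `nines` nines
    (e.g. 5 nines = 99.999% up, so 0.001% of the year may be down)."""
    return 10 ** -nines * MINUTES_PER_YEAR


for n in range(2, 6):
    print(f"{n} nines: {downtime_minutes_per_year(n):8.1f} minutes per year")
```

Five nines works out at about 5.26 minutes a year, while three nines already allows nearly nine hours.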

While public clouds are unlikely to satisfy these needs, CC as a whole remains an attractive option: after all, the infrastructure is immensely capable, and public clouds fall short only because they are built to a budget. Why don’t these organizations build a cloud amongst themselves?

And so we have community clouds: a makeshift answer for demanding customers. If they can agree on what they want precisely, just a few organizations can share their infrastructure so as to realize the benefits of cloud computing. The costs are higher, naturally, since they are spread over fewer customers, and capital expenditure is inevitable. But benefits in cloud computing such as scalability could conceivably outweigh the financial burden.

Of course, any demarcation we draw in the cloud tends to get muddled up. What if a firm wants the best of both worlds – public clouds for non-critical tasks (e.g. word processing), and community clouds for sensitive data (e.g. customers’ credit card numbers)? A firm doesn’t have to commit to CC services all at once: the transition might be a gradual one, involving different vendors so as to compare and compete. Take a website with customer login, for example: the web server could go on the cloud while the database remains in-house. Meanwhile, the data on the two could be synchronized from time to time. Hybrid CC, as this mode might be called, is by far the most popular option for first-time users.
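How the two copies are kept in step is up to the firm. One simple policy, shown in this sketch, is “last write wins”. Each record carries the time of its last update, and during a periodic sync the newer version overwrites the older one on both sides. The record layout and timestamps are hypothetical, and the policy has well-known weaknesses: the clocks on the two sides must agree, and concurrent edits silently lose one update.

```python
def sync(cloud, on_premises):
    """Make two replicas agree, key by key, using "last write wins".

    Each replica maps key -> (timestamp, value). For every key, the entry with
    the later timestamp is copied to both sides."""
    for key in set(cloud) | set(on_premises):
        versions = [replica[key] for replica in (cloud, on_premises) if key in replica]
        latest = max(versions, key=lambda entry: entry[0])
        cloud[key] = on_premises[key] = latest


# Hypothetical customer records: id -> (time of last update, e-mail address)
web_tier = {"c1": (100, "ann@example.com"), "c2": (250, "bo@new.example.com")}
database = {"c2": (200, "bo@old.example.com"), "c3": (120, "cy@example.com")}

sync(web_tier, database)
print(web_tier == database, database["c2"])  # True (250, 'bo@new.example.com')
```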

(Despite Gordon Moore’s optimistic forecast of exponential growth in computational technology, known as Moore’s Law, we haven’t quite reached the stage of private clouds yet. The term is controversial in any case: unless the user is so large as to consume enormous IT resources, there is simply no economic case for buying, building and managing a private cloud. Of course, this doesn’t prevent vendors from slapping the term on everything they sell: virtualization, for example, has been around for decades. A tangled jumble of PCs and VPNs does not make a cloud.)

1.6.1.: The Inter-cloud

Just to complicate things, a cloud of clouds, or Inter-cloud, is coming. Bluntly put, it remains a hopeless fantasy as of 2010, just as 3G was back in 2001. We should be there in a few years, and there are no obvious obstacles, but the devil in the details should prove to be a formidable opponent.

This term originates from a description of the Internet: “a network of networks”. When ARPANET, the very first internet, was set up in the late sixties, it had a definite function: facilitating the exchange of information amongst research institutions. Each of them had many computers wired together to form a local network, so that researchers could share data across terminals without having to pass floppy disks around. But what if a colleague was employed by another institution with a separate local area network?

Hook them up, naturally. But as more and more institutions rushed to join, a point-to-point approach became infeasible. A packet of data can’t just wander around, hoping to bump into its destination by sheer chance. There have to be directions, signage and traffic management; hence, a network of networks.
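Those directions are what routing provides. As a small illustration, the sketch below runs Dijkstra's shortest-path algorithm over a network of networks. Link-state routing protocols such as OSPF compute routes in a similar way within a network, although the Internet as a whole also relies on other protocols. The four site names are those of the first ARPANET nodes, but the links and costs are made up for the example.

```python
import heapq


def shortest_route(links, source, target):
    """Dijkstra's algorithm. links: dict of network -> {neighbour: cost}.
    Returns (total_cost, path), or (inf, []) if the target is unreachable."""
    queue = [(0, source, [source])]  # (cost so far, current network, path taken)
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, step in links.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + step, neighbour, path + [neighbour]))
    return float("inf"), []


# Hypothetical links between four campus networks, with made-up costs.
links = {
    "UCLA": {"SRI": 1, "UCSB": 1},
    "UCSB": {"UCLA": 1, "Utah": 3},
    "SRI": {"UCLA": 1, "Utah": 1},
    "Utah": {"SRI": 1, "UCSB": 3},
}
print(shortest_route(links, "UCLA", "Utah"))  # (2, ['UCLA', 'SRI', 'Utah'])
```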

So what exactly does it mean to have a cloud of clouds? With the internet, we have a superb framework for sharing information which essentially co-ordinates itself. In clouds, we share resources: storage space, memory, processing power, and bandwidth. Why can’t that extend to the entire world, just as the internet did?

This was prophesied as early as 2007. From one machine, a person could access all other machines on the planet, utilizing them without even being aware of it. While it sounds wonderful, the point of doing so isn’t immediately apparent. Ever since online streaming superseded peer-to-peer video sharing, we can’t even fill up a hundred dollars’ worth of storage, let alone processing power.

It’s not about whether we have infinite demands, but the fact that we don’t have infinite supplies. Even a cloud as big as Google’s can easily be saturated: peak loading can be alleviated but never entirely solved by CC. If Amazon can spare a few petabytes for the next five minutes or so, why can’t it lend them out for a suitable amount of compensation?

What works for the two of them could equally apply to others, big and small. Theoretically it is very much possible to link everyone up, but the challenges are legion: technical, financial, and legal. Yes, computation is just computation – as the mathematician Alan Turing showed in 1936, any computer can do the job of any other computer, provided that it has an unlimited amount of storage. But interoperability, quality-of-service, and even billing issues are severe hindrances to the Inter-cloud. If we can’t even solve the issue of paywalls satisfactorily (charging readers for access à la Murdoch’s Times – see how tepid the industry response was), we might never live to see the Inter-cloud in action, especially if the cost of information storage and computation drops sufficiently to make the whole thing moot.

1.6.2.: Blending the clouds?

A seamless hybrid isn’t within reach yet, but workarounds do exist.

If you manage a complex, multi-tiered application, serve a multitude of users with messy access rights, and implement on-premises databases, chances are you would be lured by the promises of CC. And rather predictably, you are going to face some serious hurdles. There will be a lot of reconfiguration and re-engineering: it eases your workload if both public and private clouds run the same platforms, and don’t expect too much with regard to environment standards like the Open Virtualization Format.

Once you make up your mind to migrate to hybrid clouds, check whether you have the following tools: a security infrastructure that works in both clouds, a safe and cheap channel on which to access or duplicate data, and co-ordination systems that monitor the set-up from both ends. If so, you can begin with the simplest modules: those used by fewer users, or “stateless” ones, which are independent of the timing and sequence of their colleagues’ actions (in short, the loners and wallflowers).
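The difference between a stateless module and a stateful one can be shown in a few lines. In this hypothetical Python sketch, `quote_price` depends only on its arguments, so copies in the public and private clouds are interchangeable. `RunningTotal`, by contrast, remembers earlier calls, so splitting its requests between two copies gives inconsistent answers.

```python
def quote_price(net_amount: float, vat_rate: float) -> float:
    """Stateless: the answer depends only on the arguments, so any copy of this
    module, in either cloud, can serve any request in any order."""
    return round(net_amount * (1 + vat_rate), 2)


class RunningTotal:
    """Stateful: each answer depends on every earlier call, so all requests must
    reach the same copy, in the right order."""

    def __init__(self):
        self.total = 0.0

    def add(self, amount: float) -> float:
        self.total += amount
        return self.total


requests = [100.0, 250.0, 40.0]  # hypothetical order values

# Stateless: it makes no difference which cloud answers each request.
print([quote_price(amount, 0.2) for amount in requests])

# Stateful: split the same stream between two copies and their totals diverge.
on_premises, in_cloud = RunningTotal(), RunningTotal()
print(on_premises.add(requests[0]), in_cloud.add(requests[1]))  # 100.0 250.0
```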

Open source software has provided the foundation for many CC implementations, but that doesn’t translate into open standards. We can expect some well-documented APIs (typically published under a Creative Commons license), but they exist precisely because each platform has unique and non-interoperable features. The Open Cloud Consortium has been convened to form a consensus on standards and practices.

Happily, vendors are rushing to develop easier options. In 2012, we can expect to see the first clouds designed with blending in mind. Until then, an on-going effort to standardize configurations and policies on the existing foundation would help a lot when the final moment of truth arrives.

1.7.: Issues

When a password is all it takes to access everything (data, applications), a user had better make sure it is a safe one. By that we mean one that is sufficiently complicated and hidden from view.

It turns out that the latter requirement isn’t as easy as it sounds. An intruder armed with a key-logger (a piece of malicious software that records every key pressed on a keyboard) could find vulnerable spots in a user’s computer where the code could sit without being noticed. Logging onto the cloud, the user has unwittingly handed everything over.
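The first requirement, complexity, can at least be estimated. The rough sketch below measures a password's brute-force search space in bits as its length times log2 of the alphabet size, with the alphabet inferred from the character classes the password uses. It is an upper bound under that simple assumption. It ignores dictionary attacks, which make a pet's name far weaker than its score suggests, and no score helps against a key-logger.

```python
import math
import string

CHARACTER_CLASSES = (string.ascii_lowercase, string.ascii_uppercase,
                     string.digits, string.punctuation)


def search_space_bits(password: str) -> float:
    """Brute-force search space in bits: length * log2(alphabet size), where the
    alphabet is every character class the password draws on. Dictionary attacks
    are ignored, so real-world strength may be far lower."""
    alphabet = sum(len(chars) for chars in CHARACTER_CLASSES
                   if any(c in chars for c in password))
    return len(password) * math.log2(alphabet) if alphabet else 0.0


for pw in ["rover", "rover2010", "T7#qLz!9pWm2"]:
    print(f"{pw!r}: about {search_space_bits(pw):.0f} bits")
```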

Authentication is but one of many scenarios where CC can go wrong. First and foremost are issues of security and privacy: the very thought that company or customer information is being actively transferred to another firm unsettles a lot of executives. Cloud operators naturally do all they can to alleviate the security risk, but the novelty of the situation means that mistakes will be made.

And what of legal obligations? Who actually owns the data? What if an operator’s oversight is aggravated by the user’s bad coding? As of 2010, the law books have very little to say on this, because the relevant chapter doesn’t get written until someone brings suit. As with other concerns, the Cloud Computing industry simply hasn’t had enough test cases.

1.7.1.: Privacy

This concern isn’t limited to the Internet, naturally, as we saw when it emerged that the NSA had been collecting records of phone calls made on the AT&T and Verizon networks.

With clouds comes geographical mobility. But for the legally minded, this brings with it a boatload of issues: multiple jurisdiction, division of responsibility and even extradition treaties in case criminal charges are pressed, as with the BitTorrent case. What if we have a British user hiring Indian coders to migrate its database of Russian customers to a cloud-storage operator based in America with physical servers in Norway? And what if Chinese intruders stole it and sold it back to a Brit? In this day and age, this hypothetical transaction is hardly hyperbole. But previous class actions demonstrated that it is difficult enough to prosecute when all this happens within a single country.

Until we have a globalized legal industry that transcends physical borders, any CC-related disputes that stem from privacy concerns will have to be dealt with on a case-by-case basis. There are few precedents on the books, and even the legal principles themselves have not been sufficiently established, as with the US Supreme Court’s debacle on the right to privacy.

1.7.2.: Compliance

Governments are acutely aware of this possibility. When something is too technical or complex even for bureaucrats to handle, the whole problem is delegated to a regulatory regime. So in America, users have to comply with the FISMA, HIPAA and SOX regulations, and in the EU with the Data Protection Directive. The credit card industry, seeing that its liabilities for fraud can only rise as a result of CC, further specified the PCI DSS. Of course, providers have also come up with an industry certification, but what it actually entails is seldom publicly disclosed. In order to adhere to the guidelines, users might find themselves paying a premium for community clouds with restricted benefits.

1.7.3.: Security of Cloud Computing

Suppose you are willing to take the risk. You pick an expensive CC provider with every certification in the book. It doesn’t necessarily translate to extra security, though, if you set up your application badly. It is like building a heavily fortified gate and then leaving it unlocked, because you can’t be bothered with carrying keys.

It is not hugely difficult to set up a secure platform from the provider’s point of view. But since users are likely to build their own applications, providers cannot foresee the consequences when untested components are run on their servers. To co-ordinate their security efforts, developers on both ends have to exchange a lot of information. All the documentation in the world may not do away with the need for a meeting in these cases, especially since practices differ in the absence of open standards.

For example, virtualization means that new machines with network interfaces are a lot easier to set up in the cloud. A traditionally configured firewall, accustomed to infrequent hardware upgrades, has no way of promptly knowing that a new unguarded gateway exists, let alone that it is responsible for its security. That is why it is important to check your fences yourself, rather than putting all your eggs in your CC provider’s basket.
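Checking the fences can be partly automated. The sketch assumes you can obtain a list of running instances with their addresses (from the provider's inventory, say) and the subnets your firewall actually guards. Both are hypothetical, hard-coded values here, and the check simply reports any live instance that no rule covers, using Python's standard `ipaddress` module.

```python
import ipaddress


def unguarded(running_instances, guarded_subnets):
    """Return the ids of live instances whose address no firewall rule covers.

    running_instances: dict of instance id -> IP address (hypothetical inventory);
    guarded_subnets: CIDR ranges the firewall is configured to protect."""
    networks = [ipaddress.ip_network(s) for s in guarded_subnets]
    return sorted(
        iid for iid, ip in running_instances.items()
        if not any(ipaddress.ip_address(ip) in net for net in networks)
    )


# Hypothetical snapshot: i-3 was spun up yesterday on a new subnet,
# and nobody told the firewall.
running = {"i-1": "10.0.1.11", "i-2": "10.0.1.12", "i-3": "10.0.2.27"}
protected = ["10.0.1.0/24"]
print(unguarded(running, protected))  # ['i-3']
```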

1.8.: Conclusion

In a way, computation has come full circle. When enterprise mainframes were first set up, multiple users accessed the same infrastructure via terminals. These were essentially glorified I/O devices (keyboard and screen plus very long wires) with no local resources whatsoever.

And yet we have the modern personal computer, the ultimate in-house solution, made possible by a lull in data exchange technology in the face of tremendous microchip developments. Now that the former has come back with a fiber-optical vengeance, are we going back to the old model?

Certainly, many tasks will migrate to the cloud. Data storage, for instance, has become indispensable in most cloud applications (think emails and backup servers: would you go back to 10MB POP3 servers or cassette tapes?) Similarly, where the personal web is concerned, connectivity is a major lure for application developers. But for business users, what is lacking is a standard, a broad consensus on how cloud servers should be run.

We certainly have the technical know-how, but CC is not about technology. It is about economics and management: how to use resources more efficiently, without compromising such requirements as security and reliability. In these aspects, we have no equivalent of that miraculous Moore’s Law, only Murphy’s version: anything that can go wrong, will go wrong.

IT professionals aren’t scrambling to update their resumes yet. It is anybody’s bet whether we will reach the nirvana of universal cloud, since we aren’t even sure if we’d like it in the first place. Unlike the case of the Internet, where an abundance of information is already a reality, computational resources will always mean money for the foreseeable future. If it’s cheap, we might want to keep something off the cloud, and if not, well, what’s all this talk of paradise?



AWS = Amazon Web Services

AMIs = Amazon Machine Images

EBS = Elastic Block Store

HPC = High-performance computing

When registering with Amazon, after you give your credit card details, you get a phone call for verification. You need to enter, on your phone’s keypad, the PIN displayed on your screen.


By Sharath Reddy