What Is Gesture Recognition?

Gesture recognition is the ability of a computer or device to observe and interpret human gestures as input. Such gestures include hand movements and even the writing of symbols. The technology behind gesture recognition uses cameras or other sensors to capture the gestures and then applies machine learning algorithms to analyze and interpret the captured data.

Gesture recognition can be used in a variety of applications. It can be used in entertainment settings such as video games and virtual reality. It also offers new ways of interacting with interfaces, such as controlling a presentation or playing music by gesturing at a device.

Why Is Hand Gesture Recognition Important?

Gesture recognition is one of the most important methods for creating a user-friendly interface. Gestures can originate from any bodily motion or state, but most commonly from the face or hand. With gesture recognition, users can control devices without physically touching them. Various technologies and machine learning techniques can be used to identify and interpret different human gestures, making human-machine communication more effective. It might eventually make conventional input devices such as touchscreens, mice, and keyboards obsolete.

Hand gestures are a natural way of communicating when one person interacts with another, so hand movements can be treated as a non-verbal form of communication. Hand gesture recognition is the process of understanding and classifying meaningful movements of the human hands. In the automotive industry, for example, vehicles offer a large number of infotainment systems and comfort features that the driver can control.

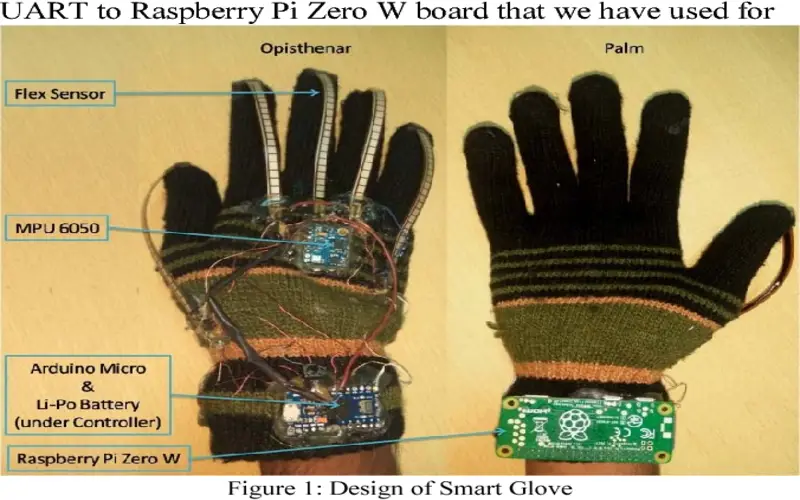

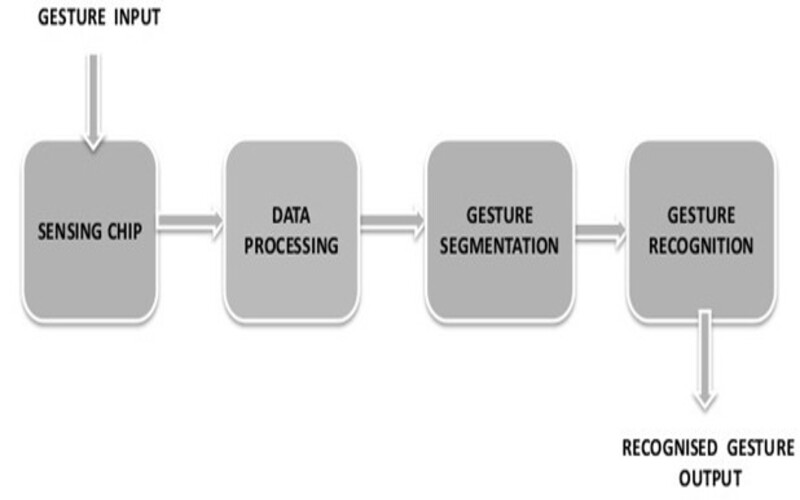

1. Sensor-based Hand Gesture Recognition

A sensor-based gesture recognition algorithm uses sensors to detect and analyze human gestures. Several types of sensors can be used, such as cameras, infrared sensors, and accelerometers. These sensors collect data about the movement and position of a person’s body or limbs, and the algorithms then use this data to identify particular gestures.
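As a rough illustration of how sensor data might be turned into a gesture, the sketch below flags a "shake" from a list of accelerometer readings. The function name `detect_shake`, the 15 m/s² threshold, and the `min_peaks` count are assumptions chosen for this example, not values from any particular device or library.

```python
import math

def detect_shake(samples, threshold=15.0, min_peaks=3):
    """Return True if at least `min_peaks` readings exceed `threshold`.

    `samples` is a list of (x, y, z) accelerometer readings in m/s^2.
    The threshold and peak count are illustrative assumptions.
    """
    peaks = 0
    for x, y, z in samples:
        # Overall acceleration strength, independent of device orientation.
        magnitude = math.sqrt(x * x + y * y + z * z)
        if magnitude > threshold:
            peaks += 1
    return peaks >= min_peaks

# A device lying still only measures gravity (about 9.8 m/s^2).
resting = [(0.0, 0.0, 9.8)] * 10
shaking = [(12.0, 9.0, 9.8), (0.0, 0.0, 9.8), (-14.0, 8.0, 9.8), (13.0, -10.0, 9.8)]

print(detect_shake(resting))  # False
print(detect_shake(shaking))  # True
```

A fixed threshold is the simplest possible rule. A practical system would more likely learn the decision from labeled recordings.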

2. Vision-based Hand Gesture Recognition

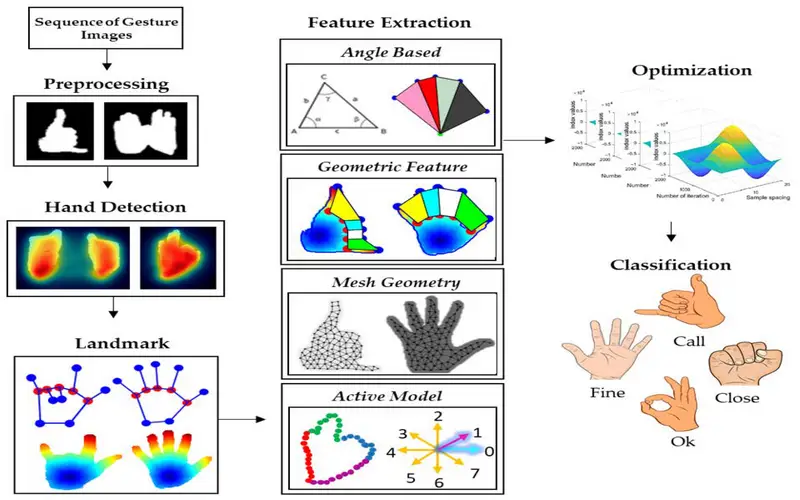

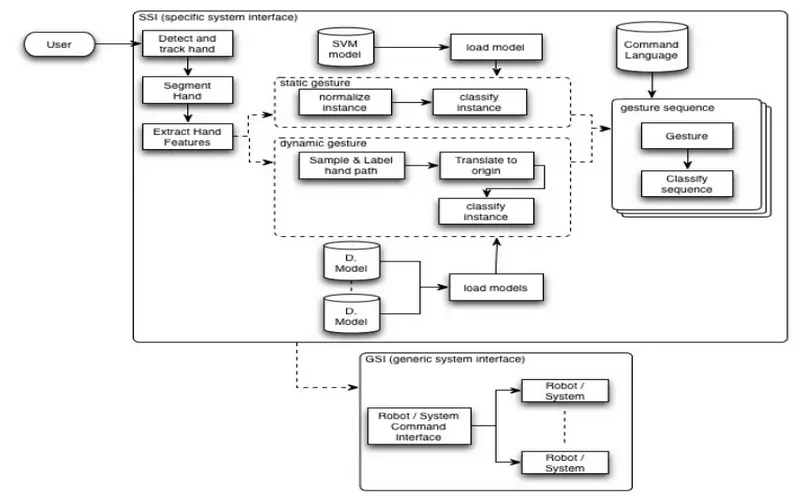

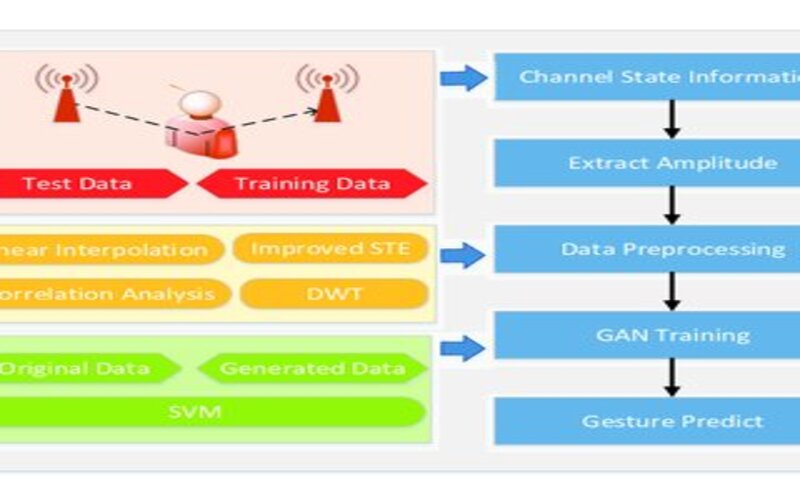

A vision-based gesture recognition system generally involves several key components: a visual sensor, which captures the images or video; a pre-processing component, which prepares the data for analysis; a feature extraction module, which extracts relevant information from the data; a classification module, which recognizes gestures using machine learning algorithms; and a post-processing component, which maps the classification module’s output to a specific action or command.
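The stages described above can be sketched as a chain of small functions. This is a toy outline, not a real vision system: the frame is a tiny grid of grayscale values, the single "coverage" feature, the rule inside `classify`, and the gesture-to-command table are all hypothetical stand-ins for what a trained model and a real application would provide.

```python
def preprocess(frame, threshold=128):
    """Pre-processing: binarize a grayscale frame (rows of 0-255 values)."""
    return [[1 if pixel >= threshold else 0 for pixel in row] for row in frame]

def extract_features(mask):
    """Feature extraction: fraction of foreground pixels (one toy feature)."""
    total = sum(len(row) for row in mask)
    foreground = sum(sum(row) for row in mask)
    return foreground / total

def classify(coverage):
    """Classification: a hand-picked rule standing in for a trained model."""
    return "open_palm" if coverage > 0.5 else "fist"

# Post-processing: hypothetical mapping from gesture class to command.
GESTURE_COMMANDS = {"open_palm": "pause", "fist": "play"}

def recognize(frame):
    """Run one frame through all stages and return the resulting command."""
    mask = preprocess(frame)
    coverage = extract_features(mask)
    gesture = classify(coverage)
    return GESTURE_COMMANDS[gesture]

# Stand-in for a frame delivered by the visual sensor.
frame = [
    [200, 210, 190, 30],
    [220, 230, 200, 20],
    [210, 205, 40, 10],
]
print(recognize(frame))  # pause
```

Keeping each stage in its own function mirrors the modular structure described in the text, so any stage can be swapped out independently.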

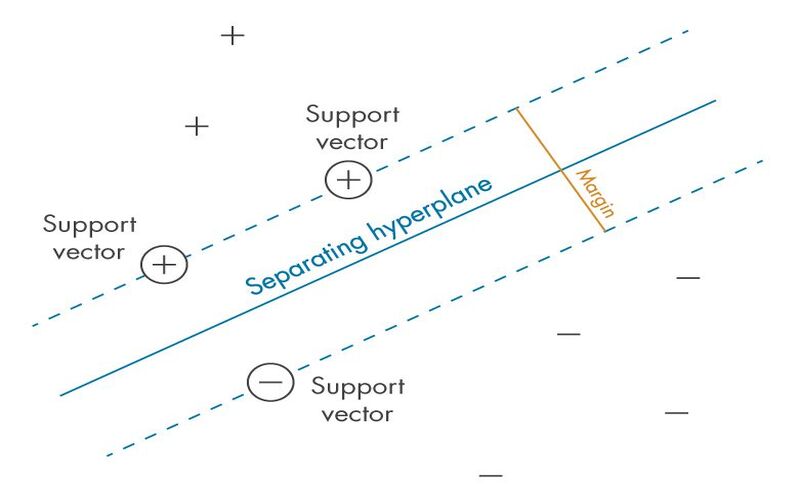

3. Support Vector Machine (SVM)

SVM chooses the extreme points and vectors that help create the hyperplane. These extreme cases are called support vectors, and they form the basis of the SVM approach. The SVM algorithm aims to find the best decision boundary, or hyperplane, that separates n-dimensional space into classes so that new data points can readily be placed in the correct class in the future.
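To make the idea of a separating hyperplane concrete, here is a minimal sketch of a linear SVM in two dimensions, trained with stochastic sub-gradient descent on the regularized hinge loss. The data points, learning rate, regularization strength, and epoch count are assumptions for illustration. A real project would normally rely on an established library rather than this hand-written loop.

```python
def train_linear_svm(points, labels, lr=0.1, reg=0.01, epochs=200):
    """Fit w and b so that sign(w . x + b) separates the two classes.

    Labels must be +1 or -1. Each step follows the sub-gradient of
    0.5 * reg * |w|^2 + max(0, 1 - y * (w . x + b)).
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in zip(points, labels):
            margin = y * (w[0] * x1 + w[1] * x2 + b)
            # Regularization step: shrink w, which favors a wide margin.
            w[0] -= lr * reg * w[0]
            w[1] -= lr * reg * w[1]
            if margin < 1:
                # Point is inside the margin or misclassified: push it out.
                w[0] += lr * y * x1
                w[1] += lr * y * x2
                b += lr * y
    return w, b

def predict(w, b, point):
    """Return +1 or -1 depending on the side of the hyperplane."""
    score = w[0] * point[0] + w[1] * point[1] + b
    return 1 if score >= 0 else -1

points = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -3), (-2, -3)]
labels = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(points, labels)
print([predict(w, b, p) for p in [(4, 4), (-4, -1)]])  # [1, -1]
```

In this toy data set the points closest to the boundary, (2, 2) and (-2, -2), are the ones that trigger updates, which is the role the text assigns to support vectors.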

4. Neural Networks

Convolutional neural networks (CNNs) are a class of deep-learning neural networks used to analyze images and videos. A CNN comprises an input layer, hidden layers, and an output layer. Backpropagation is used to train the network by adjusting its weights to reduce the prediction error. The network is trained and validated so that the computer recognizes human gestures; page turning and zooming in and out are examples of such interactions.
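The operation that gives CNNs their name can be shown in a few lines. The sketch below slides a small filter over a tiny image and applies a ReLU activation, which is roughly what a single filter in one hidden layer computes. The image and the edge-detecting kernel are hand-picked assumptions. In a trained network the kernel values would be learned through backpropagation.

```python
def conv2d(image, kernel):
    """2D cross-correlation without padding, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            # Weighted sum of the image patch under the kernel.
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0, value) for value in row] for row in feature_map]

# Dark region on the left, bright region on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Responds where brightness increases from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
print(relu(conv2d(image, vertical_edge)))  # [[0, 2, 0], [0, 2, 0]]
```

The non-zero column in the output marks the position of the vertical edge, the kind of low-level feature that later layers combine into shapes such as fingers or a palm.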

5. Applications Of Hand Gesture Recognition Technology

Hand gesture recognition (HGR) technology has begun to spread across multiple industries as advances in computer vision, sensors, machine learning, and deep learning have made it more accessible and accurate. The top four sectors adopting hand tracking and gesture recognition are automotive, healthcare, virtual reality, and consumer electronics.

6. Non-standard Backgrounds

Gesture recognition should deliver good results regardless of the background. It should work whether in the car, at home, or walking down the street. Machine learning lets you continuously teach the system to distinguish the hand from the background.
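One classical way to separate a moving hand from its surroundings is background subtraction with a running average. It is shown here as a simple stand-in for the learned approach the text mentions, not as the method a production system would necessarily use. The blending factor `alpha`, the difference threshold, and the tiny frames are assumptions for this sketch.

```python
def update_background(background, frame, alpha=0.1):
    """Blend the new frame into the running-average background model."""
    return [
        [(1 - alpha) * b + alpha * f for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]

def foreground_mask(background, frame, threshold=50):
    """Mark pixels that differ from the background by more than `threshold`."""
    return [
        [1 if abs(f - b) > threshold else 0 for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]

empty_scene = [[100, 100, 100], [100, 100, 100]]
with_hand = [[100, 220, 100], [100, 230, 100]]  # bright column = hand

# Start from the first frame and keep adapting as new frames arrive.
background = [[float(p) for p in row] for row in empty_scene]
background = update_background(background, empty_scene)

print(foreground_mask(background, with_hand))  # [[0, 1, 0], [0, 1, 0]]
```

Because the background model keeps updating, slow changes such as shifting light are absorbed over time, while a hand that suddenly enters the frame still stands out.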

7. Recognizing Users’ Intentions

Studies of users show that there is a general, intuitive sense of hand gesture directions and commands. For instance, moving your hand up means increasing, and moving it down means decreasing. Likewise, moving right means next, and moving left means previous. In practice, users who were asked to lower a level did so by sliding their hands down, making rotating movements with their fingers, dragging imaginary sliders from right to left, or even pressing invisible buttons, similar to how they would use a remote control.
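The intuitive mapping described above (up to increase, down to decrease, right for next, left for previous) can be written down directly. In this sketch the function names, the pixel coordinates, and the 20-pixel minimum distance are assumptions. The start and end positions would come from whatever hand tracker the system uses.

```python
SWIPE_COMMANDS = {
    "up": "increase",
    "down": "decrease",
    "right": "next",
    "left": "previous",
}

def swipe_direction(start, end, min_distance=20):
    """Classify a hand movement between two (x, y) image positions.

    Image y grows downward, so a negative dy means the hand moved up.
    Returns None when the movement is shorter than `min_distance` pixels.
    """
    dx = end[0] - start[0]
    dy = end[1] - start[1]
    if max(abs(dx), abs(dy)) < min_distance:
        return None
    # The dominant axis decides whether the swipe is horizontal or vertical.
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"

def command_for(start, end):
    """Map a movement to its command, or None if no swipe was detected."""
    return SWIPE_COMMANDS.get(swipe_direction(start, end))

print(command_for((100, 200), (100, 80)))  # increase
print(command_for((50, 100), (180, 110)))  # next
```

The minimum distance keeps small, unintentional hand movements from triggering a command.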

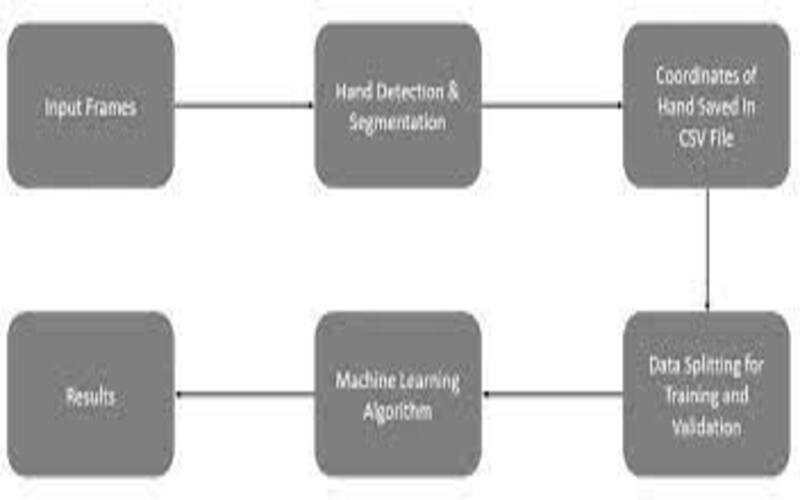

8. Building The Model

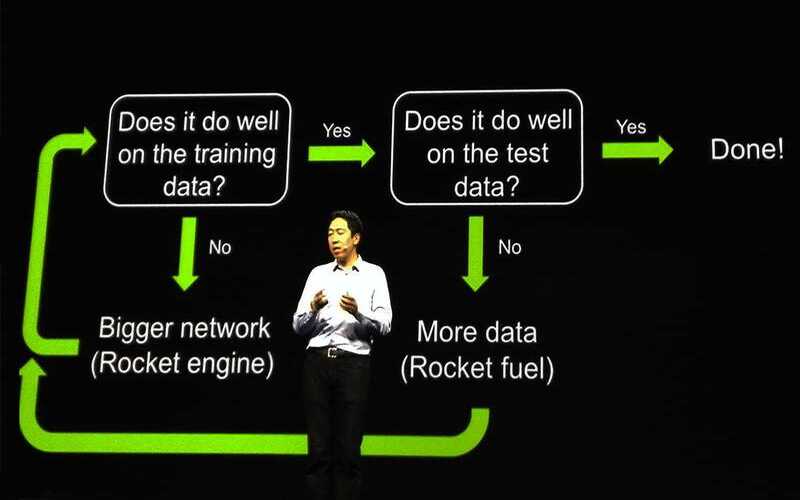

Different forms of machine learning take different approaches to training the model, and most models start out in different ways. Algorithms require large quantities of high-quality data to be trained effectively. There are various ways to prepare this data so that the model can be as effective as possible. The whole project must be planned and organized correctly from the start so that the model fits the organization’s particular requirements. The first step is therefore to examine the context within the organization. Once the data has been gathered, the deep learning model is built.

9. Training And Testing

Training and testing procedures for the classification of biomedical datasets in machine learning are essential. Practitioners should choose carefully the strategy to use at each step. During the training stage, the model receives the training subset, from which it learns the images and their meaning. In the testing stage, the model is run on the testing dataset to analyze how well it works and to measure the accuracy of its predictions.
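The split between a training stage and a testing stage can be sketched with a very small classifier. The example below uses a nearest-centroid rule on made-up two-dimensional feature vectors instead of images, purely to keep it short. The data, the 25% test ratio, and all function names are assumptions for illustration.

```python
import random

def train_test_split(samples, test_ratio=0.25, seed=0):
    """Shuffle the samples and return (training subset, testing subset)."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_ratio))
    return shuffled[n_test:], shuffled[:n_test]

def train_centroids(train):
    """Training stage: compute the mean feature vector of each class."""
    sums, counts = {}, {}
    for features, label in train:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def predict(centroids, features):
    """Return the label whose centroid is closest to `features`."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))

def accuracy(centroids, test):
    """Testing stage: fraction of held-out samples predicted correctly."""
    correct = sum(1 for features, label in test if predict(centroids, features) == label)
    return correct / len(test)

# Made-up, well-separated feature vectors for two gesture classes.
data = [([0.1 * i, 0.2], "fist") for i in range(8)]
data += [([5.0 + 0.1 * i, 4.8], "open_palm") for i in range(8)]

train, test = train_test_split(data)
centroids = train_centroids(train)
print(len(train), len(test))      # 12 4
print(accuracy(centroids, test))  # 1.0
```

The model never sees the testing subset during training, which is what makes the measured accuracy a fair estimate of how it would behave on new gestures.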

10. The Recognition Stage

The recognition stage follows and is typically regarded as the final stage of gesture control in VR systems. After successful feature extraction following image tracking, the recognized features of a gesture are stored in a system that uses complex neural networks or decision trees, and the command or meaning of the gesture is declared. The gesture is then officially recognized, and the classifier can assign each input test movement to its gesture class.
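As a small illustration of the decision-tree option mentioned above, the sketch below assigns a gesture class from two already-extracted features. The features (number of extended fingers and horizontal displacement in pixels), the 30-pixel threshold, and the class names are hypothetical. In practice the tree would be learned from labeled data rather than written by hand.

```python
def classify_gesture(extended_fingers, horizontal_motion, motion_threshold=30):
    """Walk a tiny hand-written decision tree and return a gesture class.

    `extended_fingers` is a count from 0 to 5; `horizontal_motion` is the
    hand's displacement in pixels (positive means toward the right).
    """
    # First split: how many fingers are extended?
    if extended_fingers == 0:
        return "fist"
    if extended_fingers == 1:
        return "point"
    # Second split: is an open hand moving sideways?
    if abs(horizontal_motion) > motion_threshold:
        return "swipe_right" if horizontal_motion > 0 else "swipe_left"
    return "open_palm"

test_movements = [(0, 0), (1, 5), (5, 60), (5, -60), (4, 5)]
for fingers, motion in test_movements:
    print(fingers, motion, classify_gesture(fingers, motion))
```

Each path from the first test to a returned label corresponds to one gesture class, which is how a classifier of this kind assigns every input movement to exactly one class.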