Last Wednesday, at its annual I/O Developer Conference 2017, Google announced that 2 billion devices now run Android, a huge jump from 1.4 billion in 2015. By comparison, Apple’s iOS runs on roughly 1.7 billion iPads and iPhones.

Android is not just on smartphones. It is installed almost everywhere: in cars, watches, televisions, and all sorts of other devices. That is why Google is flaunting new figures for all of its Android offshoots. Android Wear, a wrist-based OS, runs on nearly 50 different branded watches; Android Auto, software for your car’s in-dash display, is available in over 300 car models from makers such as Audi and Volvo; Android TV, Google’s smart TV platform, has more than 3,000 TV apps and gains 1 million new activations every two months; and Android Things, a platform for building Internet of Things devices, has thousands of developers worldwide.

Google dominates the mobile world: nine out of ten smartphones shipped worldwide run Android. Expanding Android’s reach is crucial because it is the gateway drug to Google’s services such as Gmail, Google Maps, YouTube, and its search engine. To extend that reach, Google has set its sights on almost everything, from staking out a spot in your living room to reaching people in remote countries.

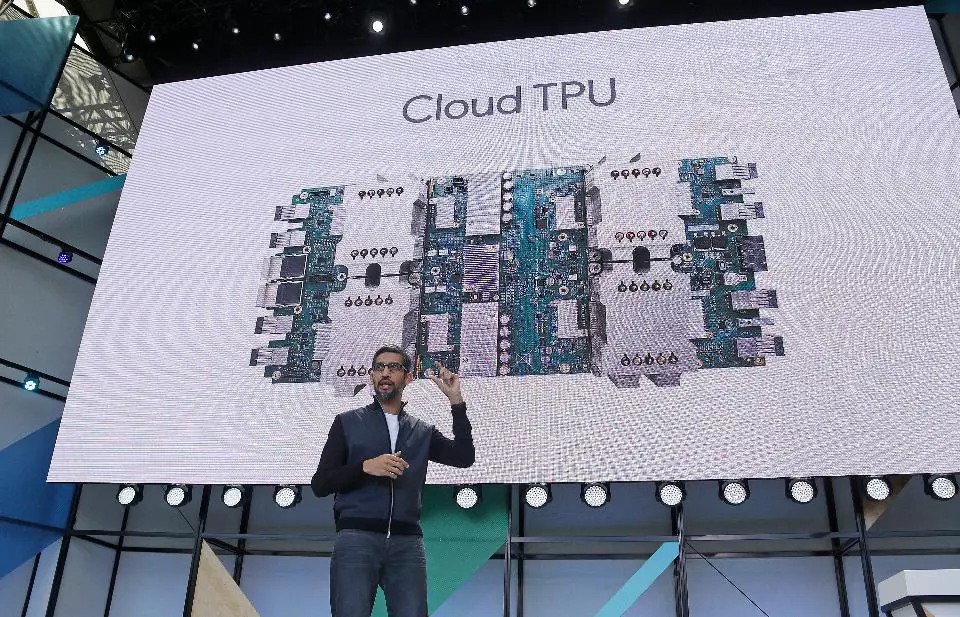

Google CEO Sundar Pichai delivered the keynote at this year’s Google I/O developer conference, which was followed by technical sessions throughout the three-day event. He reiterated last year’s message about Google’s shift from a mobile-first to an AI-first company.

The first thing he announced was Google Lens, which lets users point their phone’s camera at objects and places and get relevant details about them, such as the name of a place, its business listing, and customer ratings. The idea is similar to Google Goggles and Samsung’s Bixby Vision. Google Lens also integrates with Google Assistant, for example to pull useful details out of an image and drop them into your calendar, or to help with translations.

Pichai also introduced Cloud TPUs, the second generation of Google’s Tensor Processing Units (TPUs), offered through Google Cloud. Google stated that they will speed up machine learning workloads: whereas the first-generation TPU handled only inference, the new chips handle both training and inference, with each board delivering a staggering 180 teraflops. The boards can also be combined into pods of 64 TPUs, which deliver up to 11.5 petaflops of peak performance.
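A quick sanity check shows where the pod figure comes from, assuming each of the 64 units in a pod is one of the 180-teraflop boards described above and using 1 petaflop = 1,000 teraflops:

$$
64 \times 180\ \text{TFLOPS} = 11{,}520\ \text{TFLOPS} \approx 11.5\ \text{PFLOPS}
$$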

Meanwhile, Scott Huffman, Google’s VP of Engineering for the Assistant, spoke about the latest updates to Google Assistant, touting its improved conversational abilities. The AI-powered Assistant is the company’s answer to Siri and Cortana, and starting now it is available on Apple’s iPhones for the first time. Google will also soon add hands-free calling to Google Home, letting users call any number in the U.S. and Canada without any setup, not even a phone. Users will also be able to get visual responses to their queries, shown on a TV through Chromecast. For consumers, this AI strategy seems more compelling than Facebook’s, but it is also somewhat unnerving, because to work well it needs heaps of personal information.

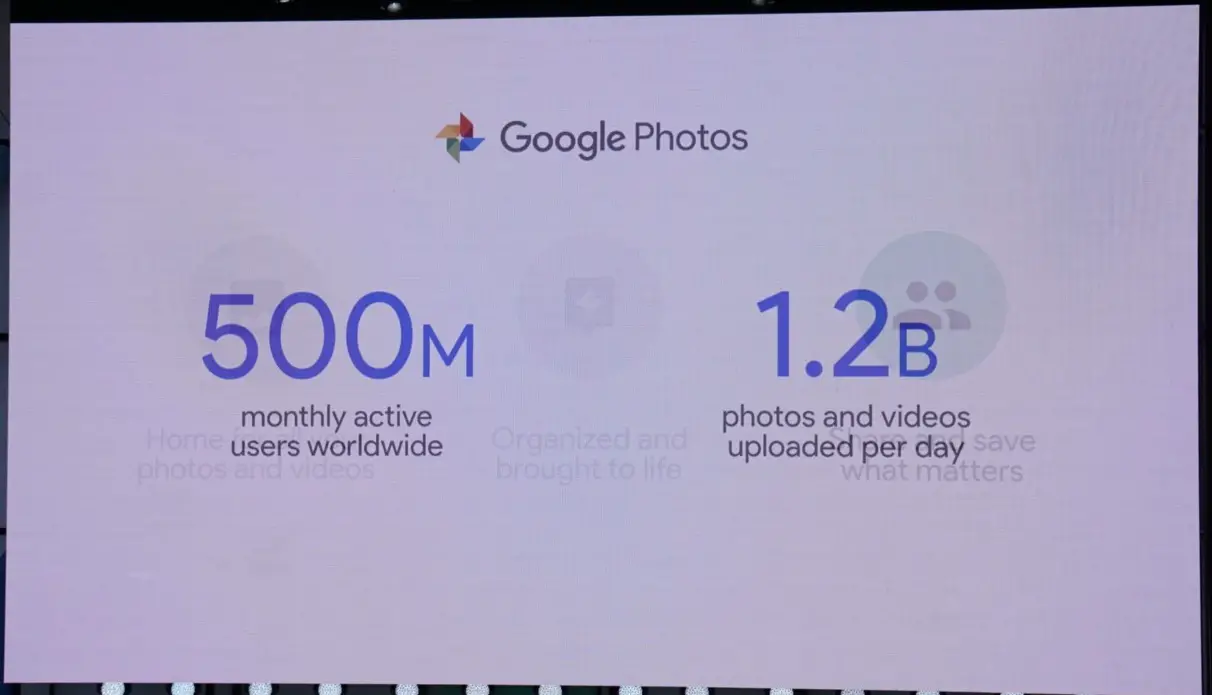

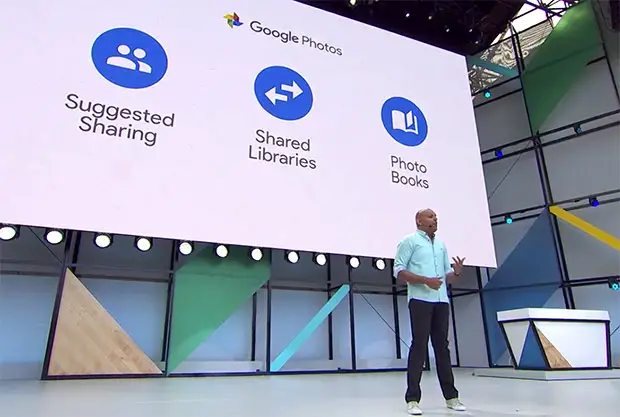

Anil Sabharwal, Google’s VP of Photos, took some time on stage to discuss how far Google Photos has come since its launch. It now has about 500 million monthly active users, who upload about 1.2 billion photos and videos every day worldwide.

He then announced three significant new features for Google Photos. The first is Suggested Sharing, which uses AI to identify photos you are likely to want to share and suggests recipients based on who appears in them. With a single tap, you can share the resulting album with whomever you like, and recipients can even add their own photos to it. The feature is available to both iOS and Android users, and people without a Google Photos account can still view what you share.

Another new feature, Shared Libraries, serves a similar purpose but works automatically. It lets you share your photo library with someone on an ongoing basis: you can share all of it or only part of it, and choose the date from which sharing starts. The person you share with will then automatically see all future photos you add. You can also choose to share only photos of specific people, or only photos from a certain date onward.

The third feature is Photo Books, which lets users design and order high-quality printed photo books directly from Google Photos. Google Photos helps users pick only their best shots by sorting their photos and weeding out duplicates and low-quality images.

Also at the event was Android’s VP of Engineering, Dave Burke, who took the stage to talk about the upcoming Android O. Burke said the new OS is all about delivering a more “fluid experience” on Android devices while also improving security and battery life. Part of that “fluid experience” is picture-in-picture, a feature that lets users do two things at once, such as staying on a video call while checking their inbox. He also showed off Smart Text Selection, which uses machine learning to recognize meaningful groups of text (such as an address) and selects them without any fiddling with the infuriating text handles, which I think is a boon for users with big fingers.

Android O also offers faster startup times than previous versions of Android and includes several optimizations to improve battery life. Burke also announced TensorFlow Lite, a version of TensorFlow optimized for mobile: a lighter, more compact runtime for apps that use machine learning.

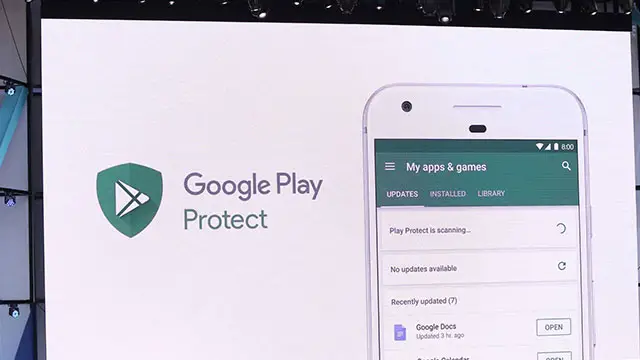

Google Play Protect was also announced at the event. It is a comprehensive security service for Android, built into every device that has Google Play. According to Google, Play Protect automatically scans for threats and takes appropriate action when it detects one, and it uses machine learning to keep up with new and emerging threats.

Google also previewed Android Go, which is built to improve the experience on entry-level devices. Go is a lightweight version of the next Android release, with an optimized Play Store and apps. It targets devices with low specifications and users with limited data connectivity, and it offers multilingual support. It can run on devices with 1GB of memory or less.

Mike Jazayeri, Director of Product Management for Daydream, announced the next version of the Daydream VR platform, codenamed Euphrates. The company said the update will make VR more fun and your experiences easier to share, for example by casting them directly to your smart TV. Google also announced standalone Daydream headsets, which don’t require a phone or PC but keep all the benefits of smartphone VR. Google also has Tango, a technology that lets devices track motion and understand positions and distances in the physical world. Through WorldSense, Google will use Tango for positional tracking in VR. For AR, Google will use Tango to power its new Visual Positioning Service (VPS), which goes beyond GPS by helping a device pinpoint its location even indoors. This could, for example, help visually impaired people navigate.

Google unveiled heaps of other new features and services at this year’s I/O. For more details, you can watch the full keynote below: