A prompt is a piece of contextual, plain-language text pertinent to a given task. For instance, given the movie review “worth watching”, engineers can append the prompt template “It’s [blank].” to form “Worth watching. It’s [blank].” and ask a large language model to fill in the blank. If they provide enough context, engineers can reuse the same pretrained model to predict whether the blank should contain the words “recommended” or “not recommended”.
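The template-plus-answer idea above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `toy_score` is a hypothetical keyword-based stand-in for a real language model’s score of an answer word in the blank, and `TEMPLATE`, `VERBALIZER`, and `classify` are illustrative names, not part of any library.

```python
from typing import Callable, Dict

# Prompt template with a blank the model is asked to fill.
TEMPLATE = "{text} It's [blank]."
# Maps each task label to the answer word that would fill the blank.
VERBALIZER: Dict[str, str] = {"positive": "recommended", "negative": "not recommended"}

# Hypothetical cue lists used only by the toy stand-in model below.
POSITIVE_CUES = {"worth", "great", "loved"}
NEGATIVE_CUES = {"boring", "waste", "awful"}


def toy_score(prompt: str, answer: str) -> float:
    """Hypothetical stand-in for a language model's score of `answer` in the blank."""
    words = set(prompt.lower().replace(".", " ").split())
    sentiment = len(words & POSITIVE_CUES) - len(words & NEGATIVE_CUES)
    return sentiment if answer == "recommended" else -sentiment


def classify(text: str, score_fn: Callable[[str, str], float]) -> str:
    """Wrap the input in the template and return the label whose answer fits best."""
    prompt = TEMPLATE.format(text=text)  # e.g. "Worth watching. It's [blank]."
    scores = {label: score_fn(prompt, answer) for label, answer in VERBALIZER.items()}
    return max(scores, key=scores.get)


label = classify("Worth watching.", toy_score)
```

In a real system, `score_fn` would be replaced by a model call that rates each answer word at the blank position; the template and the label-to-answer mapping stay the same.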

What is Prompt-Based Learning?

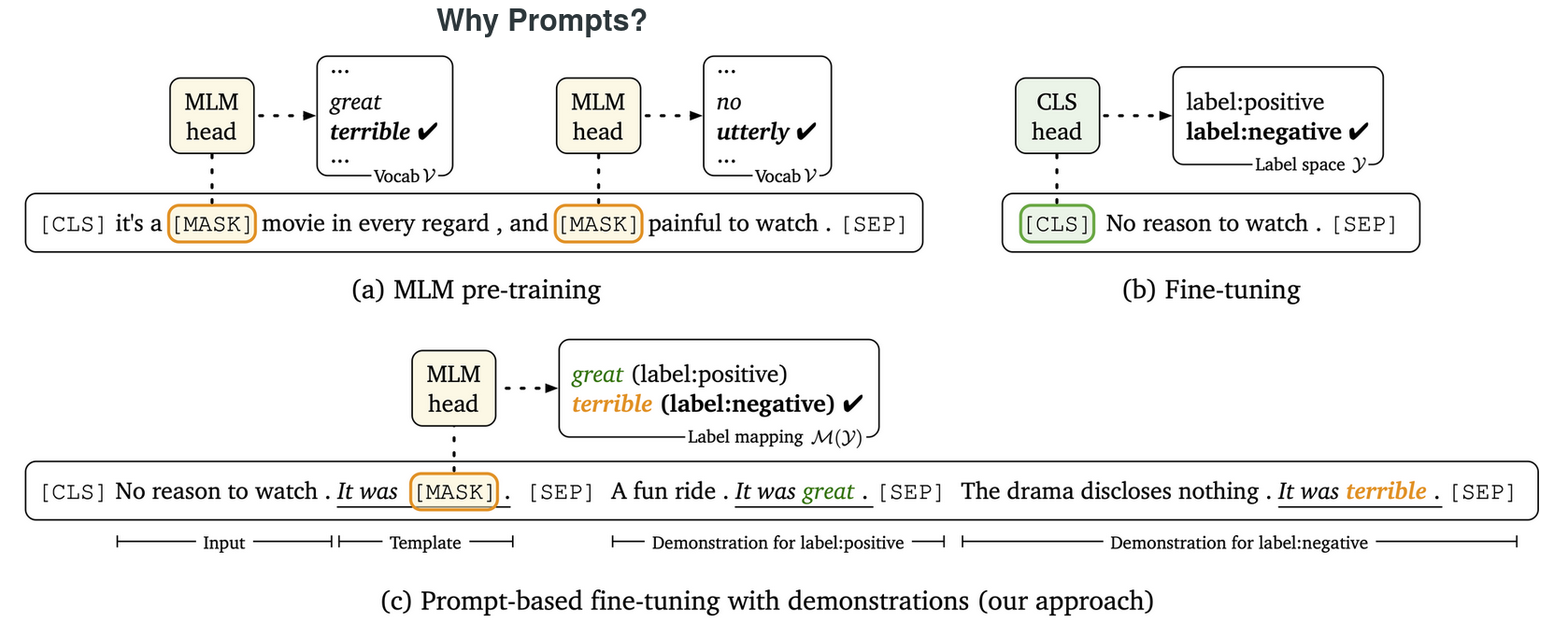

Prompt-based learning is a new class of techniques for training ML models. When prompting, users state directly in natural language the task they want the pre-trained language model to understand and complete. In contrast, conventional Transformer training methods pre-train models on unlabeled data and then fine-tune them on labeled data for the desired downstream task. A prompt is essentially a user-written natural-language instruction that the model is supposed to follow or complete. Depending on how complex the task is, multiple prompts may be needed. Prompt engineering is the process of selecting the best series of prompts for the desired task.
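One way to read “a series of prompts” is as a chain in which each prompt’s output feeds the next. The sketch below assumes a hypothetical `fake_generate` function standing in for a real language model call; `run_prompt_chain` and the example templates are illustrative only.

```python
from typing import Callable, List


def run_prompt_chain(
    templates: List[str], user_input: str, generate: Callable[[str], str]
) -> List[str]:
    """Feed each step's output into the next template; return every intermediate output."""
    outputs: List[str] = []
    current = user_input
    for template in templates:
        prompt = template.format(input=current)  # fill the {input} slot
        current = generate(prompt)  # ask the (stand-in) model
        outputs.append(current)
    return outputs


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a language model call; it just tags the prompt."""
    return f"<answer to: {prompt}>"


templates = [
    "Summarize this review in one sentence: {input}",
    "Is the following summary positive or negative? {input}",
]
steps = run_prompt_chain(templates, "Worth watching.", fake_generate)
```

Prompt engineering in this setting means trying different templates and orderings for each step and keeping the chain that performs best on the task.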

Challenges of Prompt-Based Learning

Prompt-based learning makes possible the transition between a model’s pretraining stage and its application to numerous downstream tasks. Nevertheless, alongside its benefits, prompt-based learning has some drawbacks.

1. Language Processing

First, foundation models give an AI system the ability to support tasks such as language processing and computer vision. In NLP, they also concentrate development around a few large pretrained models that can generate human-like language. In addition, models like GPT-3 can adapt quickly to new situations and to novel ways of framing language problems.

2. Masking: Smart Work vs. Hard Work

You can either work harder or work smarter, and deep learning NLP models are no exception. The hard-work approach improves a model’s performance by combining a simple Transformer architecture with a very large amount of data; models like GPT-3 work this way, relying on a staggering number of parameters. Alternatively, you can change the training strategy to “force” the model to learn more from less. Models like BERT do this with masking: some input tokens are hidden, and the model is trained to predict them. Once you understand that method, you can use it to examine how other models are trained.
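The masking strategy BERT uses can be sketched as follows. As described in the BERT paper, about 15% of token positions are selected for prediction; of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. This sketch selects each position independently with probability `mask_prob` (a common simplification rather than a fixed count per sequence), works on whitespace tokens rather than WordPiece subwords, and uses the hypothetical helper name `bert_mask`.

```python
import random
from typing import List, Optional, Sequence, Tuple

MASK_TOKEN = "[MASK]"


def bert_mask(
    tokens: Sequence[str],
    vocab: Sequence[str],
    mask_prob: float = 0.15,
    seed: Optional[int] = None,
) -> Tuple[List[str], List[Optional[str]]]:
    """Return (model inputs, prediction targets); targets are None where no loss is computed."""
    rng = random.Random(seed)
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected: no prediction target here
        labels[i] = token  # the model must recover the original token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK_TOKEN  # 80%: hide the token
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


masked, targets = bert_mask("the cat sat on the mat".split(), vocab=["dog", "ran", "red"], seed=42)
```

Keeping or randomizing some selected tokens means the model cannot assume that only `[MASK]` positions matter, which is part of what “forces” it to learn richer representations from the same data.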

3. Visual Comprehension

Foundation models in computer vision also transform raw, unprocessed data from various sources into useful representations. In other words, they help machines understand the visual environment by detecting objects, recognizing actions, and classifying images. This is a notable advantage, since vision is one of a person’s primary senses, and replicating this capability on a machine has been revolutionary.

4. Human Engagement

Foundation models also frequently help programmers build better AI applications that improve user interactions. Even with advances in machine learning, creating new interactive AI from scratch can be very time-consuming, so foundation models offer ready-made capabilities and solutions for development. As a result, applications can focus on the end user’s interaction, supporting human agency and imitating human manners.

5. Designing Effective Prompts

Researchers have proposed both manual and automated methods for creating prompts; however, these methods require a person training the AI model who is familiar with how it operates, as well as a trial-and-error strategy. So far, prompt-based learning has been studied in only a few application domains. Other areas, such as text analysis, information extraction, and analytical reasoning, would require more sophisticated prompt design techniques.

6. The Right Combination of Prompt Templates and Answers

Prompt templates (like “It’s [blank].”) and the answer words that fill them (like “recommended” or “not recommended”) are crucial components of prompt-based learning. As a result, finding the best template and answer to use together is still hard and involves a lot of trial and error. Despite these obstacles, prompt-based learning is quickly becoming the next step in training foundation models. To explain why, however, we must zoom out slightly.
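The trial-and-error search over templates and answers can be automated as a small grid search scored on labeled examples. This is a sketch under loud assumptions: `toy_score` is a hypothetical stand-in model that only “understands” the answer words “great” and “terrible”, and `search`, `accuracy`, `predict`, and the example data are illustrative.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

Scorer = Callable[[str, str], float]

# Hypothetical toy "model": it only responds to these cues and answer words.
POSITIVE_CUES = {"worth", "great", "loved"}
NEGATIVE_CUES = {"boring", "waste", "awful"}
KNOWN_ANSWERS = {"great": 1, "terrible": -1}


def toy_score(prompt: str, answer: str) -> float:
    words = set(prompt.lower().replace(".", " ").split())
    sentiment = len(words & POSITIVE_CUES) - len(words & NEGATIVE_CUES)
    return sentiment * KNOWN_ANSWERS.get(answer, 0)  # unknown answers score 0


def predict(text: str, template: str, verbalizer: Dict[str, str], score_fn: Scorer) -> str:
    prompt = template.format(text=text)
    # Pick the label whose answer word scores highest; ties go to the first label.
    return max(verbalizer, key=lambda label: score_fn(prompt, verbalizer[label]))


def accuracy(template: str, verbalizer: Dict[str, str],
             examples: List[Tuple[str, str]], score_fn: Scorer) -> float:
    hits = sum(predict(t, template, verbalizer, score_fn) == y for t, y in examples)
    return hits / len(examples)


def search(templates: List[str], verbalizers: List[Dict[str, str]],
           examples: List[Tuple[str, str]], score_fn: Scorer):
    """Try every template/answer combination and return (accuracy, template, verbalizer)."""
    scored = [(accuracy(t, v, examples, score_fn), t, v) for t, v in product(templates, verbalizers)]
    return max(scored, key=lambda item: item[0])


templates = ["{text} It's [blank].", "{text} Overall, it was [blank]."]
verbalizers = [
    {"positive": "good", "negative": "bad"},
    {"positive": "great", "negative": "terrible"},
]
examples = [("Worth watching.", "positive"), ("A boring waste of time.", "negative")]
best_acc, best_template, best_verbalizer = search(templates, verbalizers, examples, toy_score)
```

With a real model, the same loop would compare candidate templates and answer words on a held-out labeled set. The number of combinations grows multiplicatively with each new candidate, which is one reason this step is costly.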

7. Health And Biomedicine

Tasks in healthcare and biomedical research require specialized knowledge, which is expensive and scarce. Foundation models therefore offer a wide range of opportunities for reviewing massive data sets. They can learn from these data sets and adapt effectively to new tasks. They can also help organizations improve the experience for both patients and healthcare professionals, and strengthen overall capabilities for tackling open-ended research problems and bottlenecks.

8. Fine-Tuning And Transfer Learning

The ability of BERT to be trained on various tasks and fine-tuned to particular domains is one of its main advantages.
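A minimal sketch of the transfer-learning idea: reuse a pretrained encoder’s features unchanged and train only a small new classification head for the target task (often called linear probing). Full fine-tuning, as usually done with BERT, would also update the encoder’s weights; that is omitted here. `frozen_encoder` is a hypothetical keyword-count stand-in for a real pretrained model, and the head is plain logistic regression trained with stochastic gradient descent.

```python
import math
from typing import List, Tuple


def frozen_encoder(text: str) -> List[float]:
    """Hypothetical stand-in for a pretrained encoder; it is never updated."""
    words = text.lower().replace(".", " ").split()
    pos = sum(w in {"worth", "great", "loved"} for w in words)
    neg = sum(w in {"boring", "waste", "awful"} for w in words)
    return [float(pos), float(neg), 1.0]  # constant 1.0 acts as a bias feature


def train_head(data: List[Tuple[str, int]], epochs: int = 200, lr: float = 0.5) -> List[float]:
    """Train only a logistic-regression head on top of the frozen features."""
    feats = [(frozen_encoder(text), label) for text, label in data]  # encoder runs once
    weights = [0.0] * len(feats[0][0])
    for _ in range(epochs):
        for x, y in feats:
            z = sum(w * xi for w, xi in zip(weights, x))
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of label 1
            for i, xi in enumerate(x):
                weights[i] -= lr * (p - y) * xi  # gradient step on the log loss
    return weights


def predict(weights: List[float], text: str) -> int:
    z = sum(w * xi for w, xi in zip(weights, frozen_encoder(text)))
    return 1 if z > 0 else 0


data = [("Worth watching.", 1), ("A boring waste of time.", 0), ("Loved it.", 1), ("Awful plot.", 0)]
weights = train_head(data)
```

Because only the small head is trained, adapting to a new domain needs far less labeled data and compute than training the encoder from scratch.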

9. Law

Lawyers and law firms frequently consult a wide range of data and expertise when working on legal questions. They must also draft long, logically structured texts and interpret ambiguous legal principles. Foundation models can play an important role in analyzing data from court cases and other legal documents.

10. Education

The education sector constantly develops and improves to meet the needs of students. It is also a subtle and complex field whose goal is to build students’ skills. Although the many different sources of information can make it challenging to develop foundation models for education, such models can still draw on crucial data across modalities. This opens the door for foundation models to support a variety of tasks in the education sector.