The human brain contains on the order of 100 billion interconnected neurons. Modeling a simplified version of this structure in software yields an artificial neural network. Deep learning is a subset of machine learning, and of artificial intelligence more broadly, that uses artificial neural networks loosely inspired by how the brain processes data. It performs sophisticated computations on large amounts of unstructured data for tasks such as decision making, object detection, speech recognition, and language translation. Neural networks consist of artificial neurons, or nodes, arranged in three kinds of layers: input, hidden, and output. The term "deep" refers to the number of hidden layers stacked between the input and the output to produce the final result. Here, we will learn the top 10 deep learning algorithms used to perform these computational tasks.

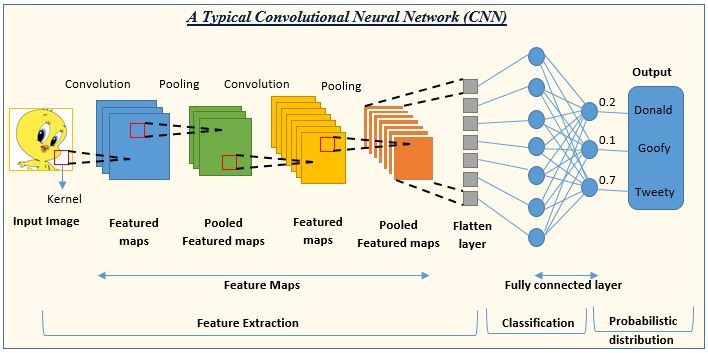

1. Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs), also known as ConvNets, are popular deep learning models used for image processing and object detection. They have multiple layers that process and extract features from data. The convolution layer applies several filters to the input to produce feature maps. A Rectified Linear Unit (ReLU) activation then operates on each element and outputs a rectified feature map. The rectified feature map goes to the pooling layer, which downsamples it, and the pooled result is flattened into a single, continuous, linear vector.

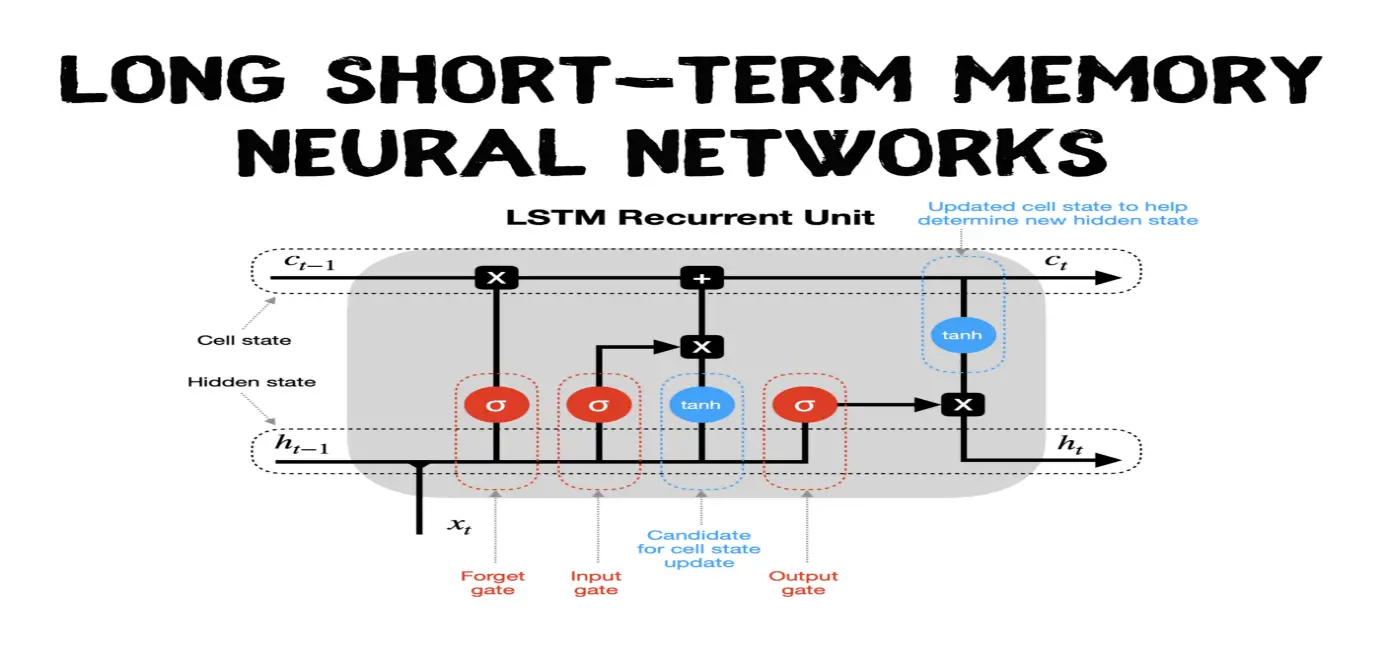
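
The pipeline described above (convolution, ReLU, pooling, flattening) can be sketched in a few lines of plain Python. This is a minimal illustration rather than a practical implementation: the 5x5 `image`, the hand-picked edge-detecting `kernel`, and all function names are assumptions made for this example, and a real CNN would learn its filters during training.

```python
def conv2d(image, kernel):
    """Stride-1 convolution without padding (cross-correlation, as in most CNN libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Replace every negative value with zero (the rectified feature map)."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size block."""
    return [
        [
            max(feature_map[i + a][j + b]
                for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


def flatten(feature_map):
    """Turn the 2D pooled map into one flat vector."""
    return [v for row in feature_map for v in row]


# Hypothetical toy image: a bright vertical stripe in columns 2 and 3.
image = [[0, 0, 1, 1, 0] for _ in range(5)]
# Hand-picked filter that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = conv2d(image, kernel)   # positive at the left edge, negative at the right
features = flatten(max_pool(relu(feature_map)))
print(features)
```

The ReLU step removes the negative response at the bright-to-dark edge, and pooling shrinks the 4x4 map to 2x2 before flattening.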

2. Long Short-Term Memory Networks (LSTMs)

Long Short-Term Memory Networks (LSTMs) are a type of RNN used for time-series prediction, speech recognition, language modeling, translation, music composition, and pharmaceutical development. They can learn long-term dependencies and recall past information over long periods. They have a chain-like structure in which each repeating cell contains four interacting layers: a forget gate, an input gate, a candidate (tanh) layer, and an output gate. LSTMs forget the irrelevant parts of the previous state, selectively update the cell-state values, and output selected parts of the cell state.

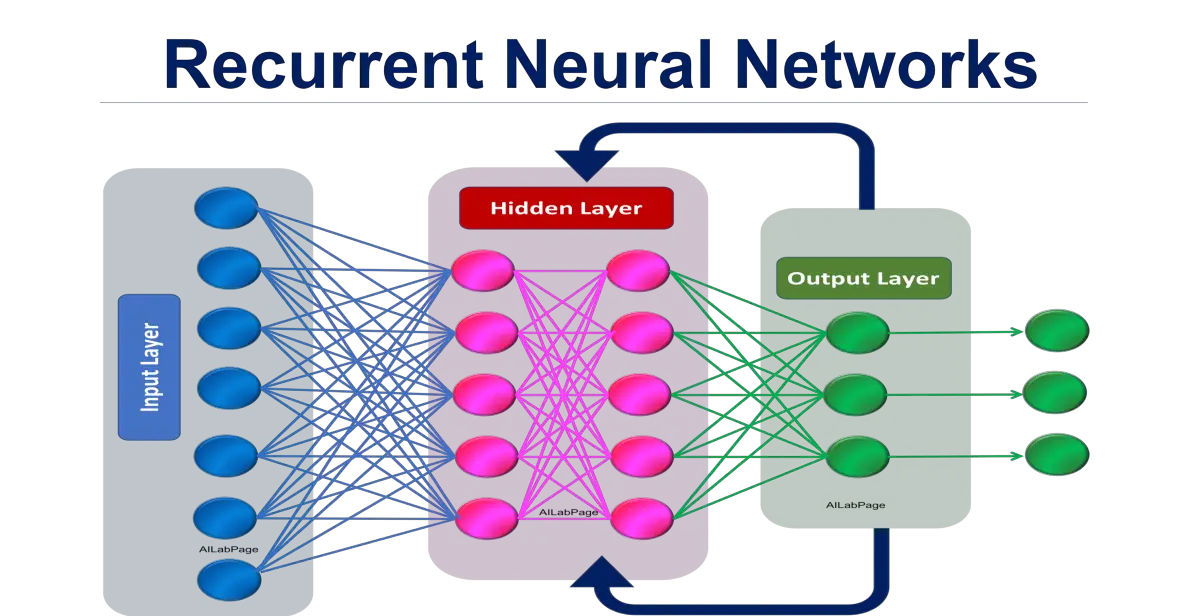
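
As a rough sketch of how the gates interact, here is one time step of a single-unit LSTM cell written with scalars. The parameter names (`wf`, `uf`, `bf`, and so on) and their values are made up for illustration; a real LSTM uses weight matrices learned from data.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM cell with scalar input and state."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate values
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    c = f * c_prev + i * g   # drop part of the old cell state, add new information
    h = o * math.tanh(c)     # expose a filtered view of the cell state
    return h, c


# Hypothetical parameters, chosen by hand rather than learned.
params = {
    "wf": 0.5, "uf": 0.1, "bf": 1.0,
    "wi": 0.6, "ui": 0.2, "bi": 0.0,
    "wg": 0.9, "ug": 0.3, "bg": 0.0,
    "wo": 0.4, "uo": 0.1, "bo": 0.0,
}

h, c = 0.0, 0.0
for x in [1.0, 0.5, -0.3, 0.0]:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

Setting the forget-gate bias very high and the input-gate bias very low makes the cell copy its state forward almost unchanged, which is the mechanism behind the long-term memory described above.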

3. Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are artificial neural networks used for image captioning, time-series analysis, natural language processing, handwriting recognition, and machine translation. Their connections form cycles: the output (hidden state) of the previous time step is fed back as an input to the current step, which gives the network an internal memory of earlier inputs. RNNs can process inputs of different lengths. Each step's computation can draw on information from earlier steps, and because the same weights are reused at every step, the model size does not grow with the length of the input.

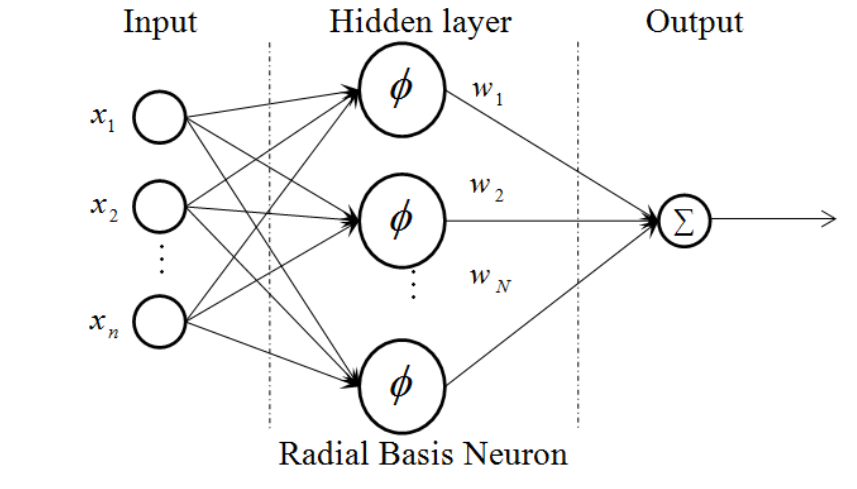
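
The recurrence can be sketched with a single-unit RNN. The weights `W_X`, `W_H`, and `B` below are arbitrary illustrative values, not learned ones; the point is that the same three numbers handle sequences of any length.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Run a single-unit vanilla RNN over a sequence and return every hidden state."""
    h = h0
    states = []
    for x in inputs:
        # The previous hidden state h is fed back in alongside the new input x.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical parameters shared by every time step.
W_X, W_H, B = 0.8, 0.5, 0.0

short_states = rnn_forward([1.0, 0.0, 0.0], W_X, W_H, B)
long_states = rnn_forward([1.0, 0.0, 0.0, 0.5, -0.5, 1.0], W_X, W_H, B)
print(short_states)
print(long_states)
```

In the short sequence, only the first input is nonzero, yet the last hidden state is still nonzero: the network carries a fading trace of the earlier input forward.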

4. Radial Basis Function Networks (RBFNs)

Radial Basis Function Networks (RBFNs) are feed-forward neural networks used for function approximation, classification, regression, and time-series prediction. They consist of input, hidden, and output layers. Data passes from the input layer to a hidden layer whose neurons use radial basis functions as activation functions: each hidden neuron responds according to the distance between the input and that neuron's center, often through a Gaussian. The output layer then computes a weighted sum of these activations, so the output is a linear combination of the radial basis functions of the input and the neurons' parameters.

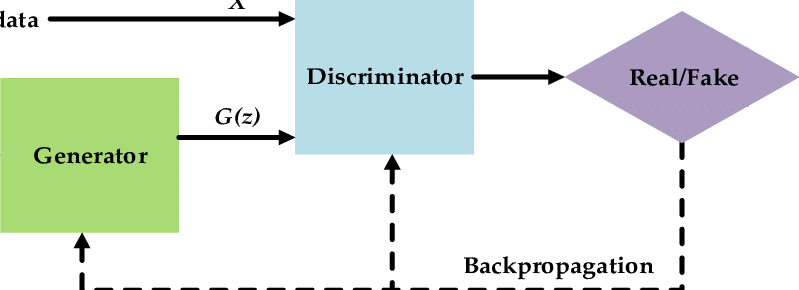
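
A minimal sketch of the forward pass, assuming Gaussian basis functions. The centers, widths (`betas`), output weights, and bias are hand-picked for an XOR-style toy problem and are not the result of training.

```python
import math


def rbf(x, center, beta):
    """Gaussian radial basis function: 1 at the center, decaying with distance."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, center))
    return math.exp(-beta * squared_distance)


def rbfn_predict(x, centers, betas, weights, bias):
    """Hidden layer of RBF activations followed by a linear output layer."""
    activations = [rbf(x, c, b) for c, b in zip(centers, betas)]
    return sum(w * a for w, a in zip(weights, activations)) + bias


# Hypothetical parameters for an XOR-like task (positive output = "inputs differ").
centers = [[0.0, 0.0], [1.0, 1.0]]
betas = [1.0, 1.0]
weights = [-1.0, -1.0]
bias = 0.95

for point in ([0, 0], [0, 1], [1, 0], [1, 1]):
    score = rbfn_predict(point, centers, betas, weights, bias)
    print(point, round(score, 3), "class", int(score > 0))
```

XOR is not linearly separable in the raw inputs, but it becomes separable in the space of RBF activations, which is why a purely linear output layer is enough here.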

5. Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are generative deep learning algorithms that create new data instances resembling the training data. A GAN has two components: a Generator that learns to produce fake data, and a Discriminator that learns to tell real data from the generator's fakes. GANs are used to improve astronomical images and to simulate gravitational lensing for dark-matter research. They can also create realistic images and cartoon characters, generate photographs of human faces, and render 3D objects.

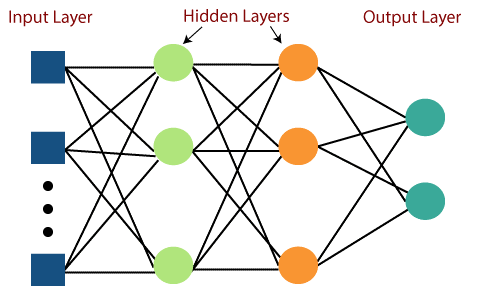
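
The opposing goals of the two components can be illustrated by computing their losses once, without any training loop. Everything here is a simplifying assumption: one-dimensional data, a linear generator, a logistic discriminator, fixed sample values, and the commonly used non-saturating generator loss.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def generator(z, a, b):
    """Map a noise value z to a fake data point (hypothetical linear generator)."""
    return a * z + b


def discriminator(x, w, c):
    """Estimated probability that x is real (hypothetical logistic discriminator)."""
    return sigmoid(w * x + c)


def gan_losses(real, noise, gen_params, disc_params):
    fake = [generator(z, *gen_params) for z in noise]
    d_real = [discriminator(x, *disc_params) for x in real]
    d_fake = [discriminator(x, *disc_params) for x in fake]
    # Discriminator: score real data near 1 and fake data near 0.
    d_loss = -(sum(math.log(p) for p in d_real) / len(d_real)
               + sum(math.log(1.0 - p) for p in d_fake) / len(d_fake))
    # Generator (non-saturating form): make the discriminator score fakes near 1.
    g_loss = -sum(math.log(p) for p in d_fake) / len(d_fake)
    return d_loss, g_loss


real = [3.8, 4.1, 4.3, 3.9]     # made-up "real" samples clustered near 4
noise = [-1.0, -0.3, 0.4, 1.2]  # fixed noise values, for reproducibility

# An untrained generator (fakes near 0) against a discriminator that splits at x = 2.
d_loss, g_loss = gan_losses(real, noise, gen_params=(1.0, 0.0), disc_params=(1.0, -2.0))
print("discriminator loss:", d_loss)
print("generator loss:", g_loss)
```

In this configuration the discriminator separates real from fake easily, so its loss is low and the generator's loss is high. Training alternates gradient steps on the two losses so that the generator's samples drift toward the real data.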

6. Multilayer Perceptrons (MLPs)

Multilayer Perceptrons (MLPs) are among the most basic and oldest deep learning techniques, used to build speech recognition, financial prediction, and data compression systems. An MLP consists of an input layer and an output layer with one or more hidden layers between them, and each layer is fully connected to the next. The data passes from the input layer to the output layer via the hidden layers. Signals travel in one direction only, which makes MLPs feed-forward networks.
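
The one-directional flow can be sketched as a forward pass through fully connected layers. The weights below are hand-set so that a two-neuron hidden layer computes XOR; they are an illustrative assumption, and in practice the weights would be learned with backpropagation.

```python
def relu(values):
    return [max(0.0, v) for v in values]


def dense(x, weights, biases):
    """Fully connected layer: one row of weights and one bias per output neuron."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def mlp_forward(x, layers):
    """Pass x through each (weights, biases) pair: ReLU on hidden layers, linear output."""
    for index, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if index < len(layers) - 1:
            x = relu(x)
    return x


# Hypothetical hand-set network: 2 inputs -> 2 hidden neurons -> 1 output.
xor_layers = [
    ([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]),  # hidden layer
    ([[1.0, -2.0]], [0.0]),                   # output layer
]

for point in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(point, mlp_forward(point, xor_layers))
```

Without the hidden layer and its nonlinearity, no choice of weights could reproduce XOR, which is the usual motivation for adding hidden layers.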

7. Deep Belief Networks (DBNs)

Deep Belief Networks (DBNs) are generative deep neural networks used for image recognition, drug discovery, video recognition, and motion-capture data. They consist of multiple layers of stochastic latent variables; these variables have binary values and are often called hidden units. A greedy learning algorithm trains the network one layer at a time to learn the top-down, generative weights. The weights determine how the variables in one layer depend on the variables in the layer above.

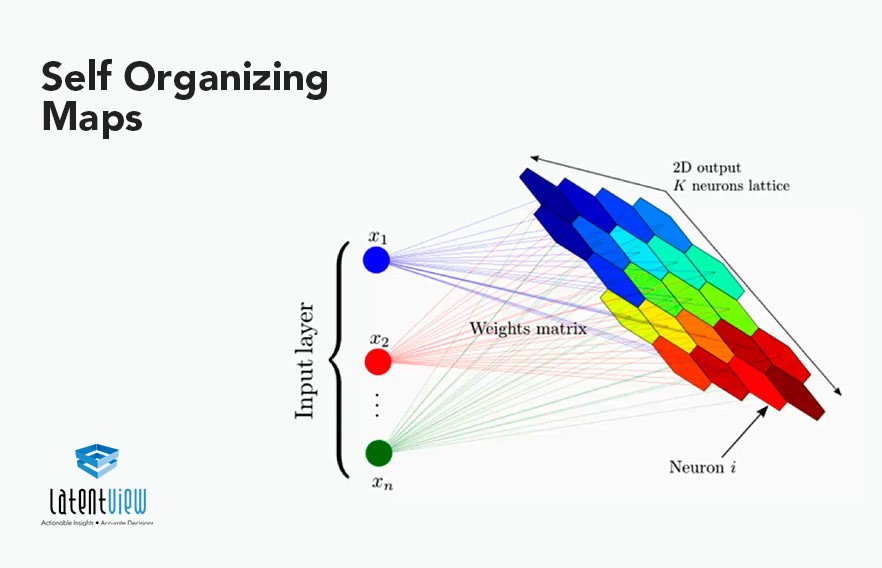
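
The layer-by-layer idea can be sketched as follows: train one RBM on the data, then train the next RBM on the hidden activations of the first, and so on. This sketch assumes single-step contrastive divergence (CD-1) for each layer and covers only the greedy pretraining stage; the toy data, layer sizes, learning rate, and epoch count are arbitrary choices for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def hidden_probs(v, W, c):
    """p(h_j = 1 | v) for every hidden unit; W[i][j] links visible i to hidden j."""
    return [sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(c))]


def visible_probs(h, W, b):
    """p(v_i = 1 | h) for every visible unit, using the same symmetric weights."""
    return [sigmoid(b[i] + sum(h[j] * W[i][j] for j in range(len(h))))
            for i in range(len(b))]


def train_rbm(data, n_hidden, rng, epochs=50, lr=0.1):
    """Train one RBM with CD-1 and return (W, visible biases, hidden biases)."""
    n_visible = len(data[0])
    W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
    b = [0.0] * n_visible
    c = [0.0] * n_hidden
    for _ in range(epochs):
        for v0 in data:
            ph0 = hidden_probs(v0, W, c)
            h0 = [1.0 if rng.random() < p else 0.0 for p in ph0]  # sample hidden units
            v1 = visible_probs(h0, W, b)                          # reconstruction
            ph1 = hidden_probs(v1, W, c)
            for i in range(n_visible):
                for j in range(n_hidden):
                    W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
                b[i] += lr * (v0[i] - v1[i])
            for j in range(n_hidden):
                c[j] += lr * (ph0[j] - ph1[j])
    return W, b, c


def train_dbn(data, layer_sizes, rng):
    """Greedy pretraining: each RBM is trained on the activations of the layer below."""
    layers = []
    layer_input = data
    for n_hidden in layer_sizes:
        W, b, c = train_rbm(layer_input, n_hidden, rng)
        layers.append((W, b, c))
        layer_input = [hidden_probs(v, W, c) for v in layer_input]
    return layers


def dbn_features(v, layers):
    """Propagate an input upward through every trained layer."""
    for W, _, c in layers:
        v = hidden_probs(v, W, c)
    return v


# Made-up binary data with two obvious patterns.
data = [
    [1, 1, 1, 0, 0, 0], [1, 1, 0, 0, 0, 0], [0, 1, 1, 0, 0, 0],
    [0, 0, 0, 1, 1, 1], [0, 0, 0, 0, 1, 1], [0, 0, 0, 1, 1, 0],
]
layers = train_dbn(data, layer_sizes=[4, 2], rng=random.Random(0))
print(dbn_features(data[0], layers))
print(dbn_features(data[3], layers))
```

Because the weights of an RBM are symmetric, the same matrix `W` serves for the bottom-up pass used here and for the top-down, generative direction.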

8. Self-Organizing Maps

Self-Organizing Maps (SOMs) are artificial neural networks that support data visualization by reducing the dimensions of data through a spatially organized representation. Data visualization addresses the problem that humans cannot easily visualize high-dimensional data. A SOM initializes the weights of each node, often randomly, and picks a vector from the training data. It then examines every node to find the one whose weights are most similar to the input vector. This winning node is the Best Matching Unit (BMU). The BMU and its neighbors on the map then move their weights closer to the input vector.

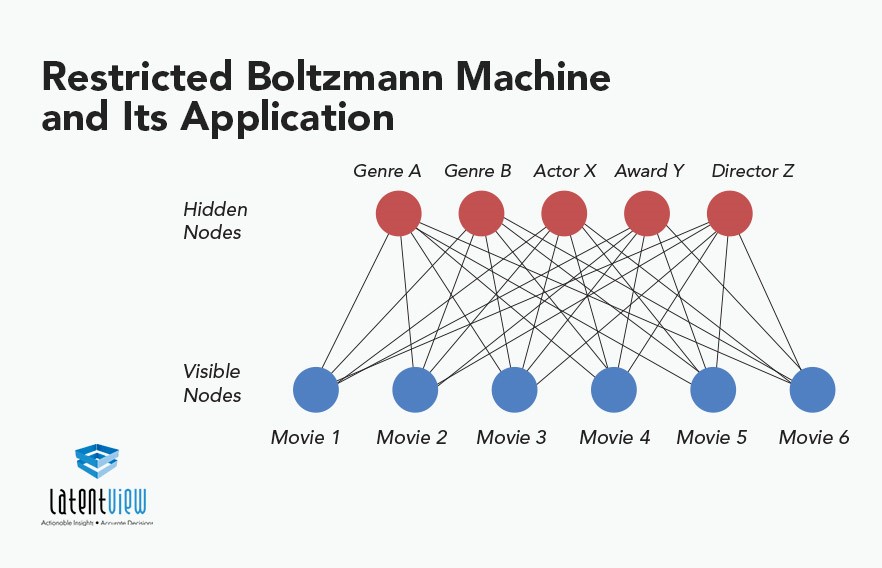
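
The steps above can be sketched as a small training loop. The grid size, the linear decay of the learning rate and neighborhood radius, and the Gaussian neighborhood function are assumptions chosen for brevity; other schedules are common.

```python
import math
import random


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def best_matching_unit(weights, x):
    """Return the (row, col) of the node whose weight vector is closest to x."""
    coords = [(r, c) for r in range(len(weights)) for c in range(len(weights[0]))]
    return min(coords, key=lambda rc: squared_distance(weights[rc[0]][rc[1]], x))


def train_som(data, rows, cols, rng, steps=500, lr0=0.5, radius0=1.5):
    dim = len(data[0])
    # Randomly initialize one weight vector per node of the grid.
    weights = [[[rng.random() for _ in range(dim)] for _ in range(cols)]
               for _ in range(rows)]
    for t in range(steps):
        frac = 1.0 - t / steps                 # assumed linear decay schedule
        lr = lr0 * frac
        radius = max(radius0 * frac, 0.5)
        x = rng.choice(data)                   # pick a training vector
        bmu_r, bmu_c = best_matching_unit(weights, x)
        for r in range(rows):
            for c in range(cols):
                grid_d2 = (r - bmu_r) ** 2 + (c - bmu_c) ** 2
                influence = math.exp(-grid_d2 / (2.0 * radius ** 2))
                node = weights[r][c]
                for k in range(dim):
                    # Pull the node toward x, more strongly the nearer it is to the BMU.
                    node[k] += lr * influence * (x[k] - node[k])
    return weights


# Made-up 3D data (for example RGB colors) mapped onto a 3x3 grid.
colors = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 0.0, 1.0], [0.1, 0.0, 0.9]]
som = train_som(colors, rows=3, cols=3, rng=random.Random(0))
for color in colors:
    print(color, "->", best_matching_unit(som, color))
```

After training, each input is represented by the grid coordinates of its BMU, which is the low-dimensional, spatially organized view the map provides.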

9. Restricted Boltzmann Machines (RBMs)

Restricted Boltzmann Machines (RBMs) are among the older deep learning algorithms, used for dimensionality reduction, classification, regression, collaborative filtering, feature learning, and topic modeling. They are the building blocks of DBNs. RBMs have two layers: visible units and hidden units. Each visible unit connects symmetrically to all hidden units, and there are no connections between units in the same layer. There is also a bias unit connected to all visible and hidden units. RBMs have no output nodes.

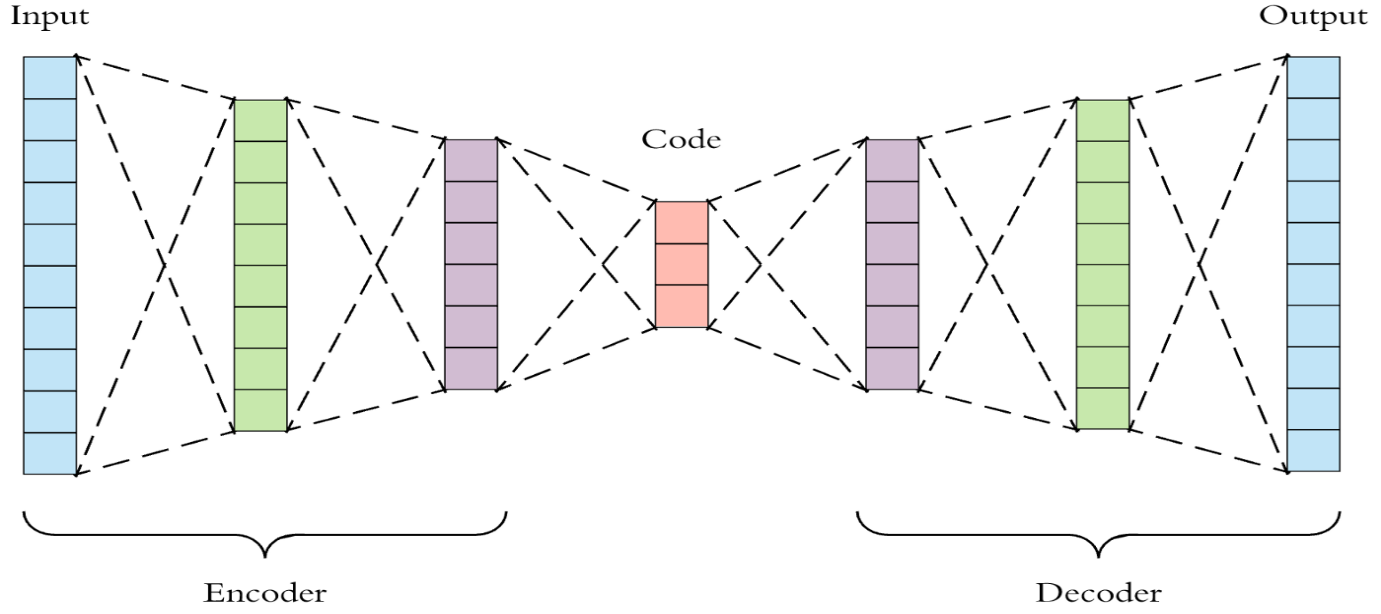
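
One consequence of this restricted structure is that, given the visible units, each hidden unit can be computed independently with a simple sigmoid. The sketch below checks that claim numerically against the standard RBM energy function by enumerating every hidden configuration. The weights and biases are arbitrary illustrative values.

```python
import math
from itertools import product


def energy(v, h, W, b, c):
    """RBM energy; configurations with lower energy are more probable."""
    visible_term = sum(b[i] * v[i] for i in range(len(v)))
    hidden_term = sum(c[j] * h[j] for j in range(len(h)))
    interaction = sum(v[i] * W[i][j] * h[j]
                      for i in range(len(v)) for j in range(len(h)))
    return -(visible_term + hidden_term + interaction)


def hidden_prob_closed_form(v, j, W, c):
    """p(h_j = 1 | v) using the sigmoid shortcut."""
    activation = c[j] + sum(v[i] * W[i][j] for i in range(len(v)))
    return 1.0 / (1.0 + math.exp(-activation))


def hidden_prob_brute_force(v, j, W, b, c):
    """The same probability, obtained by summing exp(-energy) over all hidden states."""
    numerator = 0.0
    denominator = 0.0
    for h in product([0, 1], repeat=len(c)):
        weight = math.exp(-energy(v, h, W, b, c))
        denominator += weight
        if h[j] == 1:
            numerator += weight
    return numerator / denominator


# Hypothetical RBM with 3 visible and 2 hidden units.
W = [[0.5, -0.2],
     [0.1, 0.8],
     [-0.7, 0.3]]
b = [0.1, -0.1, 0.2]   # visible biases
c = [0.0, 0.4]         # hidden biases
v = [1, 0, 1]

for j in range(len(c)):
    print(j, hidden_prob_closed_form(v, j, W, c), hidden_prob_brute_force(v, j, W, b, c))
```

The two columns agree because no hidden unit is connected to another hidden unit, so the sum over hidden configurations factorizes.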

10. Autoencoders

Autoencoders are artificial neural networks that learn to convert high-dimensional data into a low-dimensional representation, and they are used for pharmaceutical discovery, popularity prediction, and image processing. They are trained to reproduce their input at the output, which forces them to learn how to compress and encode data efficiently. An autoencoder has three components: the encoder, the code, and the decoder. For images, the encoder first compresses the image data into the code. The decoder then decodes the code and generates a reconstructed image.
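
The three components can be sketched with a tiny linear autoencoder that compresses 3D points into a single number and reconstructs them. The data, initial weights, learning rate, and epoch count are assumptions made for this example; real autoencoders use nonlinear layers and much larger inputs such as images.

```python
def encode(x, enc):
    """Encoder: compress the input vector into a one-number code."""
    return sum(w * xi for w, xi in zip(enc, x))


def decode(code, dec):
    """Decoder: expand the code back into a full-size vector."""
    return [w * code for w in dec]


def reconstruction_error(data, enc, dec):
    """Mean squared distance between inputs and their reconstructions."""
    total = 0.0
    for x in data:
        x_hat = decode(encode(x, enc), dec)
        total += sum((a - b) ** 2 for a, b in zip(x_hat, x))
    return total / len(data)


def train(data, enc, dec, epochs=200, lr=0.1):
    """Stochastic gradient descent on the squared reconstruction error."""
    enc, dec = list(enc), list(dec)
    for _ in range(epochs):
        for x in data:
            code = encode(x, enc)
            residual = [h - xi for h, xi in zip(decode(code, dec), x)]
            grad_dec = [2.0 * r * code for r in residual]
            back = 2.0 * sum(r * w for r, w in zip(residual, dec))
            grad_enc = [back * xi for xi in x]
            dec = [w - lr * g for w, g in zip(dec, grad_dec)]
            enc = [w - lr * g for w, g in zip(enc, grad_enc)]
    return enc, dec


# Made-up 3D data that actually lies on a line, so a one-number code can capture it.
direction = [1 / 3, 2 / 3, 2 / 3]
data = [[t * d for d in direction] for t in (-1.0, -0.5, 0.5, 1.0)]

initial_enc = [0.5, 0.5, 0.5]
initial_dec = [0.5, 0.5, 0.5]
initial_error = reconstruction_error(data, initial_enc, initial_dec)
enc, dec = train(data, initial_enc, initial_dec)
final_error = reconstruction_error(data, enc, dec)
print("error before training:", initial_error)
print("error after training:", final_error)
print("code for first point:", encode(data[0], enc))
```

The network is never told that the data lies on a line; it discovers a usable one-dimensional code only because that is the cheapest way to reproduce its input.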