Machine learning is a set of techniques that let machines learn patterns (relationships) in data and use them to perform the tasks they were trained for. In simple terms, it lets machines be trained for tasks such as writing essays, converting text to speech (or speech to text), recognizing objects in images, and a growing list of other applications. Machine learning is usually divided into three paradigms: supervised learning, unsupervised learning, and reinforcement learning (the focus of this article). Supervised learning trains a model on a labeled dataset, where each input comes with the correct output. Unsupervised learning works with unlabeled data, and the model itself has to discover structure, such as clusters or relationships, within it. Reinforcement learning differs from both: an agent interacts with an environment, takes actions, and receives rewards (or penalties) from the environment as feedback. The agent is never told which action was the right one; it has to learn by trial and error which choices lead to the highest cumulative reward. With that background, let's explore the top 10 Python libraries that can help you build your own reinforcement learning models.
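The reward loop described above can be sketched in a few lines of plain Python. The `CorridorEnv` class below is a hypothetical toy environment (not from any library): the agent starts in cell 0 and the environment emits a reward of 1 only when the agent reaches the last cell. The agent here acts randomly; reinforcement learning algorithms replace that random choice with one shaped by the rewards the agent has received.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a corridor of cells with the goal in the last one."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        # action 1 moves right, any other action moves left; the ends act as walls
        move = 1 if action == 1 else -1
        self.position = min(max(self.position + move, 0), self.length - 1)
        done = self.position == self.length - 1
        # the environment, not the agent, decides the reward: 1 at the goal, else 0
        reward = 1.0 if done else 0.0
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the total reward the agent collected."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


rng = random.Random(0)


def random_policy(state):
    # ignores the state entirely: a placeholder for a learned policy
    return rng.choice([0, 1])


print(run_episode(CorridorEnv(), random_policy))
```

A policy that always moves right collects the reward on every episode, while one that always moves left never does; learning means discovering the former from reward feedback alone.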

1. KerasRL

KerasRL (keras-rl) connects the popular deep learning library Keras with modular reinforcement learning components, so practitioners can plug Keras neural networks directly into RL agents. It implements common algorithms including DQN (with Double and Dueling variants) for Q-learning with deep networks, DDPG and NAF for continuous action spaces, as well as SARSA and the cross-entropy method (CEM). Because an agent's model is an ordinary Keras model, convolutional and recurrent networks can be used for perception and sequence modeling, and existing Keras models can be reused inside agents. KerasRL suits those who want to bring deep learning and neural networks into end-to-end reinforcement learning pipelines, and its simple API lowers the barrier to entry compared with writing the same agents directly in TensorFlow.
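KerasRL's DQN agent builds its training targets internally. As a library-independent sketch of the idea, the function below computes the standard DQN regression target for a batch of transitions: the reward plus the discounted maximum target-network Q-value of the next state, with no bootstrap term when the episode has ended. The name `dqn_targets` and its list-based arguments are hypothetical; real agents do this on tensors.

```python
def dqn_targets(rewards, next_q_values, dones, gamma=0.99):
    """Compute DQN targets y = r + gamma * max_a' Q_target(s', a') for a batch.

    rewards:       list of floats, one per transition
    next_q_values: list of lists, Q_target(s', a') for every action a'
    dones:         list of bools, True when s' is terminal (no bootstrapping)
    """
    targets = []
    for reward, q_next, done in zip(rewards, next_q_values, dones):
        bootstrap = 0.0 if done else gamma * max(q_next)
        targets.append(reward + bootstrap)
    return targets


print(dqn_targets([1.0, 0.0], [[0.5, 2.0], [3.0, 1.0]], [False, True], gamma=0.5))
# → [2.0, 0.0]
```

The first transition bootstraps from the larger next-state value (2.0), while the terminal transition keeps only its reward.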

2. Pyqlearning

Pyqlearning provides modular implementations of foundational Q-learning approaches, including DQN and extensions such as Double DQN and Dueling DQN that improve performance. The library offers flexibility through both NumPy and PyTorch interfaces for representing neural networks. Because it focuses specifically on the Q-learning family of algorithms, it makes it easy to experiment with DQN hyperparameters and architecture choices. The codebase is designed for modularity and education, with a well-documented implementation and components. Pyqlearning is a good choice for those who want to focus on Q-learning and DQN techniques rather than more modern policy gradient approaches.
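Pyqlearning's own classes are not shown here. Instead, the sketch below illustrates the tabular Q-learning update that the whole Q-learning family builds on, with epsilon-greedy action selection. The five-state chain environment, the function name, and the hyperparameter values are hypothetical, chosen only to keep the example self-contained.

```python
import random


def q_learning(n_states=5, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical chain: action 1 moves right, 0 moves left.

    Reaching the last state gives reward 1 and ends the episode.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # epsilon-greedy: explore with probability epsilon, break ties randomly
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice([0, 1])
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = min(max(state + (1 if action == 1 else -1), 0), n_states - 1)
            done = next_state == n_states - 1
            reward = 1.0 if done else 0.0
            # Q-learning update: move Q(s, a) toward r + gamma * max_a' Q(s', a')
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = q_learning()
# greedy action in every non-terminal state (1 = move right)
greedy_policy = [0 if left > right else 1 for left, right in q_table[:-1]]
print(greedy_policy)
```

DQN keeps the same target, r + γ max Q(s', a'), but replaces the table with a neural network trained toward it.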

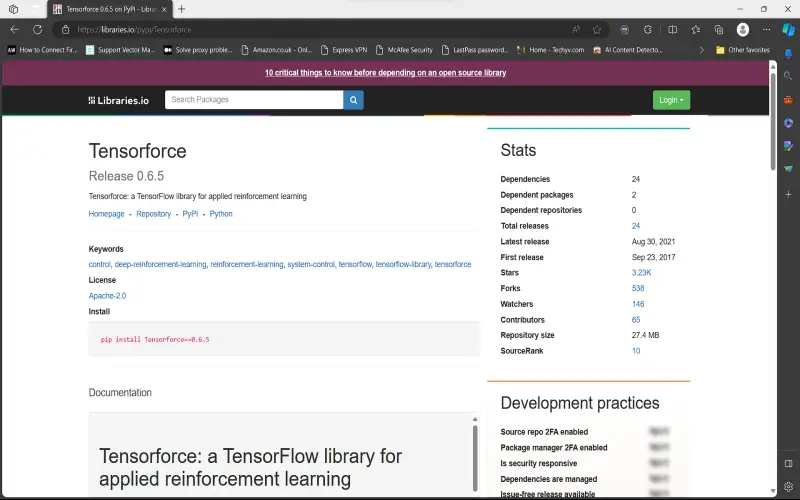

3. Tensorforce

Tensorforce is a full-featured TensorFlow-based library for implementing many popular RL algorithms. It includes modular configurations for DQN variants, policy gradient methods such as REINFORCE and actor-critic, and continuous control with DDPG. It offers abstractions for trying out different neural network architectures, policy distributions, and exploration strategies such as epsilon-greedy. Tensorforce makes it easy to use the power and flexibility of TensorFlow for RL, and its modularity and documentation make it well suited to research and education. It is lower level than some libraries, but in exchange it gives more control over individual components.
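Tensorforce lets you configure exploration rather than hand-code it. As a library-independent illustration, the sketch below shows epsilon-greedy action selection combined with a linearly decaying epsilon, one common schedule. The function names and the schedule's start value, end value, and decay length are assumptions for the example, not Tensorforce parameters.

```python
import random


def linear_epsilon(step, start=1.0, end=0.05, decay_steps=1000):
    """Linearly anneal epsilon from `start` to `end` over `decay_steps` steps."""
    fraction = min(step / decay_steps, 1.0)
    return start + fraction * (end - start)


def epsilon_greedy(q_values, epsilon, rng):
    """Pick a random action with probability epsilon, else the highest-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


rng = random.Random(42)
q_values = [0.1, 0.9, 0.3]
for step in (0, 500, 1000):
    eps = linear_epsilon(step)
    print(f"step={step} epsilon={eps:.3f} action={epsilon_greedy(q_values, eps, rng)}")
```

Early in training the agent acts almost entirely at random; once epsilon has decayed it mostly exploits its current value estimates while still exploring occasionally.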

4. RL_Coach

RL_Coach (Coach), from Intel AI Lab, provides implementations of modern algorithms including PPO and Clipped PPO with Generalized Advantage Estimation, A3C, DDPG, and more. Preset configurations make it easy to train agents on benchmark environments such as Atari games. Coach also supports distributed training across clusters, which makes it suitable for large-scale learning. Its high-level components and abstractions reduce the amount of deep RL expertise needed to get started. The library is a good fit for those who want to apply cutting-edge techniques to applied problems with minimal effort.
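Generalized Advantage Estimation blends multi-step TD errors using a decay parameter λ, and it can be computed with a single backward pass over a rollout. The sketch below is a plain-Python illustration of the standard recursion, δ_t = r_t + γV(s_{t+1}) - V(s_t) and A_t = δ_t + γλA_{t+1}, reset at episode boundaries. It is not Coach's implementation; the function name and argument layout are assumptions.

```python
def compute_gae(rewards, values, last_value, dones, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation over one rollout.

    rewards[t], values[t] = V(s_t), dones[t] is True if the episode ended after step t;
    last_value = V(s_T) bootstraps the state after the final step.
    Returns (advantages, returns) where returns[t] = advantages[t] + values[t].
    """
    advantages = [0.0] * len(rewards)
    gae = 0.0
    next_value = last_value
    for t in reversed(range(len(rewards))):
        not_done = 0.0 if dones[t] else 1.0
        # one-step TD error: delta_t = r_t + gamma * V(s_{t+1}) - V(s_t)
        delta = rewards[t] + gamma * next_value * not_done - values[t]
        # exponentially weighted sum of TD errors, cut off at episode boundaries
        gae = delta + gamma * lam * not_done * gae
        advantages[t] = gae
        next_value = values[t]
    returns = [a + v for a, v in zip(advantages, values)]
    return advantages, returns


print(compute_gae([1.0, 0.0, 1.0], [0.5, 0.4, 0.6], 0.0, [False, False, True]))
```

Setting `lam=0` reduces the estimate to the one-step TD error, while `lam=1` gives the full discounted return minus the value baseline; values in between trade bias against variance.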

5. TF-Agents

TF-Agents provides reference implementations of popular RL algorithms built with TensorFlow and Keras. It includes DQN for discrete action spaces, DDPG and TD3 for continuous action spaces, and other agents such as PPO and SAC. Its components (environments, networks, policies, replay buffers, and drivers) are modular and can be combined with distributed TensorFlow and the Reverb replay system to scale up training. It interoperates well with other libraries in the TensorFlow ecosystem, which makes it a natural choice for those who want to train and deploy RL on Google's TensorFlow stack.
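TD3's main change over DDPG is how the critic target is built: it adds clipped noise to the target policy's action (target policy smoothing) and takes the minimum of two target critics (clipped double-Q learning). The sketch below computes that target for a single transition with a one-dimensional action. The callables `target_actor`, `target_q1`, and `target_q2` are hypothetical stand-ins for networks, and this is not TF-Agents' API.

```python
import random


def td3_target(reward, next_state, done, target_actor, target_q1, target_q2,
               rng, gamma=0.99, noise_std=0.2, noise_clip=0.5,
               action_low=-1.0, action_high=1.0):
    """Critic target for one transition in TD3 (scalar action for simplicity)."""
    # target policy smoothing: perturb the target action with clipped Gaussian noise
    noise = max(-noise_clip, min(noise_clip, rng.gauss(0.0, noise_std)))
    next_action = target_actor(next_state) + noise
    next_action = max(action_low, min(action_high, next_action))
    # clipped double-Q: use the smaller of the two target critics' estimates
    next_q = min(target_q1(next_state, next_action), target_q2(next_state, next_action))
    return reward + gamma * (0.0 if done else next_q)


def actor(state):
    return 0.5 * state  # hypothetical deterministic target policy


def q1(state, action):
    return state + action  # hypothetical target critic 1


def q2(state, action):
    return state + action - 0.1  # hypothetical target critic 2 (slightly more pessimistic)


rng = random.Random(0)
print(td3_target(1.0, 0.8, False, actor, q1, q2, rng))
```

Taking the minimum of the two critics counters the overestimation bias that a single critic tends to accumulate in DDPG.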

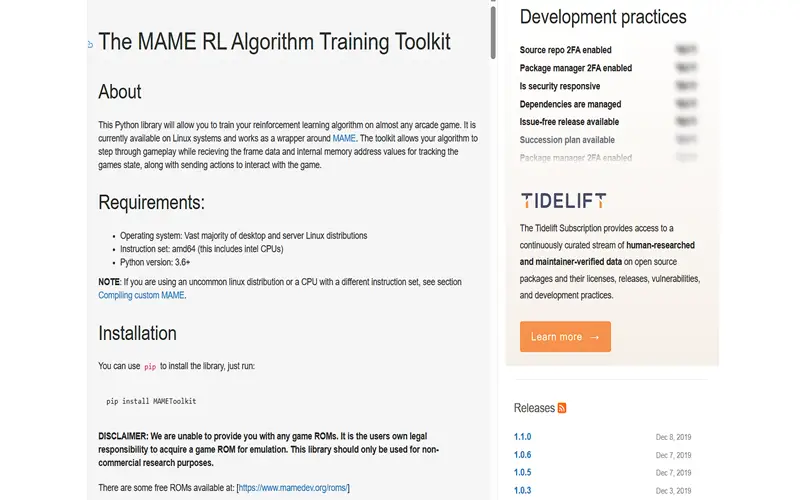

6. MAME RL

MAME RL specializes in training agents to play classic arcade games through emulation, using the MAME arcade emulator much as the Arcade Learning Environment (ALE) does for Atari 2600 titles such as Pong, Breakout, and Space Invaders. It is useful for exploring the fundamentals of training agents that play directly from screen pixels. Because the agent plays the original game through an emulator, it faces the same inputs and constraints as a human player rather than a simplified simulation. MAME RL is ideal for hobbyists and students who want to build intuition and experience with concrete game examples, and it can serve as a stepping stone toward more advanced techniques.
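Agents that learn from pixels usually observe a short stack of recent frames rather than a single screen, so that motion (for example, a ball's direction) becomes visible. The sketch below keeps the last `k` frames with `collections.deque`. Frames are plain nested lists here, and the `FrameStack` class is a hypothetical helper, not part of MAME RL or any other library named in this article.

```python
from collections import deque


class FrameStack:
    """Keep the most recent k frames as the agent's observation."""

    def __init__(self, k=4):
        self.k = k
        self.frames = deque(maxlen=k)  # the oldest frame drops out automatically

    def reset(self, first_frame):
        # at the start of an episode, fill the stack by repeating the first frame
        self.frames.clear()
        for _ in range(self.k):
            self.frames.append(first_frame)
        return list(self.frames)

    def step(self, new_frame):
        self.frames.append(new_frame)
        return list(self.frames)


stack = FrameStack(k=3)
obs = stack.reset([[0, 0], [0, 0]])  # a tiny 2x2 "screen"
obs = stack.step([[1, 0], [0, 0]])
obs = stack.step([[0, 1], [0, 0]])
print(len(obs), obs[-1])
```

Real pipelines typically also convert frames to grayscale and downsample them before stacking, to shrink the input the network has to process.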

7. MushroomRL

MushroomRL offers modular implementations of a broad range of algorithms. On the policy side it includes policy gradient and actor-critic methods such as REINFORCE, PPO, TRPO, DDPG, and SAC, and it also covers value-based methods such as Q-learning, SARSA, and DQN. Its component-based architecture emphasizes flexibility, providing building blocks such as policies, function approximators, and replay memory that work with PyTorch and with benchmark environments such as OpenAI Gym. This makes it easy to compare different algorithms directly on common environments, so the library is well suited to research and education where that kind of side-by-side comparison matters.
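Policy gradient methods such as REINFORCE adjust the parameters of a stochastic policy in the direction of ∇ log π(a), weighted by the return that action earned. The sketch below applies REINFORCE to a hypothetical two-armed bandit (arm 0 pays 0.2, arm 1 pays 1.0) with a softmax policy over two preference values, using the closed-form gradient of the log-softmax. It is a plain-Python illustration, not MushroomRL's API, and the reward values, learning rate, and step count are assumptions.

```python
import math
import random


def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(arm_rewards=(0.2, 1.0), steps=2000, lr=0.1, seed=0):
    """REINFORCE on a hypothetical bandit with deterministic per-arm rewards."""
    rng = random.Random(seed)
    theta = [0.0] * len(arm_rewards)  # softmax preferences, one per arm
    for _ in range(steps):
        probs = softmax(theta)
        action = rng.choices(range(len(theta)), weights=probs)[0]
        reward = arm_rewards[action]  # the return of this one-step episode
        # gradient of log softmax: d/d theta_b log pi(a) = 1[b == a] - pi(b)
        for b in range(len(theta)):
            grad_log_pi = (1.0 if b == action else 0.0) - probs[b]
            theta[b] += lr * reward * grad_log_pi
    return softmax(theta)


print(reinforce_bandit())
```

The policy shifts its probability mass toward the better-paying arm; practical implementations usually subtract a baseline from the return to reduce the variance of these updates.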

8. Stable Baselines

Stable Baselines provides a unified interface to high-quality reference implementations of many RL algorithms. It offers tuned versions of popular techniques such as A2C, PPO, SAC, and TD3 that incorporate recent best practices, and these reliable implementations serve as baselines for comparing algorithmic performance and sample efficiency. Stable Baselines emphasizes straightforward usage for solving problems rather than novel research, and its simple interface lowers the barrier to applying RL to real-world problems. The original library is built on TensorFlow 1; its actively maintained successor, Stable-Baselines3, is built on PyTorch.
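PPO, one of the algorithms Stable Baselines provides, limits each policy update by clipping the probability ratio between the new and old policies. The function below is a plain-Python sketch of that clipped surrogate objective for a batch of samples; the name `ppo_clip_objective` is hypothetical and this is not Stable Baselines code.

```python
import math


def ppo_clip_objective(new_log_probs, old_log_probs, advantages, clip_range=0.2):
    """Mean clipped surrogate objective from PPO (to be maximized)."""
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        ratio = math.exp(new_lp - old_lp)  # pi_new(a|s) / pi_old(a|s)
        clipped_ratio = max(1.0 - clip_range, min(1.0 + clip_range, ratio))
        # take the pessimistic (smaller) of the unclipped and clipped terms
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(advantages)


print(ppo_clip_objective([math.log(2.0)], [0.0], [1.0]))
```

During training the negative of this objective is minimized as the policy loss. With `clip_range=0.2`, a ratio of 2.0 on a positive advantage contributes only 1.2, so the update gains nothing from pushing the policy further in that direction.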

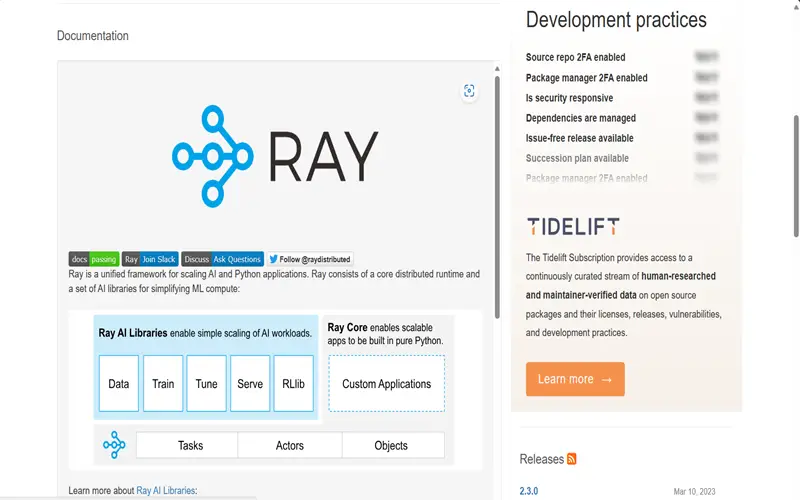

9. RLlib

RLlib provides scalable, high-performance implementations of many RL algorithms for distributed execution. It is built on Ray and ships as part of it, so the same code can scale from a laptop to cloud-based clusters. RLlib integrates with hyperparameter tuning tools such as Ray Tune for large-scale sweeps, and it is designed to reduce the difficulty of productionizing and serving RL systems at scale. It is ideal for applied use cases that require efficient distributed training and serving.
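RLlib distributes sample collection across many rollout workers managed by Ray. As a rough, single-machine analogy only, the sketch below uses Python's `concurrent.futures.ThreadPoolExecutor` to collect episodes from several independent, separately seeded workers and then aggregate their returns. The toy random-walk episode, the worker seeds, and the function names are assumptions, and none of this is RLlib's API.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def rollout(worker_seed, episodes=5, max_steps=50):
    """Hypothetical worker: run random-policy episodes on a toy 1-D walk.

    Returns the list of episode returns collected by this worker.
    """
    rng = random.Random(worker_seed)  # each worker gets its own seeded RNG
    returns = []
    for _ in range(episodes):
        position, total = 0, 0.0
        for _ in range(max_steps):
            position += rng.choice([-1, 1])
            if position == 3:  # goal reached: reward 1 and end the episode
                total += 1.0
                break
        returns.append(total)
    return returns


def collect_parallel(num_workers=4):
    # fan out one rollout task per worker, then gather all episode returns in order
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        results = list(pool.map(rollout, range(num_workers)))
    return [r for worker_returns in results for r in worker_returns]


all_returns = collect_parallel()
print(len(all_returns), sum(all_returns) / len(all_returns))
```

Because of Python's global interpreter lock, threads mainly demonstrate the fan-out/gather pattern here; CPU-bound rollouts need separate processes or a framework such as Ray to run truly in parallel.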

10. Dopamine

Lastly, we have Dopamine, Google's research framework, which provides compact, carefully tested implementations of value-based agents such as DQN, C51, Rainbow, and IQN, designed for rigorous research. It is built with reproducibility in mind: it follows established evaluation recommendations for Atari (such as sticky actions) and provides baseline results for its agents. Dopamine favors a small, readable codebase over breadth of features, which makes it a good benchmark for studying algorithmic details and hyperparameter choices. It is ideal for researchers who want robust, validated implementations rather than high-level abstractions, and it reduces the engineering burden of reinforcement learning research.
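Rainbow, one of the agents in Dopamine, replaces DQN's one-step target with a multi-step (n-step) return: the discounted sum of the next n rewards plus a discounted bootstrap value from the target network. The sketch below computes that target in plain Python; the function and its arguments are hypothetical and it is not Dopamine's implementation.

```python
def n_step_target(rewards, bootstrap_value, terminated, gamma=0.99):
    """n-step target: sum_k gamma^k * r_{t+k} + gamma^n * bootstrap_value.

    rewards:         the next n rewards r_t ... r_{t+n-1} (fewer if the episode ended)
    bootstrap_value: estimate of max_a Q(s_{t+n}, a) from the target network
    terminated:      True if the episode ended within the window (no bootstrap)
    """
    target = 0.0
    for k, reward in enumerate(rewards):
        target += (gamma ** k) * reward
    if not terminated:
        target += (gamma ** len(rewards)) * bootstrap_value
    return target


print(n_step_target([1.0, 0.0, 1.0], bootstrap_value=2.0, terminated=False, gamma=0.5))
# → 1.5
```

With a single reward (n = 1) this reduces to the ordinary DQN target; longer windows propagate reward information faster at the cost of relying on older, possibly off-policy actions.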