Virtualization Basics, Needs and Benefits

Points Covered

- Microsoft Virtualization, VMware, and all other major virtualization companies and their products

- Comparison of each with the others, if possible as a table of their pros (+) and cons (–)

- Brief history of each

- Benefits

- Involved hardware

- Involved software

- Deployments

- Software virtualization and hardware virtualization

Virtualization – An Introduction

Virtualization can be defined as a methodology or framework for dividing a computer's resources into multiple execution environments by applying one or more technologies or concepts, such as hardware and software partitioning, time sharing, partial or complete machine simulation, emulation, and quality of service. Although quality of service belongs to an entirely different field, it is included in this definition because it is so often used together with virtualization. These technologies and concepts are frequently combined in complex ways to build interesting systems whose key property is virtualization. Put simply, virtualization is an intricate concept that works synergistically with a wide range of multiprogramming paradigms. For instance, applications on a *nix system already run in a virtual machine of sorts, since each process is given its own virtual address space.

However, there are exceptions to this definition. The term usually refers to breaking or partitioning something into several entities, but when a ‘Virtualization Layer’ is used to make N disks appear as a single logical disk, it is used in the opposite sense: combining many resources into one. Similarly, grid computing virtualizes distributed IT resources, such as bandwidth, storage, and CPU cycles, enabling on-demand deployment, ad hoc provisioning, and decentralization. The Parallel Virtual Machine (PVM), widely used for distributed computing, is a software package that allows a heterogeneous collection of Windows and UNIX computers connected by a network to be used as a single large parallel computer. Colloquially, virtualization is the ability to “abstract things out”.
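The “N disks as one logical disk” case can be illustrated with a minimal Python sketch. It assumes the simplest possible layout, plain concatenation of the disks, and the class and method names (`LogicalDisk`, `map_block`) are hypothetical rather than taken from any real volume manager:

```python
from bisect import bisect_right
from itertools import accumulate


class LogicalDisk:
    """Presents several physical disks as one logical disk (simple concatenation)."""

    def __init__(self, disk_sizes):
        # disk_sizes[i] is the number of blocks on physical disk i
        self.disk_sizes = list(disk_sizes)
        ends = list(accumulate(self.disk_sizes))
        # starts[i] is the first logical block stored on disk i
        self.starts = [0] + ends[:-1]
        self.total_blocks = ends[-1] if ends else 0

    def map_block(self, lba):
        """Translate a logical block address into (disk index, block on that disk)."""
        if not 0 <= lba < self.total_blocks:
            raise ValueError(f"logical block {lba} is out of range")
        disk = bisect_right(self.starts, lba) - 1
        return disk, lba - self.starts[disk]


disk = LogicalDisk([100, 50, 200])  # three physical disks, sizes in blocks
print(disk.total_blocks)    # 350
print(disk.map_block(120))  # (1, 20): block 120 lives on the second disk
```

Real storage virtualization layers may stripe or mirror blocks across disks instead; in that case only the mapping function would change, while the rest of the system still sees one disk.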

History of Virtualization

Multiprogramming was first used for spooling in the early 1960s as part of the highly acclaimed Atlas project, a collaboration between Ferranti Ltd. and the University of Manchester. Apart from spooling, the Atlas computer also broke new ground in demand paging and in supervisor calls, which were known as ‘extracodes’. The main supervisor program had SERs (Supervisor Extracode Routines) as its key branches; these were activated either by extracode instructions in the object program or by interrupt routines. The Atlas Supervisor thus ran in what was effectively one “virtual machine”, while a separate virtual machine of this kind executed the user programs.

In the mid-1960s, the milestone M44/44X project was initiated at IBM's Watson Research Center to evaluate the emerging concepts of time-sharing systems. The project's architecture was based on the principles of virtualization. The host machine was the M44, a modified IBM 7044, and the virtual machines were experimental images of the 44X, whose address space resided in the M44's memory hierarchy. The system was implemented using multiprogramming and virtual memory.

In the late 1950s and early 1960s, IBM followed its 704 computer with the 709, 7090, and 7094. The Compatible Time-Sharing System (CTSS) was developed at MIT on these IBM machines. The CTSS supervisor had direct control of all trap interrupts. It also scheduled foreground and background jobs, handled console I/O, monitored disk I/O, and managed program recovery and temporary storage during scheduled swapping. In 1963, MIT launched Project MAC, which aimed primarily at designing and implementing a time-sharing system better than CTSS. Despite all these developments, IBM remained the most significant driving force in virtualization, developing a wide range of virtual machine systems over the following decades, most notably CP-40, CP-67, and VM/370. IBM's virtual machines are generally exact copies of the underlying hardware. A vital component of these systems is the Virtual Machine Monitor (VMM), which runs directly on the ‘real’ hardware and creates multiple virtual machines, each running its own OS. Today, IBM's VM line (now z/VM) remains a robust and highly regarded computing platform.

Why Was Virtualization Needed?

Virtual machines were originally developed to overcome the drawbacks of third-generation architectures and of typical multiprogramming operating systems such as OS/360. These systems had a dual-mode hardware organization: a privileged mode, in which software could execute all instructions, and a non-privileged mode, in which it could not. The OS provided a small resident program called the privileged software nucleus, or kernel. User programs made supervisor calls (SVCs, or system calls) to the kernel to have it perform privileged functions, such as I/O, on their behalf. This conventional approach had its shortcomings. It exposed the interface of only a single bare machine, so only one kernel could be booted at a time. Likewise, it was not possible to run an untrusted application securely, or to upgrade, migrate, or debug the system while it kept running. Nor was it possible to present software with hardware configurations that did not physically exist, such as arbitrary amounts of memory, arbitrary storage configurations, or multiple processors. Virtualization was developed as a solution to these problems.

The Benefits – Why is Virtualization So Important?

Here are some of the most important benefits of virtualization –

- Server consolidation: many under-utilized server workloads can be consolidated onto just one or a few machines.

- Significant savings on server hardware, infrastructure management, administration, and environmental costs.

- Support for legacy applications, and for applications that otherwise cannot co-exist in one execution environment (for example, programs that depend on the same System V IPC key).

- Isolated, secure sandboxes for running untrusted applications.

- Execution environments, and even operating systems, with guaranteed resources.

- Simulation of a network of independent computers, and the ability to run several operating systems simultaneously.

- Performance monitoring and powerful debugging without loss of productivity.

- A way to study software behavior by deliberately injecting faults into it.

- Greater mobility of systems and applications, since software becomes easier to move.

- Support for academic experiments and research.

- Co-existence of multiple operating systems on a shared-memory multiprocessor.

- Arbitrary test scenarios, enabling effective and imaginative quality assurance.

- Retrofitting of new features into existing operating systems.

- Binary compatibility, when necessary.

- Co-located hosting.
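Server consolidation is essentially a packing problem. The sketch below uses the first-fit decreasing heuristic, one common choice but not the only one, and assumes each workload is given as a percentage of one host's CPU capacity; the function name and the sample utilization figures are hypothetical:

```python
def consolidate(workloads, host_capacity=100):
    """Pack workloads onto as few hosts as possible (first-fit decreasing).

    workloads: CPU demand of each server, as a percentage of one host's capacity.
    Returns a list of hosts, each a list of the workloads placed on it.
    """
    hosts = []
    for load in sorted(workloads, reverse=True):   # place the largest first
        if load > host_capacity:
            raise ValueError(f"workload {load} does not fit on any host")
        for host in hosts:                         # first host with room wins
            if sum(host) + load <= host_capacity:
                host.append(load)
                break
        else:                                      # no host had room: add one
            hosts.append([load])
    return hosts


# Ten lightly loaded servers (hypothetical utilization figures, in percent)
servers = [15, 10, 20, 5, 30, 25, 10, 5, 15, 20]
hosts = consolidate(servers)
print(len(hosts))  # 2
print(hosts)       # [[30, 25, 20, 20, 5], [15, 15, 10, 10, 5]]
```

In practice one would usually set `host_capacity` below 100 to leave headroom for load spikes, and consider memory and I/O as well as CPU.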

Basic Fundamentals of Virtualization – Emulation Vs Simulation

Virtualization relies on a software layer called the Virtual Machine Monitor (VMM), which gives several ‘virtual machines’ the illusion of running on a ‘real’ machine. The VMM may run directly on the real hardware without a host OS, in which case it functions as a minimal OS, or it may run as an application on top of a host OS, using the host's OS API for its functions. Whether instruction-set emulation is involved depends on how similar the virtual and host architectures are. If they differ, every guest instruction must be executed in software. If they are the same, non-privileged instructions can execute directly on the real processor, while privileged instructions are trapped and handled in software by the VMM. Some virtualization products, such as Simics and SimOS, take an entirely different approach: complete machine simulation.
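The split between directly executed and trapped instructions is often called trap-and-emulate. The following Python model is a toy sketch of that idea only: the instruction names, the `CPU` and `VMM` classes, and the set of privileged operations are all invented for illustration, and the "hardware trap" is modeled as a Python exception:

```python
class PrivilegedInstructionTrap(Exception):
    """Raised by the simulated CPU when a privileged op runs in user mode."""


PRIVILEGED = {"cli", "sti", "load_page_table"}  # hypothetical privileged ops


class CPU:
    """Toy CPU that always executes guest code in non-privileged mode."""

    def execute(self, regs, instr):
        op, *args = instr
        if op in PRIVILEGED:
            raise PrivilegedInstructionTrap(instr)  # models the hardware trap
        if op == "mov":
            dst, value = args
            regs[dst] = value
        elif op == "add":
            dst, value = args
            regs[dst] = regs.get(dst, 0) + value
        else:
            raise ValueError(f"unknown instruction {op!r}")


class VMM:
    def __init__(self):
        self.cpu = CPU()

    def run(self, program):
        regs = {}  # guest registers, updated by direct execution
        vstate = {"interrupts_enabled": True, "page_table": None}  # virtual privileged state
        trapped = []
        for instr in program:
            try:
                self.cpu.execute(regs, instr)   # direct execution
            except PrivilegedInstructionTrap:
                trapped.append(instr[0])
                self.emulate(vstate, instr)     # emulate against virtual state only
        return regs, vstate, trapped

    @staticmethod
    def emulate(vstate, instr):
        op, *args = instr
        if op == "cli":
            vstate["interrupts_enabled"] = False
        elif op == "sti":
            vstate["interrupts_enabled"] = True
        elif op == "load_page_table":
            vstate["page_table"] = args[0]


program = [("mov", "r1", 5), ("cli",), ("add", "r1", 3),
           ("load_page_table", "pt0"), ("sti",)]
regs, vstate, trapped = VMM().run(program)
print(regs)     # {'r1': 8}
print(trapped)  # ['cli', 'load_page_table', 'sti']
```

The key design point is that the privileged instructions never touch real machine state: the VMM applies their effect to the guest's virtual copy of that state.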

Many architectures have been designed with virtualization in mind, but a typical OS and hardware platform are usually not favorable to it. A virtual machine ideally runs in non-privileged mode, so that its privileged instructions trap to the VMM, which then takes the appropriate action. IA-32, for instance, is not virtualization friendly: some of its sensitive instructions, such as STR, can be executed in non-privileged mode without trapping, so the VMM never gets a chance to intervene. In addition, the IA-32 TLB is managed by hardware, whereas SPARC and PA-RISC use software-managed TLBs, which give a VMM more direct control over the kernels and address spaces of its virtual machines. Further problems can arise with system calls, page faults, and idle threads; how many such issues appear depends on the extent of virtualization. Better means of virtualization are still being researched.
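The IA-32 problem can be stated with the classic Popek and Goldberg condition: trapping and emulating privileged instructions works only if every sensitive instruction (one that reads or changes privileged machine state) is also privileged (traps in non-privileged mode). The sketch below checks that condition for a small, illustrative subset of classic IA-32 instructions; the table is deliberately incomplete and reflects processors without hardware virtualization extensions:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instruction:
    name: str
    sensitive: bool   # reads or changes privileged machine state
    privileged: bool  # traps when executed in non-privileged mode


def non_trapping_sensitive(isa):
    """Sensitive instructions that a trap-and-emulate VMM would never see."""
    return [i.name for i in isa if i.sensitive and not i.privileged]


def classically_virtualizable(isa):
    # Popek-Goldberg condition: sensitive instructions must be a subset of privileged ones
    return not non_trapping_sensitive(isa)


# Illustrative subset of classic IA-32 behavior (not a complete list)
IA32_SUBSET = [
    Instruction("ADD", sensitive=False, privileged=False),
    Instruction("LGDT", sensitive=True, privileged=True),
    Instruction("MOV CR3", sensitive=True, privileged=True),
    Instruction("STR", sensitive=True, privileged=False),   # reads the task register
    Instruction("SGDT", sensitive=True, privileged=False),  # reads the GDT base
    Instruction("POPF", sensitive=True, privileged=False),  # IF changes silently ignored in user mode
]

print(non_trapping_sensitive(IA32_SUBSET))     # ['STR', 'SGDT', 'POPF']
print(classically_virtualizable(IA32_SUBSET))  # False
```

Binary translation and hardware-assisted virtualization are two common ways of working around this limitation.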

When the host and guest architectures differ widely, virtualization frameworks may use simulation or emulation. In software terms, an emulator reproduces one system's behavior on another. Software emulators are very common for game consoles and for both new and old hardware architectures. In computing, a simulator can be thought of as an accurate emulator that imitates a real system in detail (simulation). Good examples are the ARMulator for ARM processor cores, Synopsys' VCS Verilog Simulator for ASIC simulation, and simulators for PicoJava processor cores. Besides ordinary simulators and emulators, there is another category called in-circuit emulators, which combine software and hardware to provide powerful and flexible debugging facilities while code runs on the actual hardware.
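At its core, a software emulator is a fetch-decode-execute loop run in software. The Python sketch below interprets a made-up guest instruction set (the opcodes `SET`, `ADDTO`, `DEC`, `JNZ`, and `HALT` are hypothetical) with guest memory held in a dictionary:

```python
def run_guest(program, max_steps=10_000):
    """Interpret a toy guest program in software: fetch, decode, execute."""
    memory = {}  # guest memory: address -> integer
    pc = 0       # guest program counter
    for _ in range(max_steps):
        op, arg = program[pc]  # fetch and decode one guest instruction
        pc += 1
        if op == "SET":        # memory[addr] = value
            addr, value = arg
            memory[addr] = value
        elif op == "ADDTO":    # memory[dst] += memory[src]
            dst, src = arg
            memory[dst] = memory.get(dst, 0) + memory.get(src, 0)
        elif op == "DEC":      # memory[addr] -= 1
            memory[arg] = memory.get(arg, 0) - 1
        elif op == "JNZ":      # jump to target if memory[addr] != 0
            addr, target = arg
            if memory.get(addr, 0) != 0:
                pc = target
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"illegal instruction {op!r} at address {pc - 1}")
    raise RuntimeError("guest exceeded the step limit")


# Guest program: sum 5 + 4 + 3 + 2 + 1 into memory[1]
program = [
    ("SET", (0, 5)),    # 0: counter = 5
    ("SET", (1, 0)),    # 1: total = 0
    ("ADDTO", (1, 0)),  # 2: total += counter
    ("DEC", 0),         # 3: counter -= 1
    ("JNZ", (0, 2)),    # 4: loop while counter != 0
    ("HALT", None),     # 5: stop
]
print(run_guest(program))  # {0: 0, 1: 15}
```

Each guest instruction here costs many host operations, which illustrates why full emulation is much slower than direct execution. A simulator would go further and also model details such as timing.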

Hardware Virtualization

The virtualization of computers or operating systems is referred to as ‘hardware virtualization’. It conceals the physical characteristics of the computing platform from its users and presents an abstract platform instead. It is managed by software referred to as a ‘control program’, ‘VMM’, or ‘Hypervisor’. The term ‘hardware virtualization’ actually covers a whole range of related technologies. Common processor support for it includes Intel's IVT (Intel VT) and AMD's AMD-V. Although these systems are extremely useful for giving several users access to a processor, they can be costly, since they require dedicated software that adds to the cost. In addition, the virtual machines together can never exceed the processing capacity of the physical chip. Some of the most common approaches to hardware virtualization are as follows –

- Full Virtualization: The virtual machine simulates enough hardware to allow an unmodified guest operating system to run in complete isolation.

Examples: Parallels Workstation, VirtualBox, Oracle VM, Virtual Iron, Virtual Server, Virtual PC, VMware Server, VMware Workstation, Mac-on-Linux, QEMU, Adeos, and Parallels Desktop for Mac.

- Hardware-Assisted Virtualization: The hardware provides architectural support that helps in building a VMM and allows guest operating systems to run in complete isolation.

Examples: VMware Workstation, VMware Fusion, Xen, Microsoft Virtual PC, Parallels Workstation, etc. Hardware platforms with integrated virtualization support include IBM's Power Architecture, Intel's and AMD's IOMMUs, Intel's VT-x, AMD's AMD-V for x86, Hitachi's Virtage, and Oracle's SPARC T3 and UltraSPARC T1/T2/T2+.

- Partial Virtualization: The virtual machine simulates multiple instances of much of the underlying hardware environment, in particular address spaces. As a result, an entire guest OS generally cannot run in it, although individual programs can. Partial virtualization usually requires address relocation hardware so that each virtual machine can operate in its own address space.

Examples: first-generation CTSS, the experimental paging system of the IBM M44/44X, MVS, and the Commodore 64.

- Para-virtualization: Instead of fully simulating the hardware, the virtual machine offers a special API, and the guest operating system must be modified to use it.

Examples: TRANGO, z/VM, Logical Domains of Sun, and IBM’s LPARs

- OS-Level Virtualization: A physical server is virtualized at the OS level so that several secure, isolated virtual servers can run on it. The host and guest environments therefore share the same OS kernel.

Examples: iCore Virtual Account, Solaris Containers, Linux-VServer, and OpenVZ.
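To make the para-virtualization approach in the list above more concrete: instead of executing a privileged instruction and relying on a trap, a para-virtualized guest asks the hypervisor explicitly. The sketch below is a hypothetical Python model of such a hypercall interface; the class names and the `set_page_table` hypercall are invented for illustration and do not correspond to any particular hypervisor's API:

```python
class Hypervisor:
    """Hypothetical hypervisor that exposes a small hypercall interface."""

    def __init__(self):
        self.page_tables = {}  # vm_id -> page table installed on its behalf
        self.calls = []        # log of (vm_id, hypercall name)

    def hypercall(self, vm_id, name, *args):
        handler = getattr(self, f"_hc_{name}", None)
        if handler is None:
            raise ValueError(f"unknown hypercall {name!r}")
        self.calls.append((vm_id, name))
        return handler(vm_id, *args)

    def _hc_set_page_table(self, vm_id, table):
        # A real hypervisor would validate the table before installing it
        self.page_tables[vm_id] = dict(table)
        return 0  # success


class ParavirtGuestKernel:
    """Guest kernel modified to call the hypervisor instead of using privileged instructions."""

    def __init__(self, vm_id, hypervisor):
        self.vm_id = vm_id
        self.hv = hypervisor

    def switch_address_space(self, table):
        # An unmodified kernel would load the page-table base register directly
        # (a privileged instruction); this kernel makes an explicit hypercall.
        return self.hv.hypercall(self.vm_id, "set_page_table", table)


hv = Hypervisor()
guest = ParavirtGuestKernel(vm_id=1, hypervisor=hv)
print(guest.switch_address_space({0: 0x8000}))  # 0
print(hv.calls)                                 # [(1, 'set_page_table')]
```

The trade-off is visible in the code: the guest kernel has to be changed, but the hypervisor learns exactly what the guest wants without decoding and emulating trapped instructions.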

Virtualization Frameworks – Brief Overviews

It should now be clear that virtualization provides multiple execution environments in the form of ‘virtual machines’, each of which is a full or partial replica of the underlying computer. Each virtual machine runs in isolation under the supervision of the VMM, yet appears “real” to its user. Over the years, numerous variations and meanings have been introduced under the umbrella term ‘virtual machine’.

In 2003, Microsoft acquired Connectix Corp., a renowned provider of virtualization software for Mac and Windows computing. Around the same time, EMC acquired VMware for $635 million, and soon after, VERITAS acquired the virtualization company Ejascent for nearly $59 million. HP and Sun Microsystems have also been striving of late to bring breakthrough improvements to their virtualization products. Nevertheless, IBM has always been the pioneer in this area.

The following are brief histories and overviews of some of the most commonly used virtualization frameworks, both projects and products, from different companies –

Microsoft Virtual Server

Microsoft contributed significantly to virtualization with Windows NT, which featured multiple execution environments, or subsystems: the Virtual DOS Machine (VDM), Windows on Win32 (WOW, a VM for 16-bit Windows), the OS/2 subsystem, the POSIX subsystem, and the Win32 subsystem. Win32, POSIX, and OS/2 are implemented primarily as server processes, whereas DOS and Win16 programs run inside a VDM process. All of them rely on the Windows NT executive for their basic OS mechanisms. The VDM, derived from the MS-DOS 5.0 code base, is a virtual DOS running on a virtual x86 platform. On x86, its privileged instructions were handled by a special trap handler. Because Windows NT also ran on non-x86 processors such as MIPS, the versions for those platforms had to include an x86 emulator.

Similarly, Windows 95 ran older DOS and Windows 3.x applications in virtual machines, such as the System Virtual Machine (SVM), which ran components like the kernel and GDI. The SVM provided separate address spaces for Win16 and Win32 programs. After acquiring Connectix in 2003, Microsoft offered virtualization as a principal component of its enterprise server offerings. Microsoft has also begun to virtualize its applications: SQL Server 2000, the File/Print Servers, Exchange Server, Terminal Server, and IIS have built-in virtualization capabilities and do not need any virtualization support as such from the OS.

VMware

Founded in 1998, VMware was acquired by EMC in 2003. Its first products were VMware Workstation (1999) and the ESX and GSX Servers (2001). Both GSX Server and VMware Workstation use a hosted architecture that requires a host OS, such as Linux or Windows. These products install and run like an application on the host OS while also acting as the VMM. This eases implementation and improves portability. It also frees the VMM from having to support devices with its own drivers, since it can rely on those of the host OS.

The main components of the hosted architecture of VMware Workstation include –

- VMApp (a user-level application)

- VMDriver (a device driver in the host system)

- VMM (the Virtual Machine Monitor, established by the VMDriver)

The VMDriver is responsible for switching between the native (host) and virtual machine contexts. When the guest system initiates I/O, the VMM traps it and forwards it to VMApp, which performs the I/O in the host context using ordinary system calls. VMware's optimizations significantly reduce the resulting virtualization overheads. Although GSX Server uses the same hosted architecture as Workstation, it is targeted specifically at server applications and deployments.
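The hosted I/O path just described can be modeled in a few lines. The classes below only mirror the roles of the VMM, VMApp, and the host OS; they are a hypothetical sketch, not VMware's implementation, and the disk image name is made up:

```python
class HostOS:
    """Stands in for the host OS, which owns the real devices and drivers."""

    def __init__(self):
        self.files = {}  # path -> bytes, standing in for the host file system

    def write(self, path, data):
        # An ordinary host system call
        self.files[path] = self.files.get(path, b"") + data
        return len(data)


class VMApp:
    """User-level process that performs I/O on the guest's behalf."""

    def __init__(self, host, disk_image):
        self.host = host
        self.disk_image = disk_image  # host file backing the virtual disk

    def handle_io(self, request):
        kind, payload = request
        if kind == "disk_write":
            return self.host.write(self.disk_image, payload)
        raise NotImplementedError(f"unsupported request {kind!r}")


class VMM:
    """Runs in the virtual machine context; traps guest I/O and forwards it."""

    def __init__(self, vmapp):
        self.vmapp = vmapp
        self.context_switches = 0

    def guest_io(self, request):
        self.context_switches += 1   # VMDriver: virtual context -> host context
        result = self.vmapp.handle_io(request)
        self.context_switches += 1   # VMDriver: host context -> virtual context
        return result


host = HostOS()
vmm = VMM(VMApp(host, "guest-disk.img"))
print(vmm.guest_io(("disk_write", b"boot")))  # 4
print(host.files)                             # {'guest-disk.img': b'boot'}
print(vmm.context_switches)                   # 2
```

Counting two context switches per request hints at why each guest I/O is comparatively expensive in a hosted architecture, and why optimizations that reduce these transitions matter.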

Unlike GSX Server and Workstation, ESX Server runs directly on the hardware and therefore does not require a host OS. Through logical and dynamic partitioning, it turns physical computers into pools of secure virtual servers. ESX Server can run several virtual CPUs for each physical CPU.
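Running several virtual CPUs per physical CPU means time-slicing the physical processors among them. A minimal round-robin sketch follows, assuming fixed, equal time slices and hypothetical vCPU and pCPU names; real hypervisor schedulers are considerably more sophisticated:

```python
from collections import deque


def round_robin(vcpus, pcpus, slices):
    """Time-slice virtual CPUs onto physical CPUs in round-robin order.

    Returns one dict per time slice mapping each physical CPU to the vCPU it runs.
    """
    queue = deque(vcpus)
    timeline = []
    for _ in range(slices):
        slot = {}
        for pcpu in pcpus:
            if not queue:
                break
            slot[pcpu] = queue.popleft()
        queue.extend(slot.values())  # preempted vCPUs rejoin the back of the queue
        timeline.append(slot)
    return timeline


vcpus = ["vm1.vcpu0", "vm1.vcpu1", "vm2.vcpu0", "vm3.vcpu0"]  # 4 vCPUs
pcpus = ["pcpu0", "pcpu1"]                                     # 2 physical CPUs
for slot in round_robin(vcpus, pcpus, slices=2):
    print(slot)
# {'pcpu0': 'vm1.vcpu0', 'pcpu1': 'vm1.vcpu1'}
# {'pcpu0': 'vm2.vcpu0', 'pcpu1': 'vm3.vcpu0'}
```

With 4 vCPUs on 2 physical CPUs (a 2:1 ratio), each vCPU runs in every other slice, so over time it receives roughly half of a physical CPU.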

Bochs

Bochs is a highly customizable, open-source x86 emulator that runs in user space. Written in C++ and extremely portable, it emulates an x86 processor, many I/O devices, and a custom BIOS. Its main disadvantage is speed: because it emulates every instruction and every I/O device in software, it is very slow. Bochs' primary author reports around 1.5 MIPS on a 400 MHz Pentium II.
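A back-of-the-envelope reading of that figure shows why full emulation is slow. Assuming the host clock rate is the only factor, each emulated instruction costs roughly:

$$
\frac{400 \times 10^{6}\ \text{host cycles/s}}{1.5 \times 10^{6}\ \text{emulated instructions/s}} \approx 267\ \text{host cycles per emulated instruction}
$$

Since natively executed code typically needs on the order of one cycle per instruction or less on such a processor, this corresponds, under that assumption, to a slowdown of a few hundred times.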

Chorus

The Chorus kernel provides a low-level framework on which distributed operating systems can be implemented. For instance, System V Unix was implemented on top of Chorus using emulation-assist code in the Chorus kernel written specifically for System V.

Denali

Denali is an IA-32-based VMM (described by its authors as an isolation kernel) that runs untrusted services in secure domains. Rather than exposing an exact x86, Denali presents a modified version of the x86 architecture designed for scalability and ease of virtualization.

Disco

Developed as part of a project at Stanford University, Disco is a VMM that runs on top of the hardware and allows many virtual machines to run simultaneously. It is a multithreaded, shared-memory program that virtualizes all the resources of the underlying machine. The virtual hardware it exports includes MIPS R10000 processors, main memory with a contiguous physical address space starting at 0, and devices such as a disk, clock, console, interrupt timers, and network interfaces. Virtual processors are emulated by direct execution on the real processor. The MIPS TLB is reloaded by software, and every TLB entry is tagged with an address space identifier (ASID), so the TLB does not have to be flushed on MMU context switches. Disco adds special device drivers (for example, SCSI, UART, and Ethernet) to the guest OS and intercepts all device accesses from the virtual machines.
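Why ASID tags avoid TLB flushes can be shown with a small model. This is a hypothetical Python sketch, not Disco's code: entries are keyed by (ASID, virtual page), so a context switch only changes the current tag, and a miss is filled by a "software refill" step, as on MIPS. It ignores TLB capacity and eviction, and the page numbers are made up:

```python
class TaggedTLB:
    """Software-reloaded TLB whose entries are tagged with an address space ID (ASID)."""

    def __init__(self):
        self.entries = {}        # (asid, virtual page) -> physical page
        self.current_asid = None
        self.misses = 0

    def context_switch(self, asid):
        # Only the current tag changes; existing entries are kept, not flushed
        self.current_asid = asid

    def translate(self, vpage, page_table):
        key = (self.current_asid, vpage)
        if key not in self.entries:
            # TLB miss: on MIPS this traps to a software refill handler
            self.misses += 1
            self.entries[key] = page_table[vpage]
        return self.entries[key]


tlb = TaggedTLB()
page_tables = {1: {0: 100}, 2: {0: 200}}  # per-address-space page tables (hypothetical)

tlb.context_switch(1)
print(tlb.translate(0, page_tables[1]))  # 100 (miss)
tlb.context_switch(2)
print(tlb.translate(0, page_tables[2]))  # 200 (miss)
tlb.context_switch(1)
print(tlb.translate(0, page_tables[1]))  # 100 (hit: entry survived the switch)
print(tlb.misses)                        # 2
```

Without the ASID in the key, the same virtual page 0 in two address spaces would collide, and the TLB would have to be flushed on every switch to stay correct.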

Ensim

Ensim, with its VPS technology, has been the pioneer in the field of OS virtualization on the commodity hardware. This technology enables the user to partition the OS securely in software, with absolute isolation, manageability, and service quality. Different versions of VPS exist for Windows, Linux, and Solaris.

FreeBSD

FreeBSD's ‘jail’ mechanism lets users create isolated environments in software. It builds on chroot(2), and every jail has its own independent root directory and ‘root’ user. Each jail is confined to a particular IP address and has no access to the files, processes, or services of other jails. The mechanism is implemented by making various FreeBSD components, such as the system call API, the pty driver, and the TCP/IP stack, “jail aware”. ECLIPSE, created at Bell Labs in 1998-1999, was also derived from FreeBSD.
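The file-system and network confinement rules of a jail can be sketched in Python. This is a hypothetical user-space model, not FreeBSD's implementation, which enforces these rules inside the kernel; the sketch also ignores symbolic links, and the jail root and IP address are made-up examples:

```python
import posixpath
from dataclasses import dataclass


@dataclass
class Jail:
    """Hypothetical model of a jail's file-system and network confinement."""
    root: str  # host directory that becomes the jail's "/", as with chroot(2)
    ip: str    # the single IP address the jail may use

    def resolve(self, path):
        """Map a path used inside the jail to a host path under self.root."""
        # Normalize against the jail's own "/" first, so ".." cannot climb above it
        inside = posixpath.normpath(posixpath.join("/", path))
        return posixpath.join(self.root, inside.lstrip("/"))

    def may_bind(self, address):
        """Jailed processes may only use the jail's own IP address."""
        return address == self.ip


www = Jail(root="/usr/jails/www", ip="192.0.2.10")
print(www.resolve("/etc/passwd"))       # /usr/jails/www/etc/passwd
print(www.resolve("../../etc/passwd"))  # /usr/jails/www/etc/passwd
print(www.may_bind("192.0.2.11"))       # False
```

The second call shows the essential property: however many `..` components a jailed process uses, the resolved path never leaves the jail's root directory.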

HP-UX Virtual Partition

Each HP-UX virtual partition (vPar) runs its own copy of HP-UX and provides application and OS isolation. vPars are created dynamically and are assigned specific resources, and several resource partitions can be created within each vPar. The vPar monitor, which sits on top of the hardware, assigns ownership of hardware resources to the virtual machine instances.

The list goes on with many other virtualization frameworks, such as IBM's LPAR, Sun's Solaris, SimOS, Nemesis, UML (User-mode Linux), Simics, Hurricane, Mac-on-Linux, Palladium, and VServer.

Virtualization Companies & Products – A Detailed Comparison of Different Infrastructure Solutions

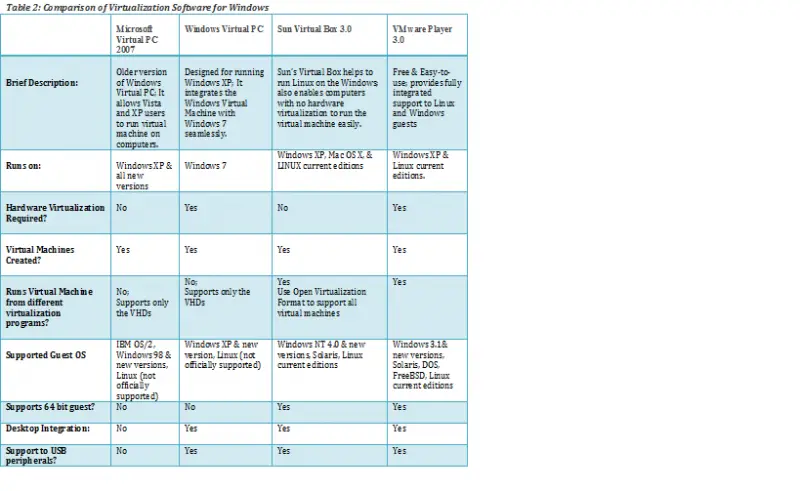

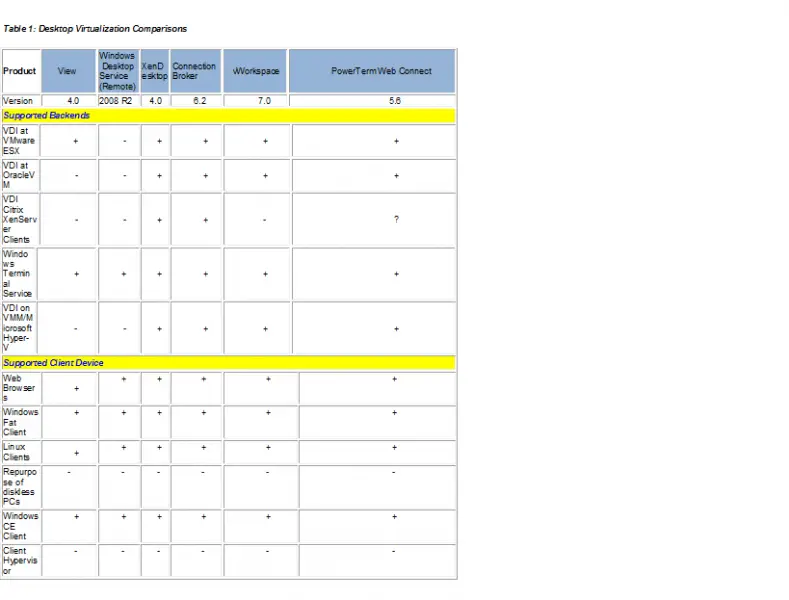

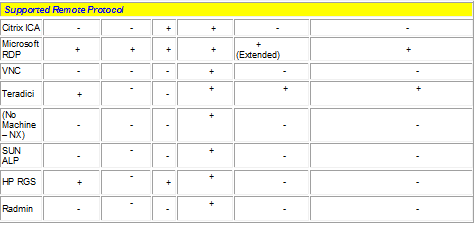

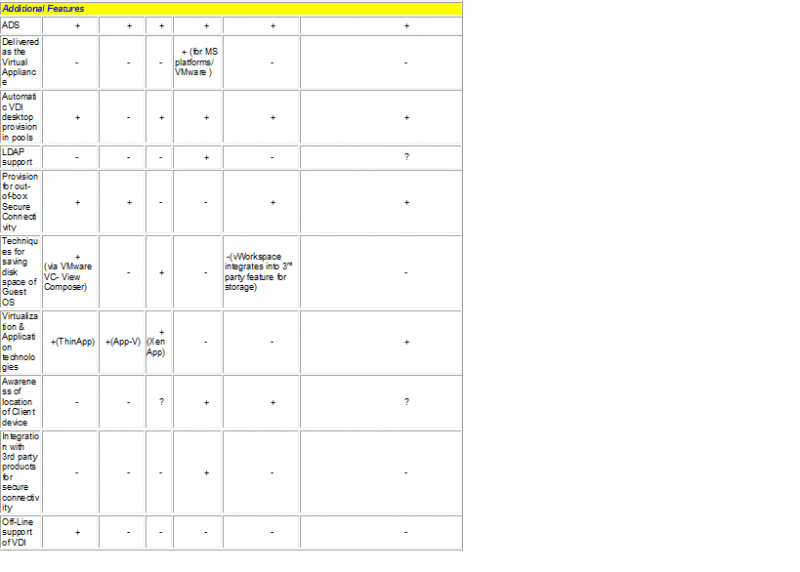

A wide range of virtual infrastructure solutions is currently available for the x86 platform. Six major players can be identified in this market: VMware, Microsoft, Citrix, Oracle, Red Hat, and Novell. They can be compared on market positioning, hypervisor characteristics, VM characteristics, host characteristics, management characteristics, automation, and desktop virtualization. Table 1 compares the six companies on some features of desktop virtualization.

Virtualization software: Virtualization software allows multiple operating systems to run on a single computer. For example, tools such as Parallels let Windows run on a Mac computer (Apple's Boot Camp, by contrast, lets a Mac boot Windows natively, one OS at a time, rather than virtualizing it). Similarly, Microsoft's Virtual PC can run Linux and DOS on a Windows computer. Some virtualization programs require, and others can take advantage of, a processor that supports hardware virtualization (such as Intel VT-x or AMD-V). A number of virtualization software packages are available for Windows. A detailed comparison of four major virtualization software packages is as follows –